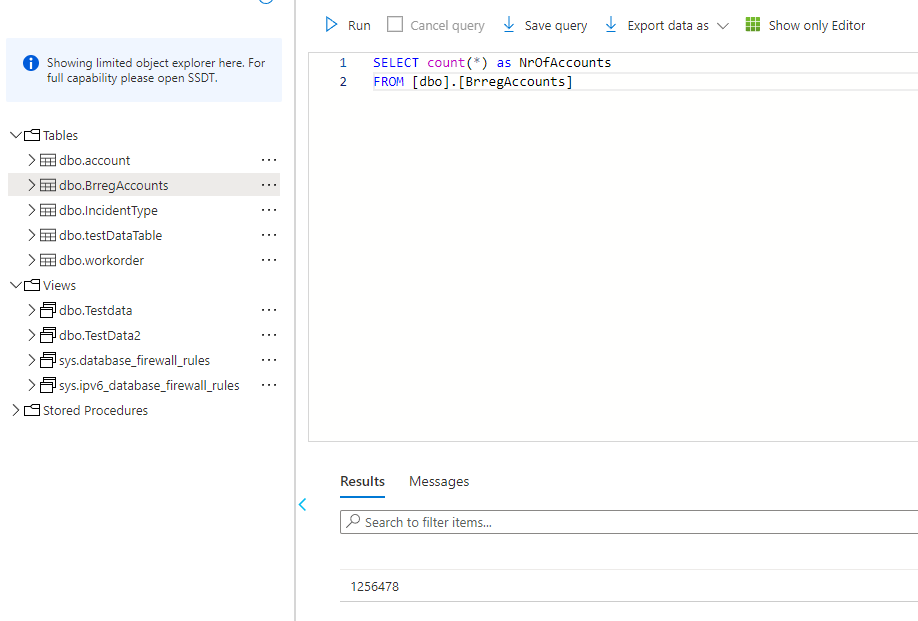

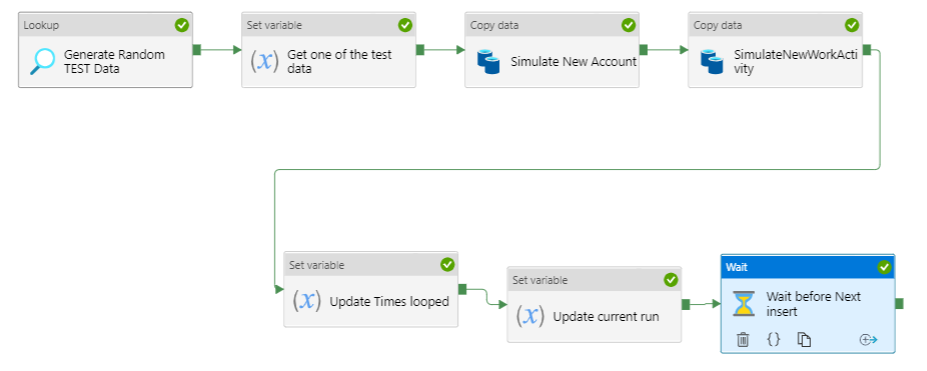

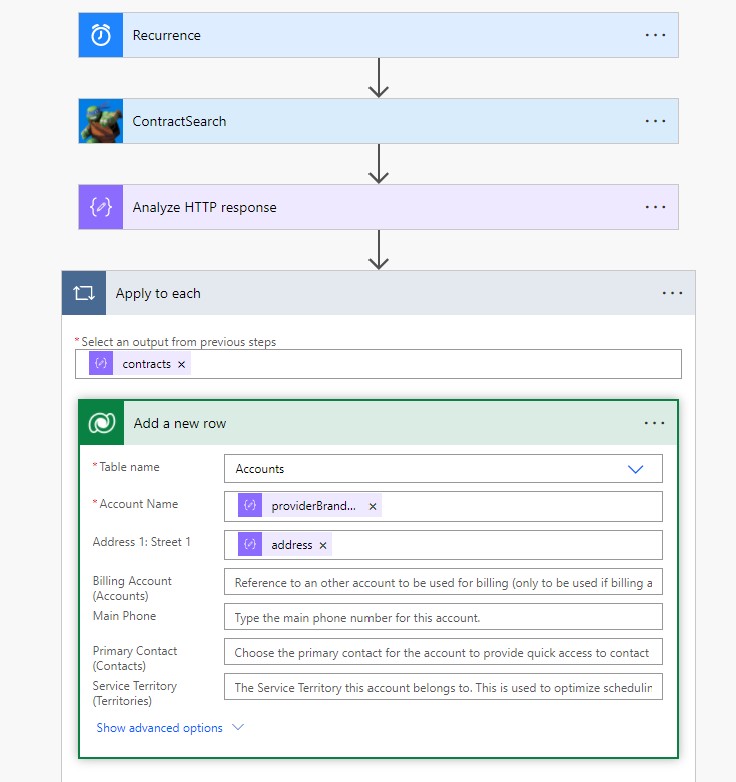

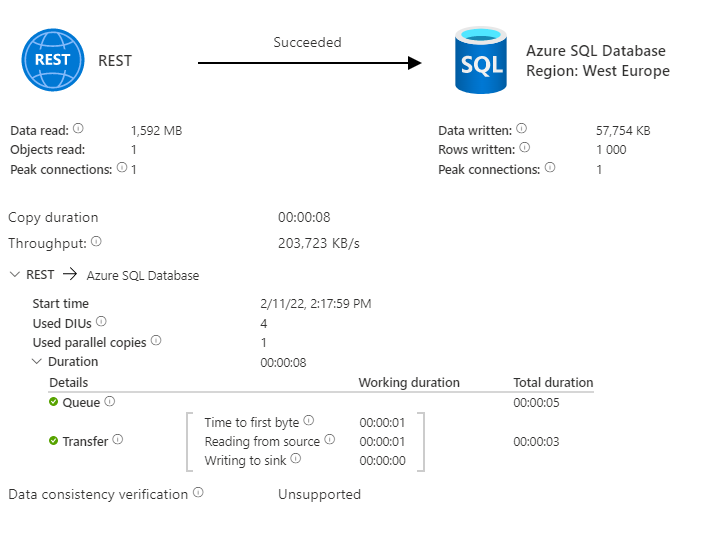

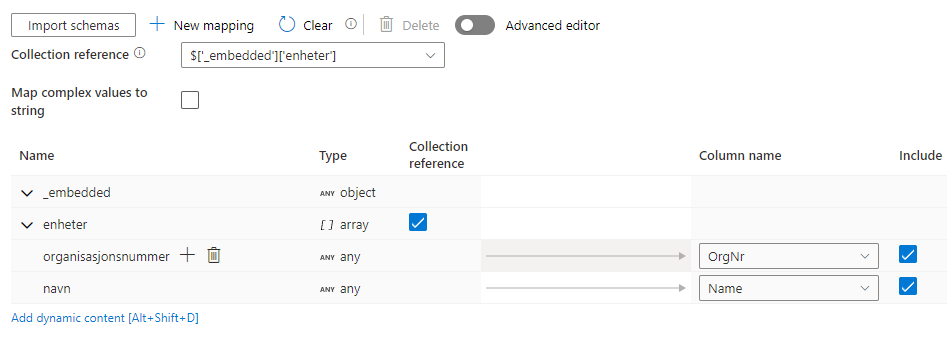

To get more test data, we set up a new source in Azure Data Factory (ADF) that downloads “Account Name” and “Orgnummer” from Brreg.

The result of downloading the first 1000 entities from Brreg:

We kept it simple and used this URL:

https://data.brreg.no/enhetsregisteret/api/enheter?size=1000&page=1
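To show what that single call returns, here is a minimal Python sketch that fetches one page and keeps only the two fields we load. It assumes the response uses the HAL-style layout where entities sit under `_embedded.enheter`, each with `navn` (name) and `organisasjonsnummer` (org number); the function names are hypothetical. We also believe the API numbers pages from 0, so `page=1` may actually return the second batch of 1000; worth checking against the `page.number` field in the response.

```python
import json
import urllib.request

BASE_URL = "https://data.brreg.no/enhetsregisteret/api/enheter"


def extract_accounts(payload: dict) -> list[tuple[str, str]]:
    """Return (Account Name, Orgnummer) pairs from one API response page.

    Assumption: entities are listed under _embedded.enheter, each with
    'navn' and 'organisasjonsnummer'. A page with no entities yields [].
    """
    entities = payload.get("_embedded", {}).get("enheter", [])
    return [(e["navn"], e["organisasjonsnummer"]) for e in entities]


def fetch_page(page: int, size: int = 1000) -> list[tuple[str, str]]:
    """Download one page of entities and reduce it to name/org-number pairs."""
    url = f"{BASE_URL}?size={size}&page={page}"
    req = urllib.request.Request(url, headers={"Accept": "application/json"})
    with urllib.request.urlopen(req, timeout=30) as resp:
        payload = json.load(resp)
    return extract_accounts(payload)
```

Calling `fetch_page(1)` performs the same request as the URL above. Keeping the parsing in its own function makes it easy to check against a saved response without hitting the API.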

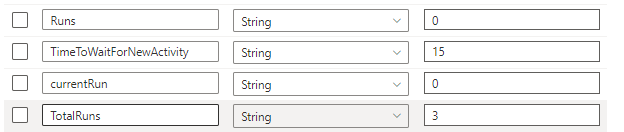

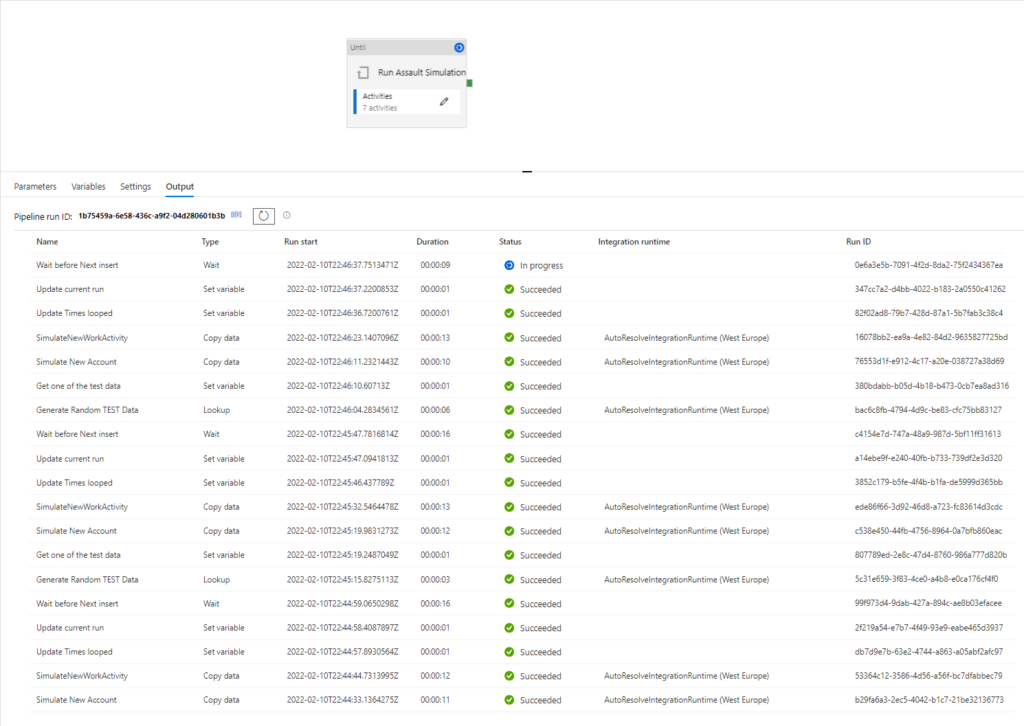

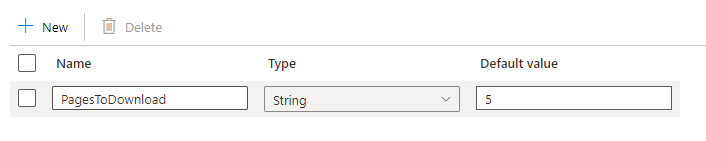

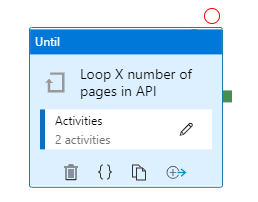

We pass a parameter to ADF that sets how many pages to download. Each page is a batch of 1000 accounts, so changing this one number controls how much data we pull. For example, if we set it to 5, ADF loops until 5 pages have been set in the API URL and downloaded to the source.

For example:

- Run 1: https://data.brreg.no/enhetsregisteret/api/enheter?size=1000&page=1
- Run 2: https://data.brreg.no/enhetsregisteret/api/enheter?size=1000&page=2
- Run 3: https://data.brreg.no/enhetsregisteret/api/enheter?size=1000&page=x
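The loop above can be sketched in Python as one URL per run, driven by the page-count parameter. The parameter names `pages`, `size` and `first_page` are hypothetical (the actual ADF parameter name isn't given), and starting at page 1 simply mirrors the runs listed above.

```python
BASE_URL = "https://data.brreg.no/enhetsregisteret/api/enheter"


def page_urls(pages: int, size: int = 1000, first_page: int = 1) -> list[str]:
    """Build the API URL for each run, one page (batch of `size` accounts) per run."""
    return [
        f"{BASE_URL}?size={size}&page={p}"
        for p in range(first_page, first_page + pages)
    ]


# With the parameter set to 5, this prints run 1 (page=1) through run 5 (page=5).
for run, url in enumerate(page_urls(5), start=1):
    print(f"run {run}: {url}")
```

In ADF itself this would typically be an Until (or ForEach) activity that increments a page variable and builds the URL with dynamic content; the Python version only illustrates which URLs get requested.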

In each iteration, ADF downloads that page and writes the data to the TEST SQL DB.
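As a rough illustration of the write step, here is a sketch that stores each batch of (Account Name, Orgnummer) pairs. It uses Python's built-in `sqlite3` purely as a stand-in for the TEST SQL DB, and the table and column names (`brreg_accounts`, `orgnummer`, `account_name`) are hypothetical.

```python
import sqlite3


def save_accounts(conn: sqlite3.Connection, rows: list[tuple[str, str]]) -> int:
    """Upsert (Account Name, Orgnummer) pairs keyed on the org number.

    Hypothetical schema; sqlite3 stands in for the real TEST SQL DB.
    Returns the number of rows written in this batch.
    """
    conn.execute(
        "CREATE TABLE IF NOT EXISTS brreg_accounts ("
        "orgnummer TEXT PRIMARY KEY, account_name TEXT NOT NULL)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO brreg_accounts (orgnummer, account_name) VALUES (?, ?)",
        [(org, name) for name, org in rows],
    )
    conn.commit()
    return len(rows)
```

Keying on the org number means re-running a page overwrites rows instead of duplicating them. On Azure SQL the equivalent would be a `MERGE` or an upsert setting on the ADF copy sink rather than `INSERT OR REPLACE`.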

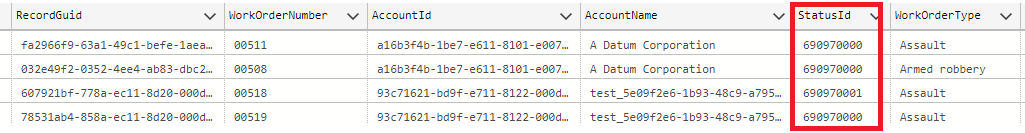

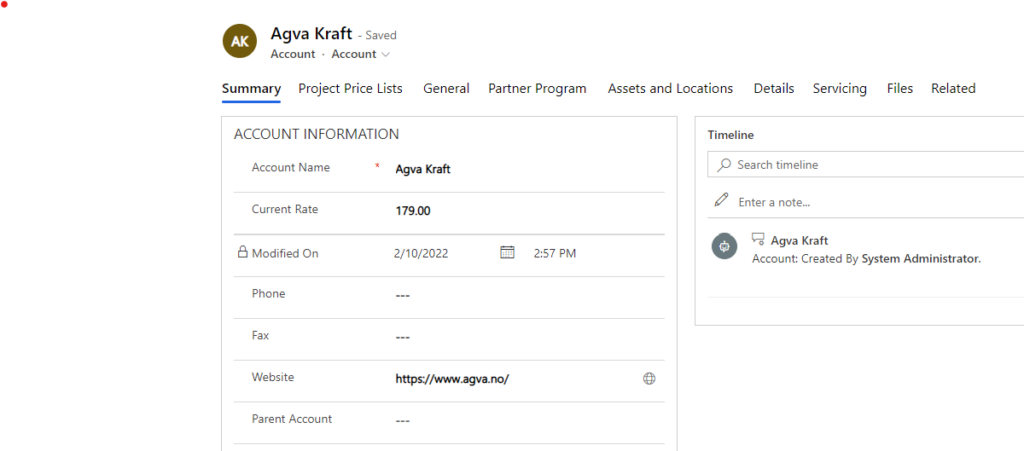

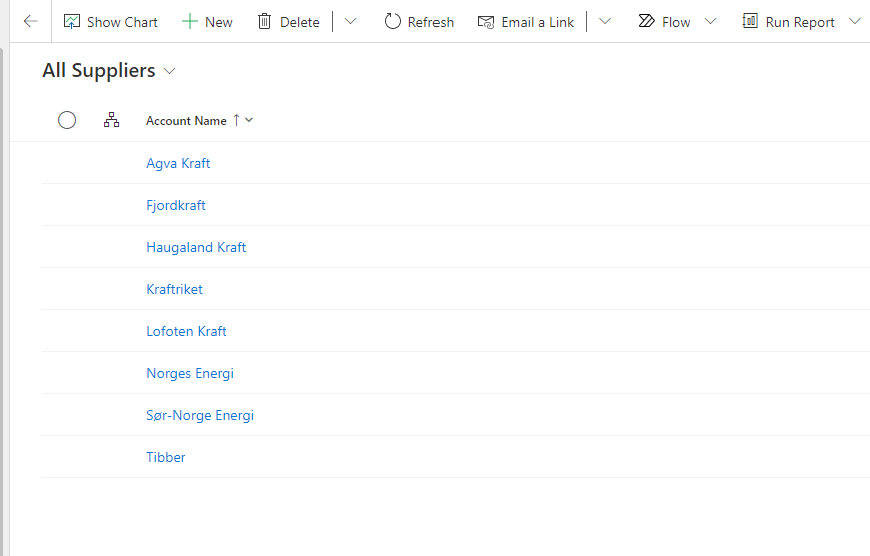

Example of the current result: