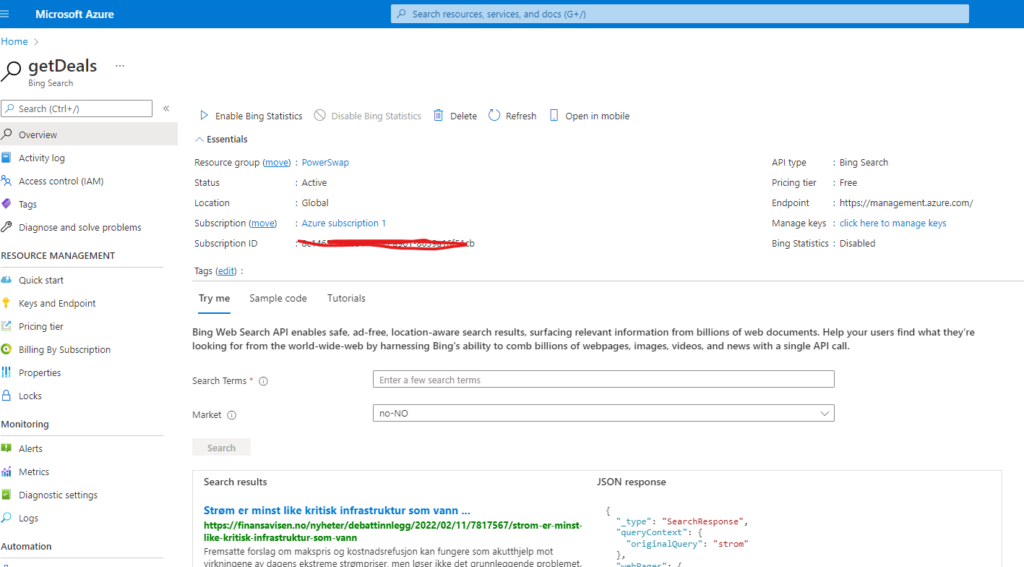

Because we rely on one specific service to get deals, our provider may not give us every deal on the market. That’s why we created a “Web Search API” resource in Azure. It lets us build a flow that searches Bing every day for new deals and notifies us if our provider’s deal isn’t the best one.

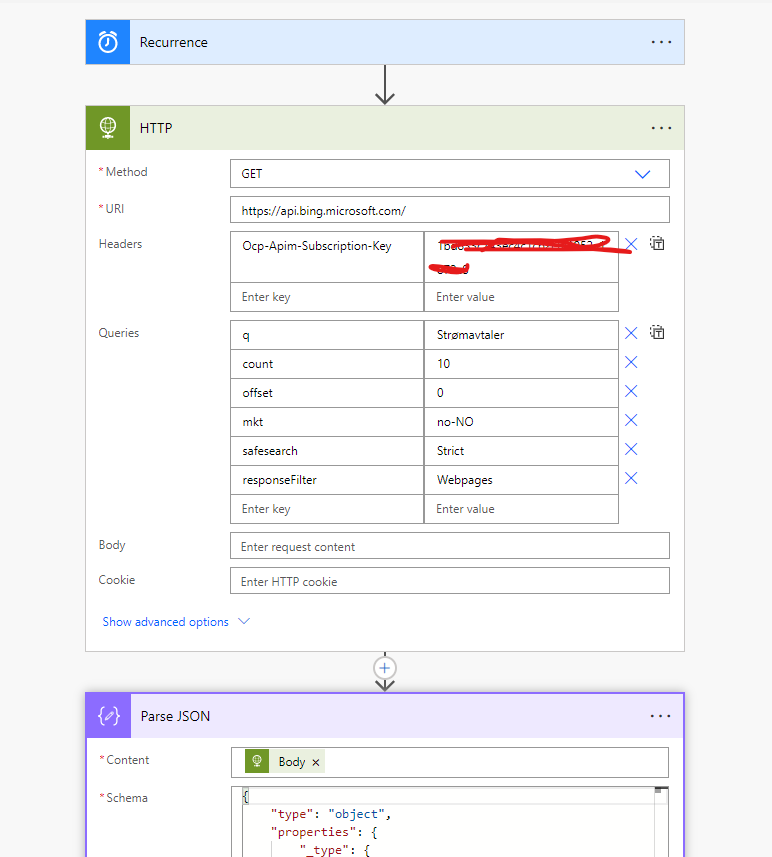

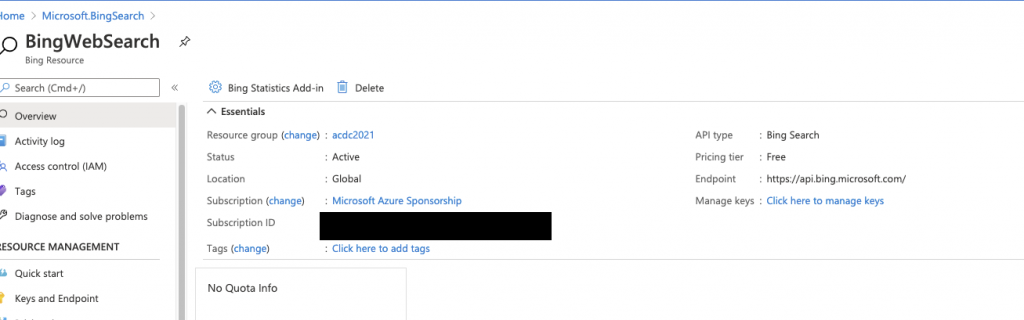

We started off by creating the Web Search API resource in Azure.

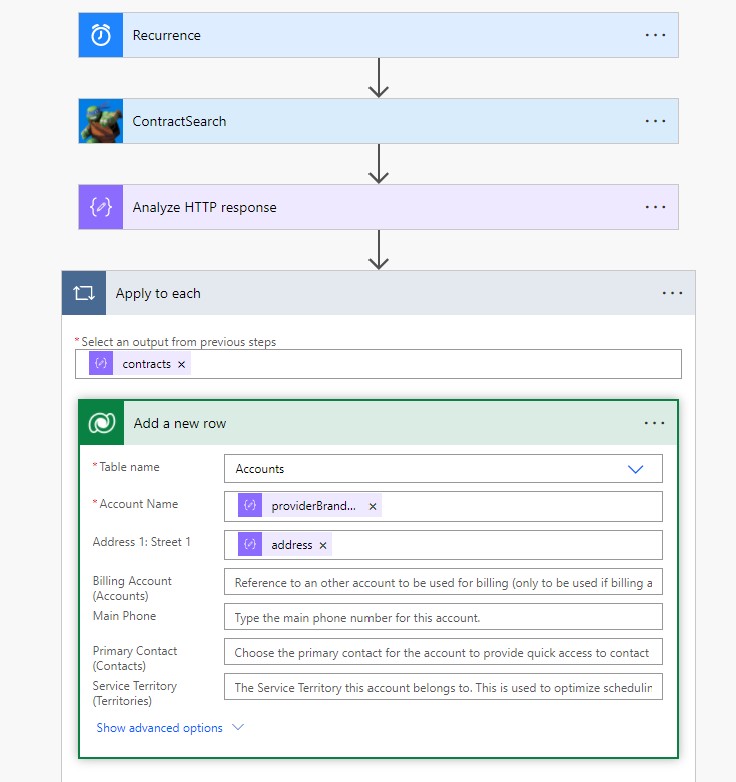

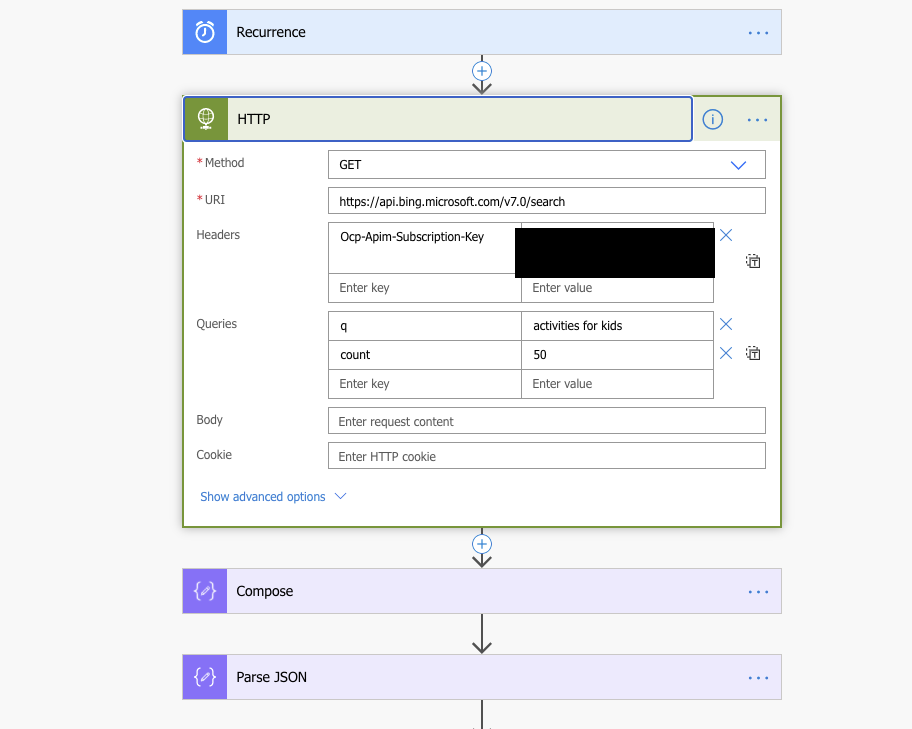

We then created a scheduled flow that runs every day to pick up the deals we don’t catch through our provider’s API. Our query uses the keyword “Strømavtaler” (Norwegian for “electricity contracts”).
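For readers who want to try the same query outside of a flow, here is a minimal sketch of the request in Python. It assumes the Bing Web Search v7 endpoint and the `Ocp-Apim-Subscription-Key` header; the function name, the `nb-NO` market, and the result count are our own illustrative choices, not values taken from the flow. Check the Keys and Endpoint page of your own resource for the actual endpoint.

```python
import urllib.parse
import urllib.request

# Assumed endpoint for Bing Web Search v7; verify it against your Azure resource.
BING_ENDPOINT = "https://api.bing.microsoft.com/v7.0/search"


def build_search_request(query, subscription_key, market="nb-NO", count=20):
    """Build (but do not send) a GET request for a Bing web search."""
    params = urllib.parse.urlencode({"q": query, "mkt": market, "count": count})
    request = urllib.request.Request(f"{BING_ENDPOINT}?{params}")
    # The key is passed as a header, never in the URL.
    request.add_header("Ocp-Apim-Subscription-Key", subscription_key)
    return request


# "YOUR-KEY" is a placeholder; the keyword matches the one used in our flow.
request = build_search_request("Strømavtaler", "YOUR-KEY")
print(request.full_url)
# To actually run the search: urllib.request.urlopen(request).read()
```

Building the request separately from sending it makes it easy to inspect the URL before spending any API quota.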

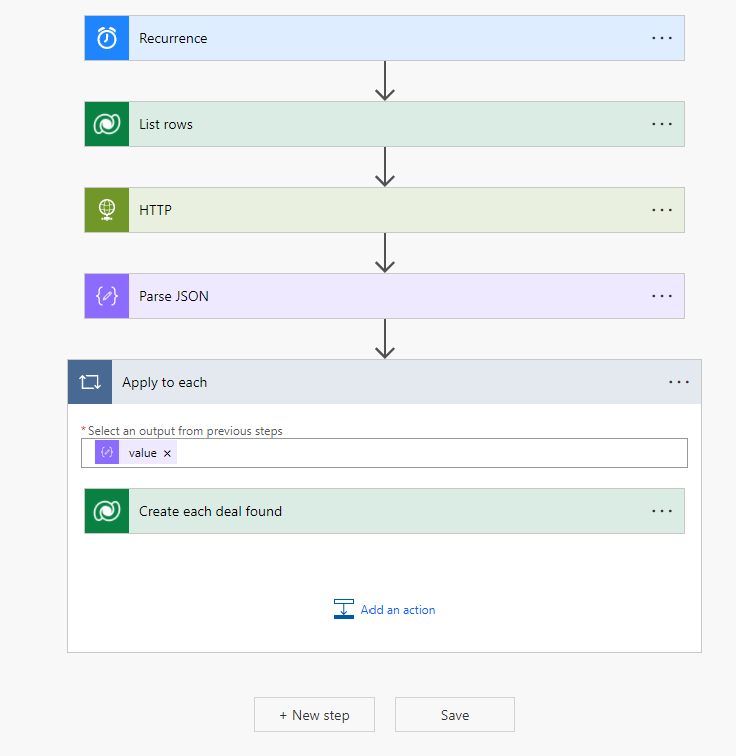

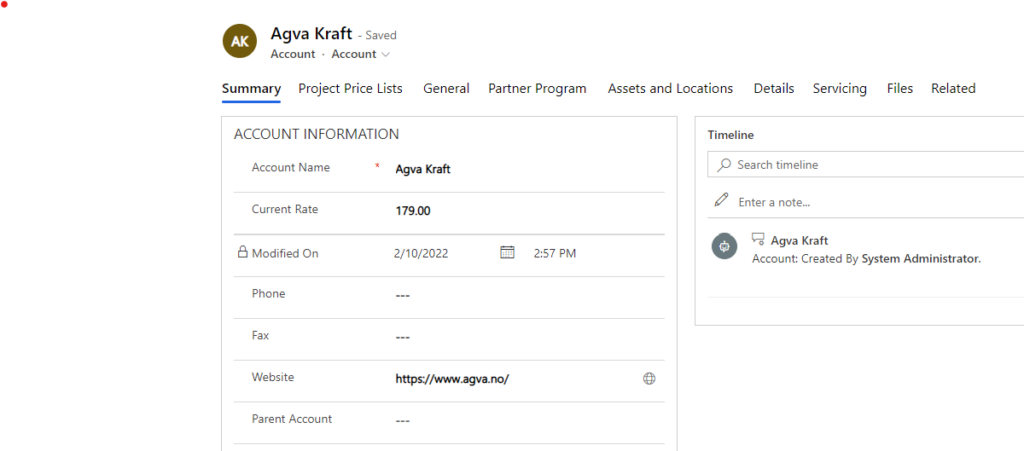

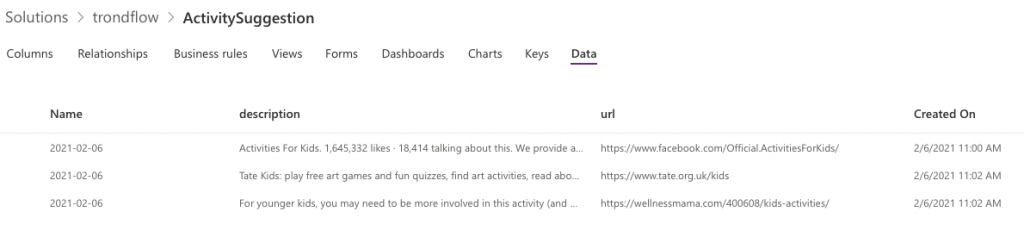

Parsing the JSON response gives us structured data we can work with. We can then add each deal we find as a row in a Dataverse table and create tasks to follow up on them.
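The parsing step can be sketched in Python as well. This assumes the response follows the Bing Web Search v7 shape, where results sit under `webPages.value` with `name`, `url` and `snippet` fields. The `extract_deals` helper and the row layout are hypothetical stand-ins for the Parse JSON action and our Dataverse table, and the sample payload is made up.

```python
import json


def extract_deals(response_text):
    """Turn a raw search response into flat rows, one per web result."""
    payload = json.loads(response_text)
    results = payload.get("webPages", {}).get("value", [])
    return [
        {
            "name": result.get("name", ""),
            "url": result.get("url", ""),
            "snippet": result.get("snippet", ""),
        }
        for result in results
        if result.get("url")  # skip entries we could not follow up on
    ]


# Made-up sample payload, for illustration only.
sample = json.dumps({
    "webPages": {
        "value": [
            {"name": "Deal A", "url": "https://example.com/a", "snippet": "Spot price"},
            {"name": "No link", "snippet": "Missing url"},
        ]
    }
})
rows = extract_deals(sample)
print(rows)
```

Each row would then map to one record in the Dataverse table.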

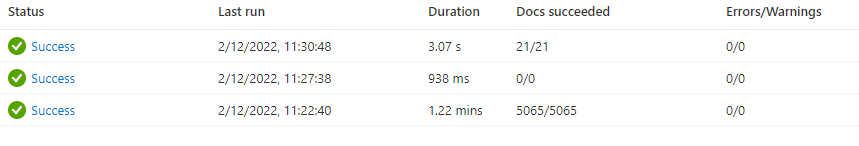

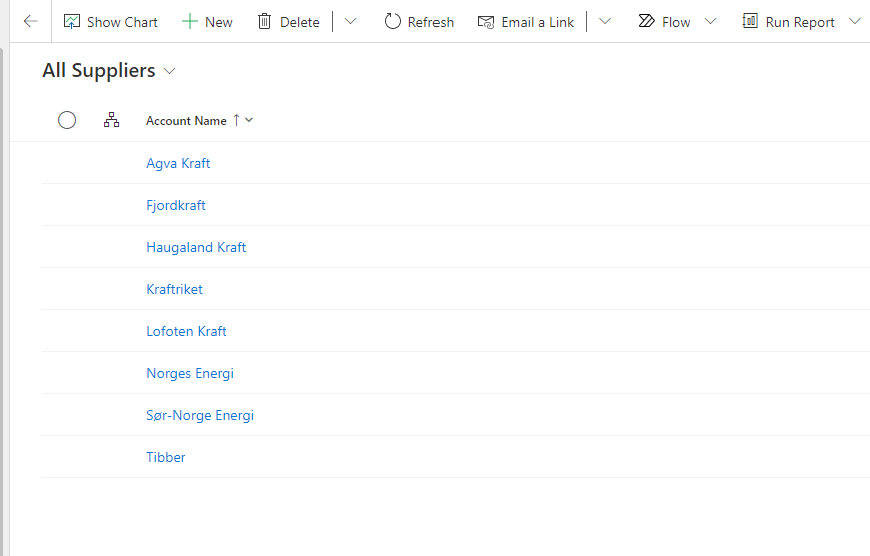

We think this makes an excellent crawler: every day it searches Bing for electricity deals, creates a record in Dataverse for each deal it finds, and lets us follow up on them.

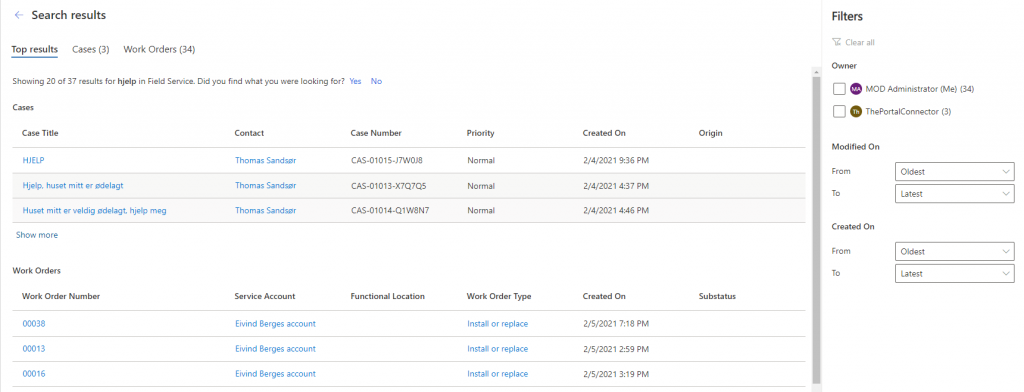

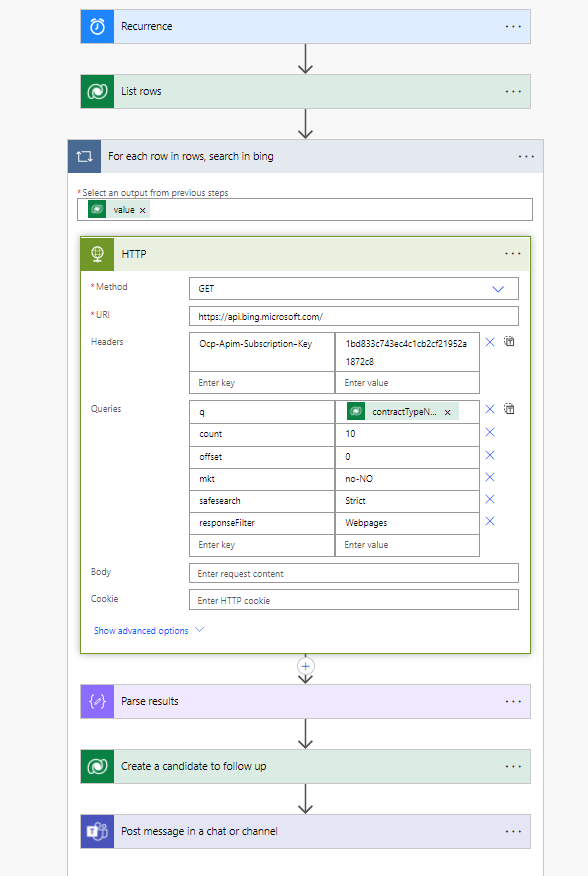

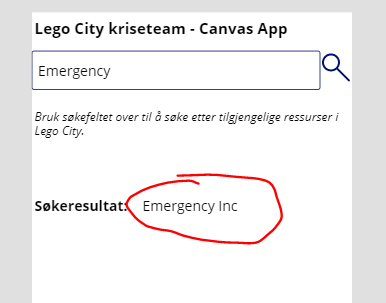

We also extended this with a second flow that runs a daily search for each deal stored in our library of electricity deals, to see what the search engines return for them.

So, based on our contract names, we search Bing each day to look for possibly better deals. The contract names come from the data we capture from www.elskling.no, and we use them to search Bing daily for updates on these contracts.
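As a rough sketch of how the per-contract searches could be prepared, the snippet below turns a list of stored contract names into one query per contract. The helper name, the quoting, the `strømavtale` suffix and the sample names are all assumptions for illustration; the flow itself simply loops over the stored deals.

```python
def build_contract_queries(contract_names, suffix="strømavtale"):
    """Return one search query per unique contract name."""
    seen = set()
    queries = []
    for name in contract_names:
        cleaned = " ".join(name.split())  # collapse stray whitespace
        key = cleaned.casefold()
        if not cleaned or key in seen:
            continue  # skip blanks and case-insensitive duplicates
        seen.add(key)
        # Quote the name so the search engine treats it as a phrase.
        queries.append(f'"{cleaned}" {suffix}')
    return queries


# Hypothetical contract names, not real data from our library.
queries = build_contract_queries(["Spotpris  Basis", "spotpris basis", "", "Fastpris 1 år"])
print(queries)
```

Deduplicating first keeps the number of daily searches, and therefore API calls, as low as possible.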
