Greetings, fellow tech wizards and hackathon enthusiasts! ✨

At Team PowerPotters, we understand the power of automation—especially when it comes to managing hardware like the ESP32 microcontroller in our potion-making IoT system. To streamline firmware updates and ensure seamless operation, we’ve created a GitHub Actions workflow that automates the entire process of flashing firmware to the ESP32.

This submission demonstrates how the Power of the Shell has enabled us to simplify complex processes, saving time and reducing errors while showcasing our technical wizardry.

🪄 The ESP32 Firmware Deployment Workflow

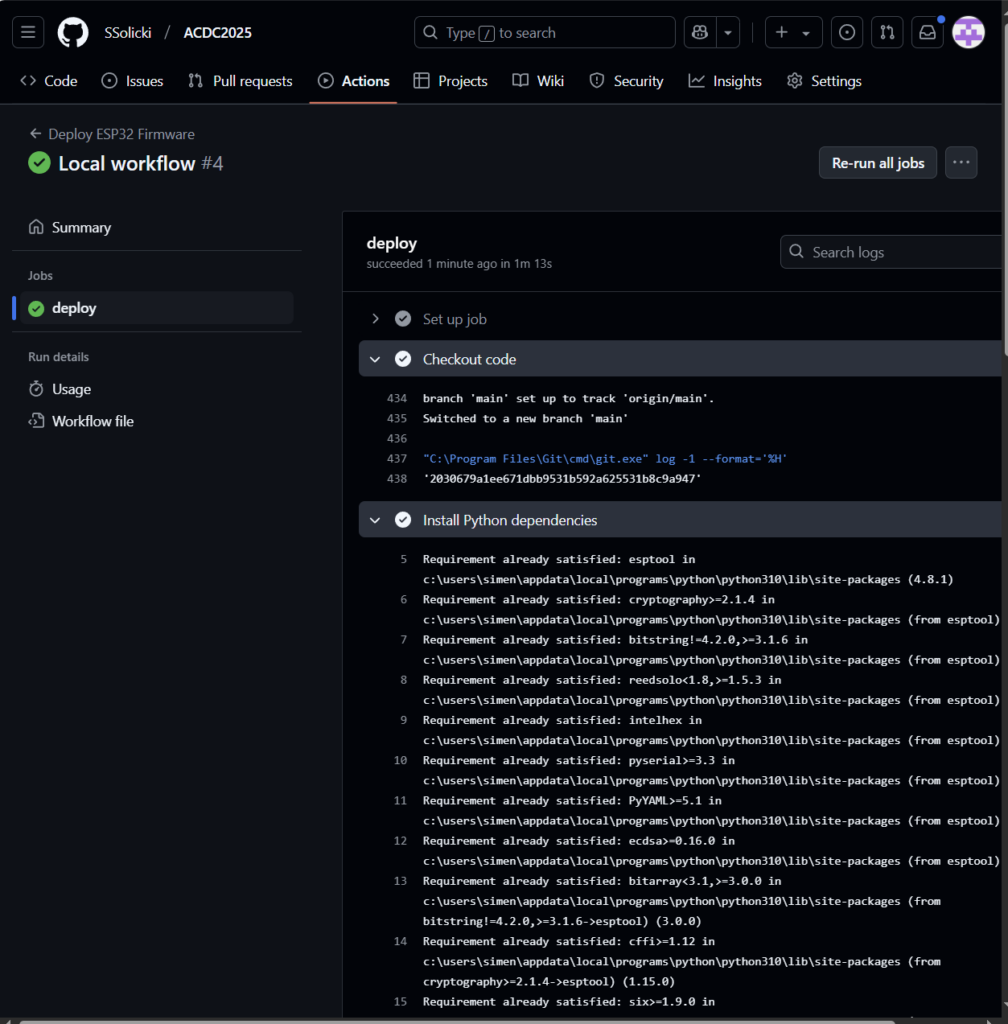

Our GitHub Actions workflow, named “Deploy ESP32 Firmware,” automates the process of flashing firmware to an ESP32 microcontroller whenever code changes are pushed to the main branch. Here’s how it works:

1. Triggering the Workflow

The workflow kicks off automatically when a commit is pushed to the main branch of our repository. This ensures that the firmware on the ESP32 is always up to date with the latest code.
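The branches filter compares the branch named in the push event against main. The Python sketch below is a simplified model of that decision, not GitHub's implementation: it only does exact matching, while real branch filters also accept glob patterns. It reads GITHUB_EVENT_NAME and GITHUB_REF, two variables that GitHub Actions sets in every job. The function name should_deploy is our own.

```python
import os


def should_deploy(event_name: str, ref: str, branch: str = "main") -> bool:
    """Simplified model of `on: push: branches: [main]` (exact match only, no globs)."""
    # Push events identify the updated branch by its full ref name, e.g. "refs/heads/main".
    return event_name == "push" and ref == f"refs/heads/{branch}"


if __name__ == "__main__":
    # Inside a workflow run, GitHub Actions exposes both values as environment variables.
    event = os.environ.get("GITHUB_EVENT_NAME", "")
    ref = os.environ.get("GITHUB_REF", "")
    print("deploy" if should_deploy(event, ref) else "skip")
```

A push to a feature branch or a tag (refs/tags/...) returns False, so only commits that land on main reach the flashing step.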

2. Code Checkout

Using the actions/checkout@v3 action, the workflow checks out the commit that triggered the run into the runner's workspace, so the files used in the next steps match what was just pushed.

3. Installing Dependencies

The workflow installs the esptool Python package, the command-line tool that talks to the ESP32's serial bootloader and writes firmware to its flash memory. Because the job runs on a self-hosted runner, this step relies on the Python installation already present on that machine.
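On a self-hosted runner, the environment is whatever is installed on that machine, so a quick pre-flight check can report a missing dependency more clearly than a failed esptool call. The Python sketch below is an optional addition, not part of our workflow: preflight is a hypothetical helper, and it uses importlib.util.find_spec to check for esptool without importing it.

```python
import importlib.util
from pathlib import Path


def preflight(firmware: str) -> list[str]:
    """Return the problems that would stop the flash step; an empty list means ready."""
    problems = []
    # esptool is installed by the previous step; find_spec locates it without importing it.
    if importlib.util.find_spec("esptool") is None:
        problems.append("esptool is not installed (python -m pip install esptool)")
    # The flash step expects an already-built binary in the workspace.
    if not Path(firmware).is_file():
        problems.append(f"firmware binary not found: {firmware}")
    return problems
```

In a workflow step, a small script could call preflight(".pio/build/esp32dev/firmware.bin"), print each problem, and exit non-zero if the list is not empty, which fails the job before esptool runs.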

4. Flashing the ESP32

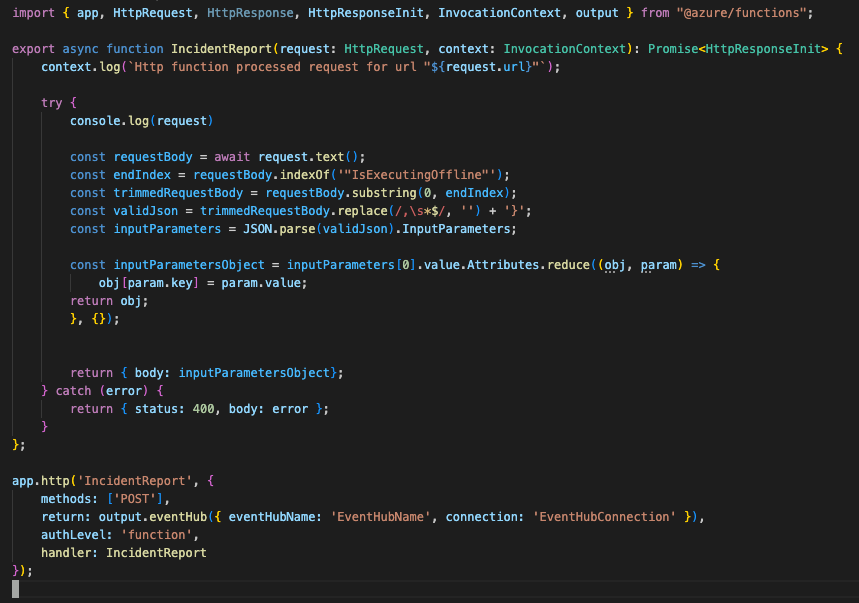

The magic happens here! Below is the complete workflow file. Its final step runs the esptool shell command that uploads the firmware to the ESP32:

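```yaml
name: Deploy ESP32 Firmware

on:
  push:
    branches:
      - main

jobs:
  deploy:
    # Self-hosted runner on the machine the ESP32 is plugged into
    runs-on: self-hosted
    steps:
      - name: Checkout code
        uses: actions/checkout@v3

      - name: Install Python dependencies
        run: python -m pip install esptool

      # Assumes firmware.bin already exists in the checked-out workspace (no build step here).
      # firmware.bin is the application image; the default ESP32 partition table places it
      # at 0x10000, while 0x1000 is the offset of the second-stage bootloader.
      - name: Flash ESP32
        run: |
          python -m esptool --chip esp32 --port COM3 --baud 115200 write_flash -z 0x10000 .pio/build/esp32dev/firmware.bin
```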

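- Chip Selection: Tells esptool to expect the ESP32 chip family.

- Port Configuration: Uses COM3, a Windows serial port name, to communicate with the ESP32 attached to the runner machine.

- Baud Rate: Sets the serial speed to 115200 baud, esptool's default and a conservative rate that most USB-serial adapters handle reliably.

- Write Options: write_flash -z compresses the image before sending it, and the hex value that follows is the flash address the binary is written to.

- Firmware Location: Specifies the path to the firmware binary to be flashed onto the device; .pio/build/esp32dev/ is where PlatformIO places build output for the esp32dev environment.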
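If we ever want to run the same flash step from a local script on the runner, the command can be assembled from the options above. The Python sketch below is a hypothetical helper (esptool_command and flash are our own names, not part of esptool's API). It uses only the options already in the workflow and calls esptool through the current interpreter, mirroring python -m esptool.

```python
import subprocess
import sys


def esptool_command(chip: str, port: str, baud: int, offset: int, firmware: str) -> list[str]:
    """Build the argument list for `python -m esptool ... write_flash -z <offset> <file>`."""
    return [
        sys.executable, "-m", "esptool",
        "--chip", chip,        # target chip family, e.g. "esp32"
        "--port", port,        # serial port the board is attached to, e.g. "COM3"
        "--baud", str(baud),   # serial speed used while flashing
        "write_flash", "-z",   # -z compresses the image before it is sent
        hex(offset),           # flash address the binary is written to
        firmware,              # path to the binary on disk
    ]


def flash(chip: str, port: str, baud: int, offset: int, firmware: str) -> int:
    """Run esptool and return its exit code (0 means the flash succeeded)."""
    cmd = esptool_command(chip, port, baud, offset, firmware)
    return subprocess.run(cmd).returncode
```

For example, esptool_command("esp32", "COM3", 115200, 0x10000, ".pio/build/esp32dev/firmware.bin") targets the address where the default ESP32 partition table places the application image. Returning a list instead of one string also sidesteps shell quoting problems with paths that contain spaces.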

The process ensures the firmware is deployed quickly, accurately, and without manual intervention.

✨ Why This Workflow is a Game-Changer

- Automation: By automating the firmware deployment, we’ve eliminated manual steps, reducing the risk of errors and saving time for potion production magic.

- Reliability: The workflow runs on a self-hosted runner, ensuring direct access to the ESP32 hardware and a stable deployment environment.

- Efficiency: The esptool command streamlines the flashing process, enabling us to quickly update the firmware as new features or fixes are developed.

- Scalability: This workflow can be adapted for other IoT devices or expanded to handle multiple ESP32 units, making it a versatile solution for hardware automation.
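As one way to handle several boards on the same runner, the sketch below flashes the same binary to each port in turn and records esptool's exit code per port. It rests on stated assumptions: the ESP32_PORTS environment variable (a comma-separated port list) and the flash_all and ports_from_env helpers are hypothetical and not part of our workflow. The command runner is passed in as a parameter so the logic can be exercised without hardware.

```python
import os
import subprocess
import sys
from typing import Callable, Sequence


def flash_all(
    ports: Sequence[str],
    firmware: str,
    offset: int,
    run: Callable[[list[str]], subprocess.CompletedProcess] = subprocess.run,
) -> dict[str, int]:
    """Flash `firmware` to every port sequentially; return each port's esptool exit code."""
    results = {}
    for port in ports:
        cmd = [
            sys.executable, "-m", "esptool", "--chip", "esp32",
            "--port", port, "--baud", "115200",
            "write_flash", "-z", hex(offset), firmware,
        ]
        # One board at a time keeps logs readable; a failure does not stop the others.
        results[port] = run(cmd).returncode
    return results


def ports_from_env(default: str = "COM3") -> list[str]:
    """Read the hypothetical ESP32_PORTS variable, e.g. "COM3,COM4"; fall back to COM3."""
    raw = os.environ.get("ESP32_PORTS", default)
    return [p.strip() for p in raw.split(",") if p.strip()]
```

A job could call flash_all(ports_from_env(), ".pio/build/esp32dev/firmware.bin", 0x10000) and fail if any value in the result is non-zero. Flashing sequentially is the simplest choice; since each board has its own serial port, the calls could also run in parallel, at the cost of interleaved logs.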

🧙‍♂️ Why We Deserve the Power of the Shell Badge

The Power of the Shell badge celebrates the creative use of shell scripting to automate technical processes. Here’s how our workflow meets the criteria:

- Command-Line Expertise: We leveraged the power of esptool and shell scripting to automate the firmware flashing process, showcasing our technical proficiency.

- Automation Innovation: The integration with GitHub Actions ensures that every push to the main branch triggers an immediate, accurate firmware update.

- Hardware Integration: By running on a self-hosted runner with direct hardware access, our solution bridges the gap between software and IoT hardware seamlessly.

🔮 Empowering Potion Production with Automation

From potion-making to IoT innovation, our ESP32 firmware deployment workflow ensures that our hardware is always ready for the next magical task. We humbly submit our case for the Power of the Shell badge, showcasing the efficiency and power of shell scripting in modern hackathon solutions.

Follow our magical journey as we continue to innovate at ACDC 2025: acdc.blog/category/cepheo25.

#ACDC2025 #PowerOfTheShell #ESP32 #AutomationMagic #PowerPotters