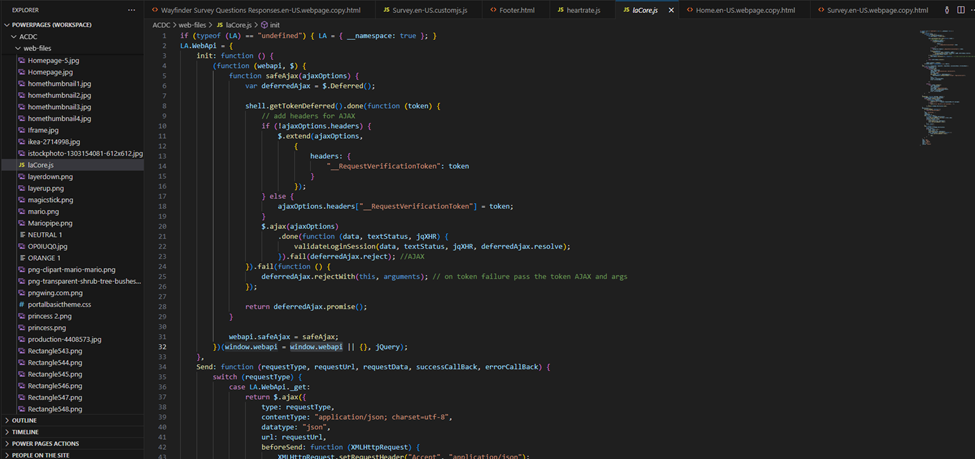

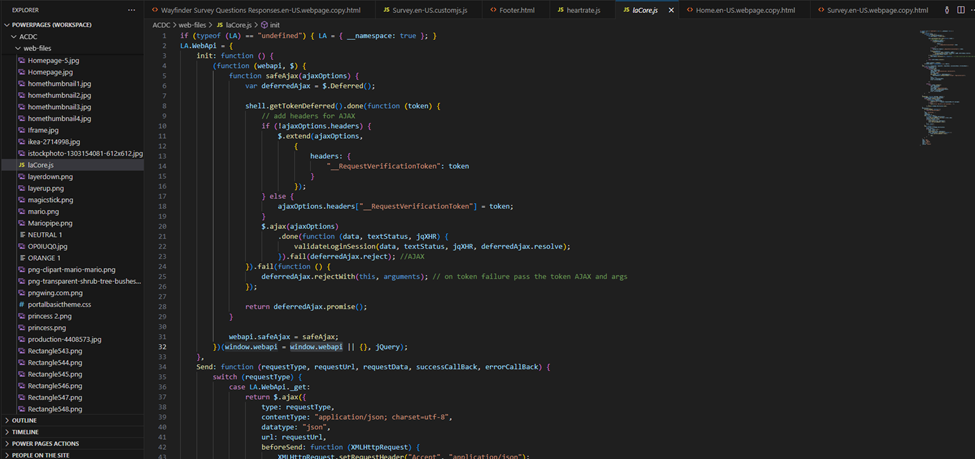

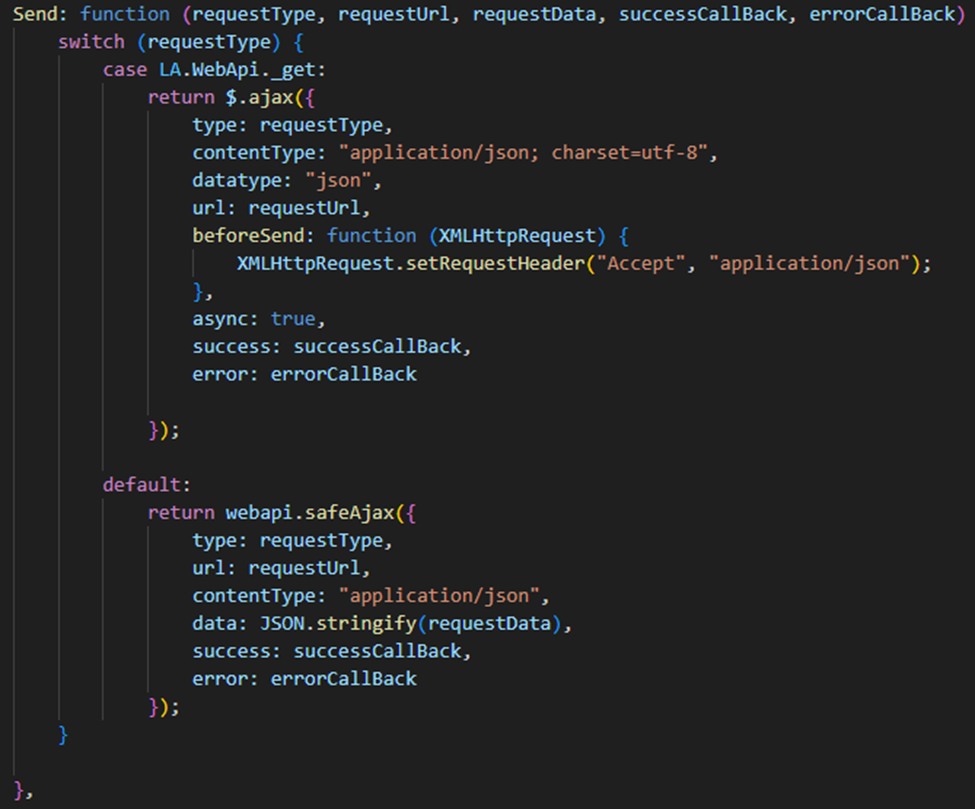

When working on Power Pages, we created a Core JavaScript file to streamline development. This file contains reusable methods used across different pages.

For example,

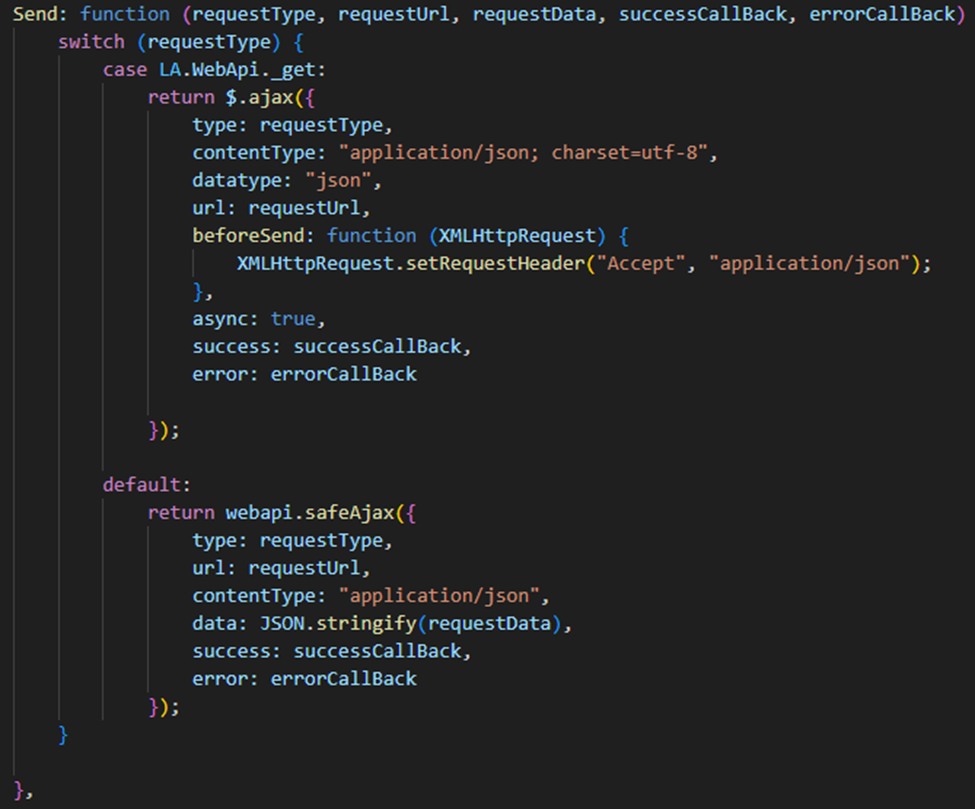

We use the `Send` function across pages to send GET requests.
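A minimal sketch of what such a shared GET helper could look like, assuming it wraps the browser's `fetch` and returns JSON. The names `send` and `buildRequestUrl` and the optional `fetchImpl` parameter are hypothetical; `fetchImpl` only exists so the helper can be exercised without a browser.

```javascript
// Hypothetical sketch of a shared GET helper for a Power Pages core script.
// `send`, `buildRequestUrl` and `fetchImpl` are illustrative names, not our actual code.

// Appends URL-encoded query parameters, skipping null/undefined values.
function buildRequestUrl(baseUrl, params = {}) {
  const query = Object.entries(params)
    .filter(([, value]) => value !== undefined && value !== null)
    .map(([key, value]) => `${encodeURIComponent(key)}=${encodeURIComponent(value)}`)
    .join("&");
  if (!query) return baseUrl;
  // Reuse an existing query string if the base URL already has one.
  const separator = baseUrl.includes("?") ? "&" : "?";
  return `${baseUrl}${separator}${query}`;
}

// Sends a GET request and resolves with the parsed JSON body.
// `fetchImpl` defaults to the global fetch and can be replaced in tests.
async function send(baseUrl, params = {}, fetchImpl = globalThis.fetch) {
  const response = await fetchImpl(buildRequestUrl(baseUrl, params), {
    method: "GET",
    headers: { Accept: "application/json" },
  });
  if (!response.ok) {
    throw new Error(`GET ${baseUrl} failed with status ${response.status}`);
  }
  return response.json();
}
```

On a page, a call could look like `const items = await send("/api/items", { page: 2 });`, where `/api/items` is a placeholder endpoint. Keeping URL building in a separate pure function makes it easy to reuse and test on its own.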


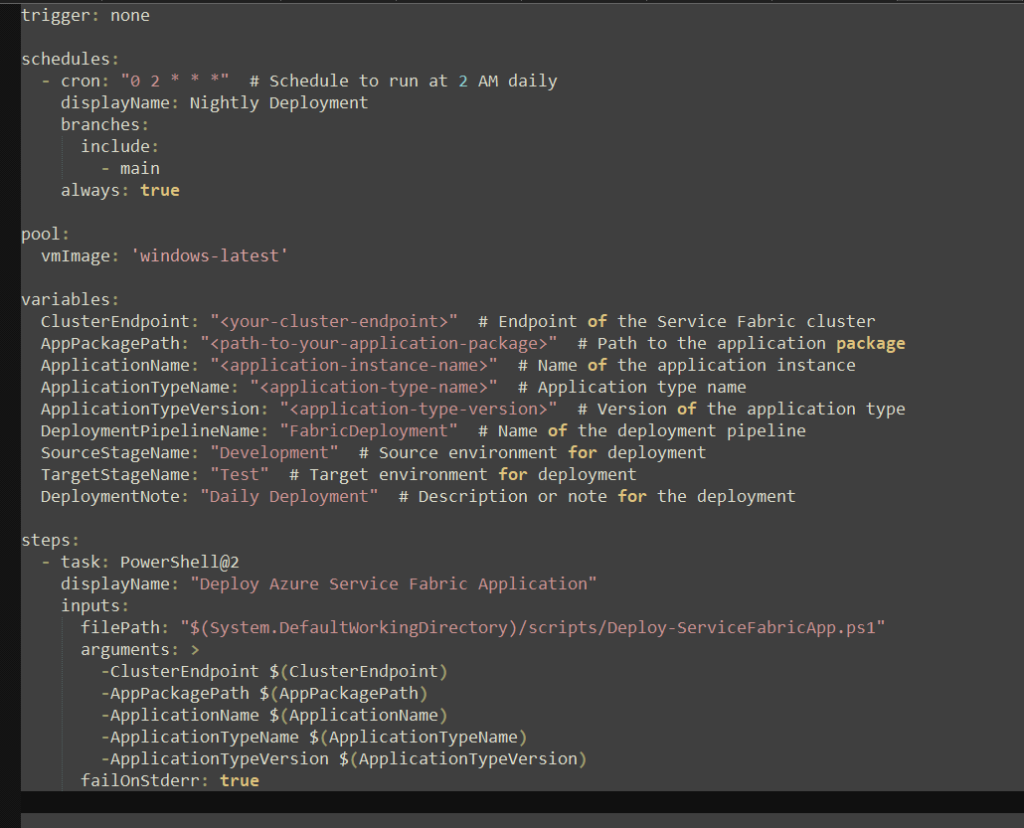

In this article, we’ll get serious and discuss how to set up a DevOps schedule that calls a PowerShell script to deploy an Azure Service Fabric application.

Previously, we created a pipeline to promote changes across Development, Test, and Production workspaces. However, a robust Application Lifecycle Management (ALM) process requires more automation. This time, we’ll use scheduled PowerShell scripts in Azure DevOps to streamline deployment tasks.


DevOps YAML Pipeline for Scheduled Deployment

```yaml
trigger: none

schedules:
  - cron: "0 2 * * *" # Schedule to run at 2 AM (UTC) daily
    displayName: Nightly Deployment
    branches:
      include:
        - main
    always: true

pool:
  vmImage: 'windows-latest'

variables:
  ClusterEndpoint: "<your-cluster-endpoint>" # Endpoint of the Service Fabric cluster
  AppPackagePath: "<path-to-your-application-package>" # Path to the application package
  ApplicationName: "<application-instance-name>" # Name of the application instance
  ApplicationTypeName: "<application-type-name>" # Application type name
  ApplicationTypeVersion: "<application-type-version>" # Version of the application type
  DeploymentPipelineName: "FabricDeployment" # Name of the deployment pipeline
  SourceStageName: "Development" # Source environment for deployment
  TargetStageName: "Test" # Target environment for deployment
  DeploymentNote: "Daily Deployment" # Description or note for the deployment

steps:
  - task: PowerShell@2
    displayName: "Deploy Azure Service Fabric Application"
    inputs:
      filePath: "$(System.DefaultWorkingDirectory)/scripts/Deploy-ServiceFabricApp.ps1"
      arguments: >
        -ClusterEndpoint $(ClusterEndpoint)
        -AppPackagePath $(AppPackagePath)
        -ApplicationName $(ApplicationName)
        -ApplicationTypeName $(ApplicationTypeName)
        -ApplicationTypeVersion $(ApplicationTypeVersion)
      failOnStderr: true
```

The YAML above calls the PowerShell script `Deploy-ServiceFabricApp.ps1`, available at the following link.
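One detail worth checking in the schedule: Azure Pipelines evaluates `cron` expressions in UTC, so `0 2 * * *` fires at 02:00 UTC rather than 2 AM local time, and `always: true` makes it run even when `main` has no new commits. The helper below, `nextDailyRunUtc`, is a hypothetical sketch (not part of Azure DevOps) that computes the next run of such a daily schedule so you can compare it with your local time.

```javascript
// Computes the next run of a daily cron schedule "M H * * *" evaluated in UTC,
// which is how Azure Pipelines interprets the `cron` value.
// `nextDailyRunUtc` is an illustrative helper, not part of Azure DevOps.
function nextDailyRunUtc(from, hour = 2, minute = 0) {
  const next = new Date(Date.UTC(
    from.getUTCFullYear(), from.getUTCMonth(), from.getUTCDate(), hour, minute, 0, 0,
  ));
  // If today's slot is now or already in the past, move to tomorrow.
  if (next.getTime() <= from.getTime()) {
    next.setUTCDate(next.getUTCDate() + 1);
  }
  return next;
}

// A check at 01:30 UTC means the nightly deployment fires at 02:00 UTC the same day.
console.log(nextDailyRunUtc(new Date("2024-03-10T01:30:00Z")).toISOString());
// prints 2024-03-10T02:00:00.000Z
```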

Here comes another update from our Fabricator...

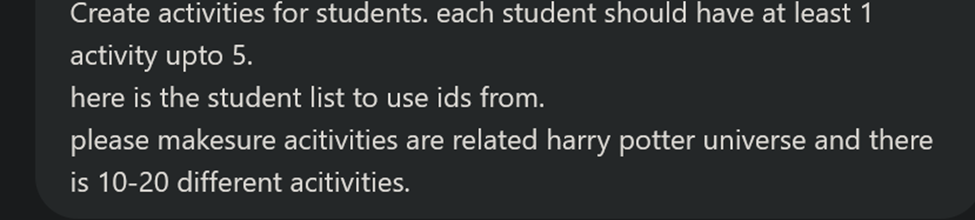

You know that moment when your application is ready and you need data for proper testing?

You could search the internet for a dataset, or you could write meaningless strings as data.

However, if your solution includes Power BI reports, you need realistic data so the reports display meaningful visuals.

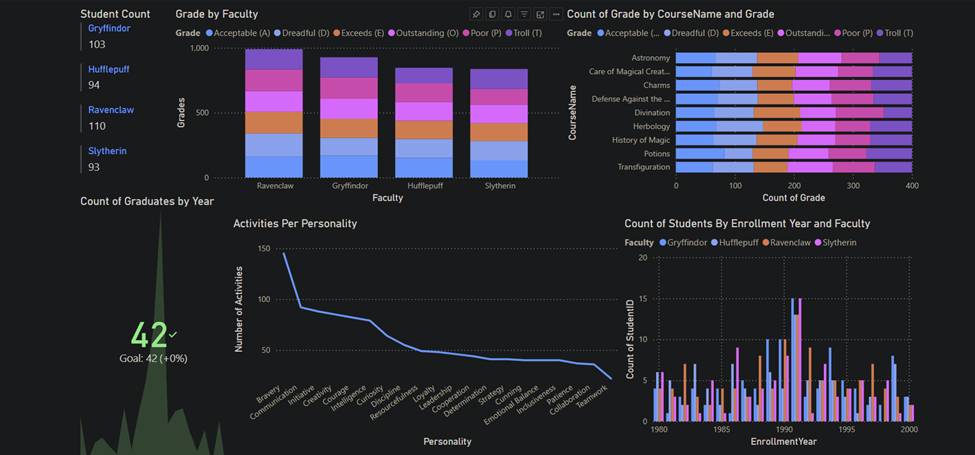

We used AI to create historical data.

First, we created a small set of real data, then asked the AI to expand it with similar values.

We worked with the following tables:

After expanding the student table, we asked the AI to generate the other tables one by one.

In each prompt, we included the previously generated data so the AI would create new rows with matching IDs and related information.
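Because each prompt has to reuse IDs from previously generated tables, it is worth checking that the AI did not invent new ones. A minimal sketch of such a check, with hypothetical table and column names (`students`, `grades`, `studentId`): it returns every child row whose foreign key has no matching parent row.

```javascript
// Hypothetical referential-integrity check for AI-generated tables.
// Table and column names are illustrative, not our actual schema.
function findOrphanRows(parentRows, childRows, parentKey, foreignKey) {
  const knownIds = new Set(parentRows.map((row) => row[parentKey]));
  return childRows.filter((row) => !knownIds.has(row[foreignKey]));
}

// Illustrative sample rows: the second grade points at a student that does not exist.
const sampleStudents = [
  { studentId: "S1", house: "Ravenclaw" },
  { studentId: "S2", house: "Gryffindor" },
];
const sampleGrades = [
  { gradeId: "G1", studentId: "S1", score: 95 },
  { gradeId: "G2", studentId: "S3", score: 60 },
];

// Only the G2 row is returned, because "S3" has no matching student.
const orphans = findOrphanRows(sampleStudents, sampleGrades, "studentId", "studentId");
console.log(orphans.map((row) => row.gradeId)); // prints [ 'G2' ]
```

Any orphan rows found this way could be fed back into the next prompt so the AI regenerates them with valid IDs.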

We also asked the AI to generate this data in a lore-friendly way. As a result, our data became very realistic:

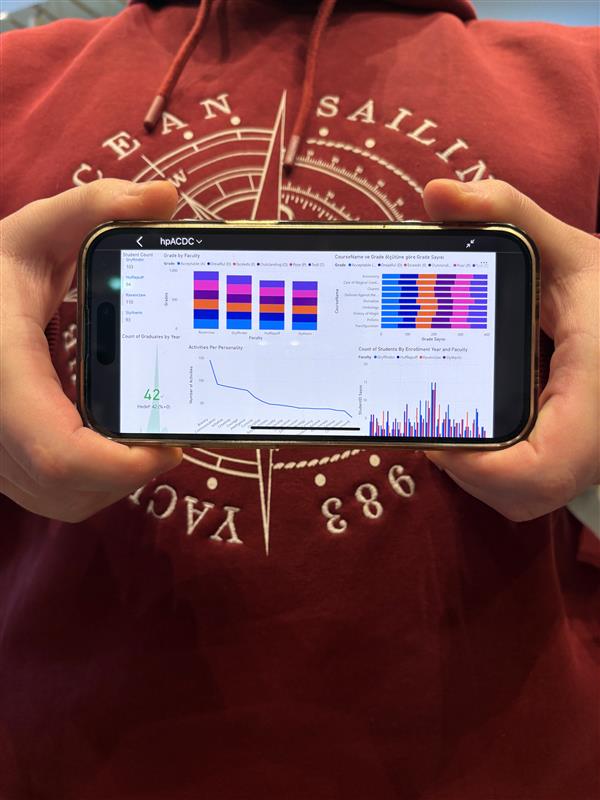

For example, Ravenclaw has the best grades overall, and brave students have the highest number of activities.

“SharePoint: The Room of Requirement for files—sometimes it’s there, sometimes it’s not, and sometimes it’s full of things you didn’t ask for”

So our Oleksii the Oracle, following his dream of walking in the footsteps of one of our honorable judges and becoming the “World Champion of SharePoint” (we admire your work, @mikaell), went to explore, learn and share.

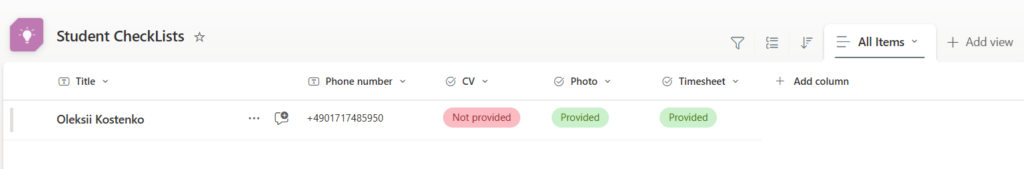

At our Wayfinder Academy, we collect and manage a lot of data. Everyone knows how hard it is to remember to give the faculty administration every important document, or to remember where it is. To fix this problem for Hogwarts students, we use SharePoint as a back office for faculty administrators and academy staff.

For this purpose, we keep a checklist that tracks whether each student has provided all the documents required by the faculty administrators.

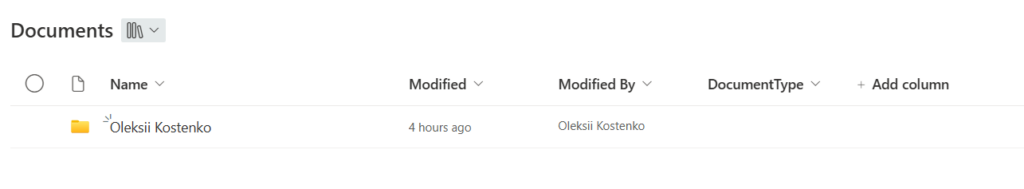

We also have a document set that stores student data. Student files are uploaded to the corresponding folders through the out-of-the-box integration between Dataverse and SharePoint.

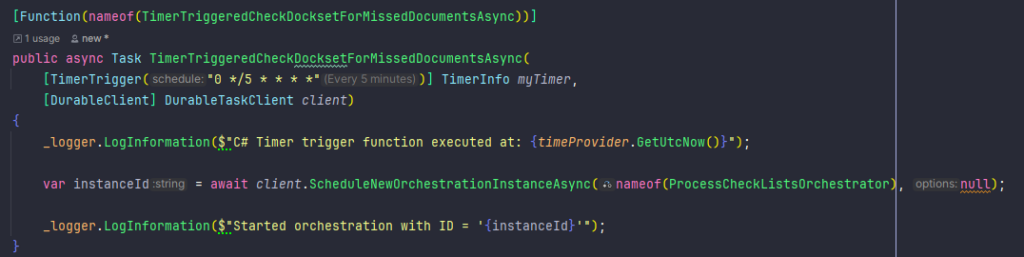

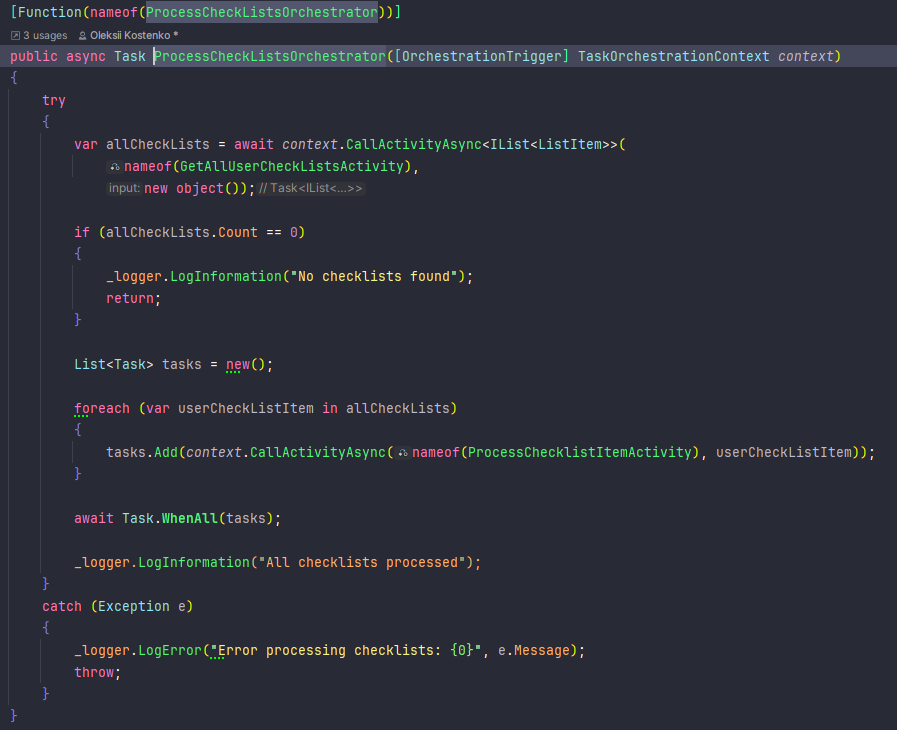

Checklist processing is handled by a timer-triggered Azure Durable Function that crawls all students in the checklist and searches the library for missing documents. If it finds any missing documents, it updates the checklist so faculty administrators can see what is missing and act accordingly (expel the student; just joking!).
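The heart of the crawl is a simple comparison per student: required documents minus uploaded documents. Our function is written in .NET; the JavaScript below only sketches that comparison, assuming each required document is matched by file name, case-insensitively (in practice you might match on a content type or metadata column instead). `findMissingDocuments` and the data shapes are hypothetical.

```javascript
// Illustrative sketch of the checklist crawl logic (our real function is .NET).
// Assumes required documents are matched by file name, case-insensitively.
function findMissingDocuments(checklist, uploadedFilesByStudent) {
  return checklist.map((entry) => {
    // Students without a folder (or without files) are treated as having uploaded nothing.
    const uploaded = new Set(
      (uploadedFilesByStudent[entry.studentId] ?? []).map((name) => name.toLowerCase()),
    );
    const missing = entry.requiredDocuments.filter((doc) => !uploaded.has(doc.toLowerCase()));
    return { studentId: entry.studentId, missing, complete: missing.length === 0 };
  });
}

// Example: S1 has uploaded only one of two required documents.
const updates = findMissingDocuments(
  [{ studentId: "S1", requiredDocuments: ["Application.pdf", "WandLicense.pdf"] }],
  { S1: ["application.pdf"] },
);
console.log(updates[0].missing); // prints [ 'WandLicense.pdf' ]
```

In a setup like ours, the resulting `missing` lists would be what gets written back to the checklist items.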

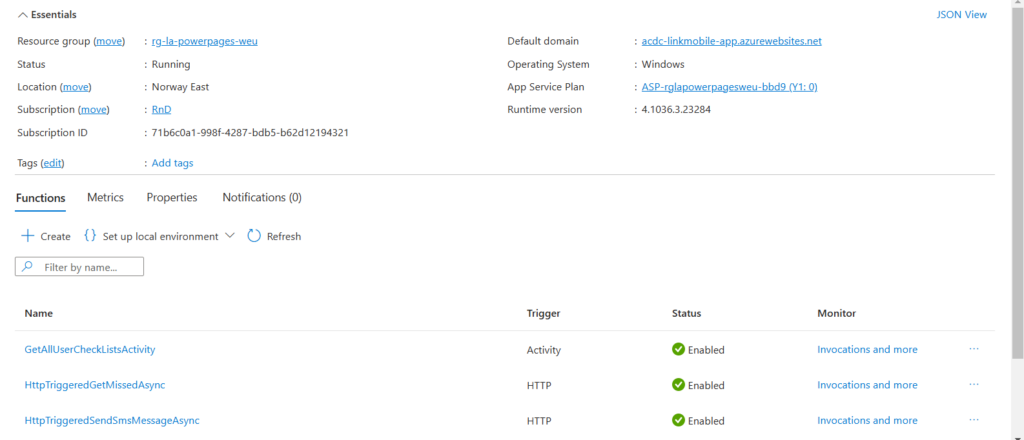

The Azure Function is written in .NET and hosted in Azure. Its configuration variables are stored in the Function App's application settings.

As a next step, we are going to send SMS notifications using the LinkMobility API integration we posted about yesterday (LinkMobility & Logiquill Love story | Arctic Cloud Developer Challenge Submissions).

We would also like to set up an Azure DevOps project for the repository and CI/CD of our Azure Functions. Stay tuned, more is coming!! Woo hooooo! This is so much fun!!!!

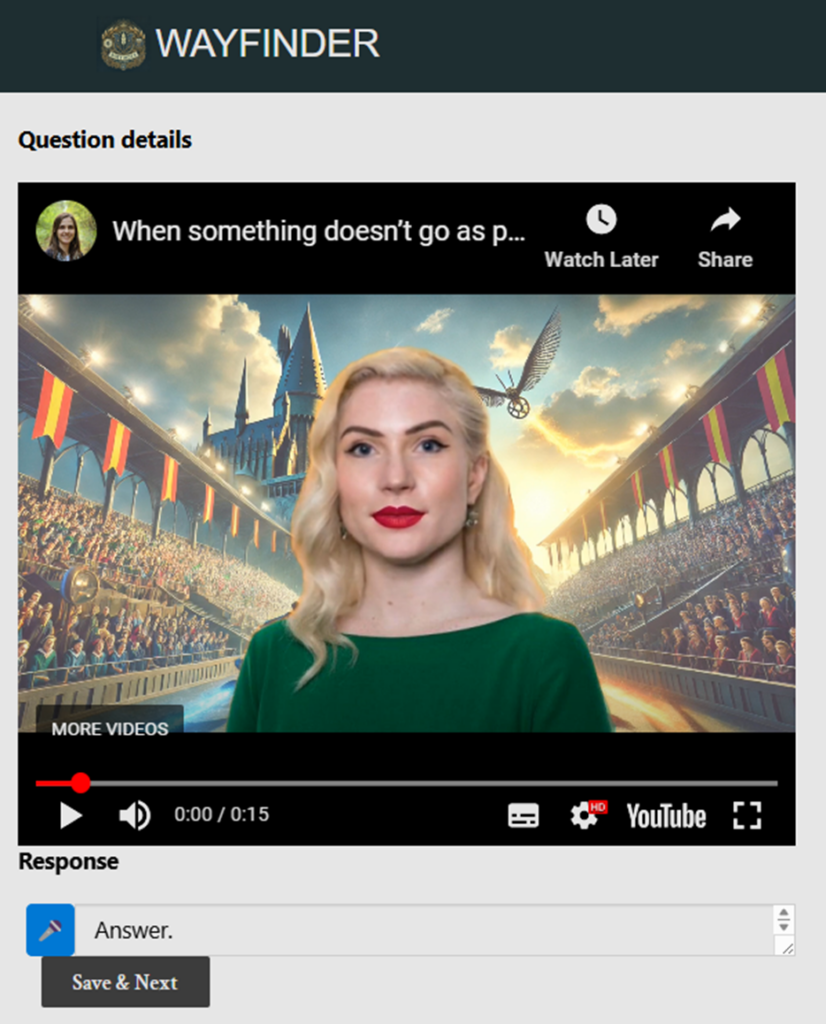

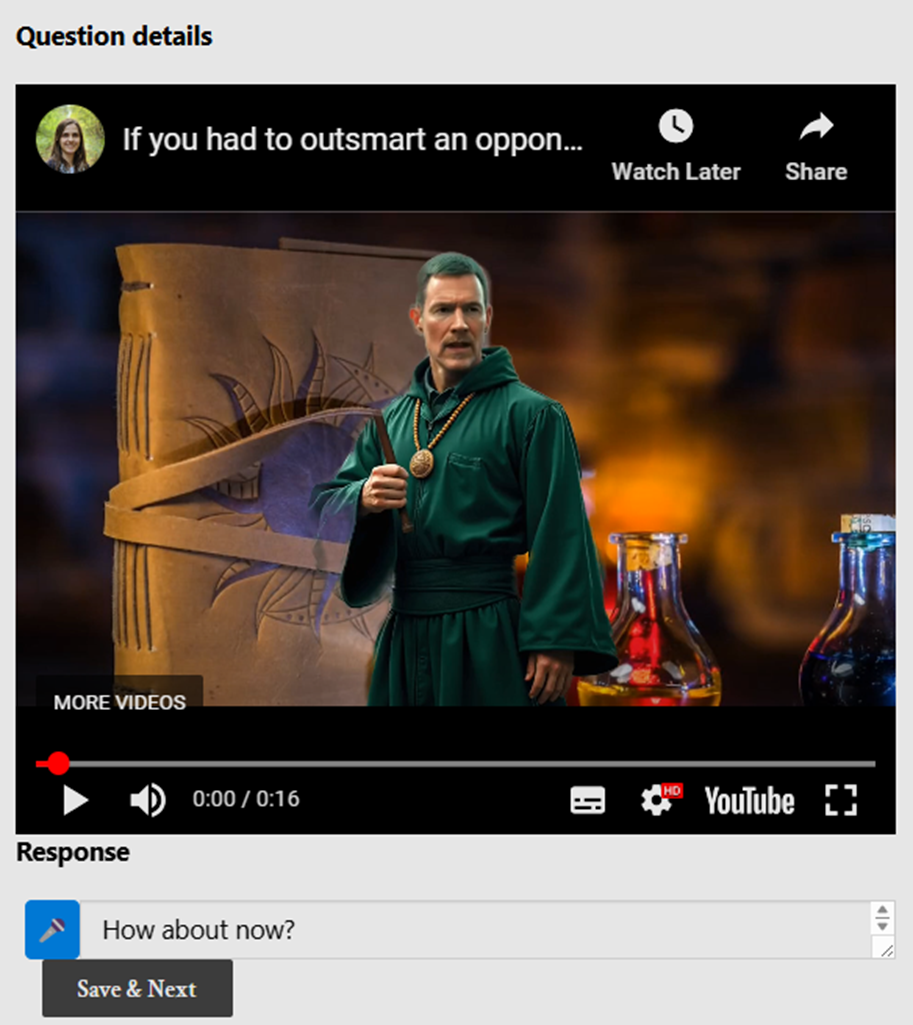

Or maybe our honorable jury feels it’s a good idea to delegate some hackathon work to their Digital Twins and free up some “Me time”? Well, we already thought about this: at our Wayfinder Academy, we really needed your help interviewing the students who send us their applications, to determine the faculties they truly belong to. So, given how busy you are, we used Elai.IO to generate videos of you running an interview.

It went so well that we went further and implemented Copilot agents (WE LOVE THEM!!!). Fed with the available data about you, they give students a real-time experience during the interview or a mentoring session, as if they were talking to you and not your digital twin.

Are you excited to see it?

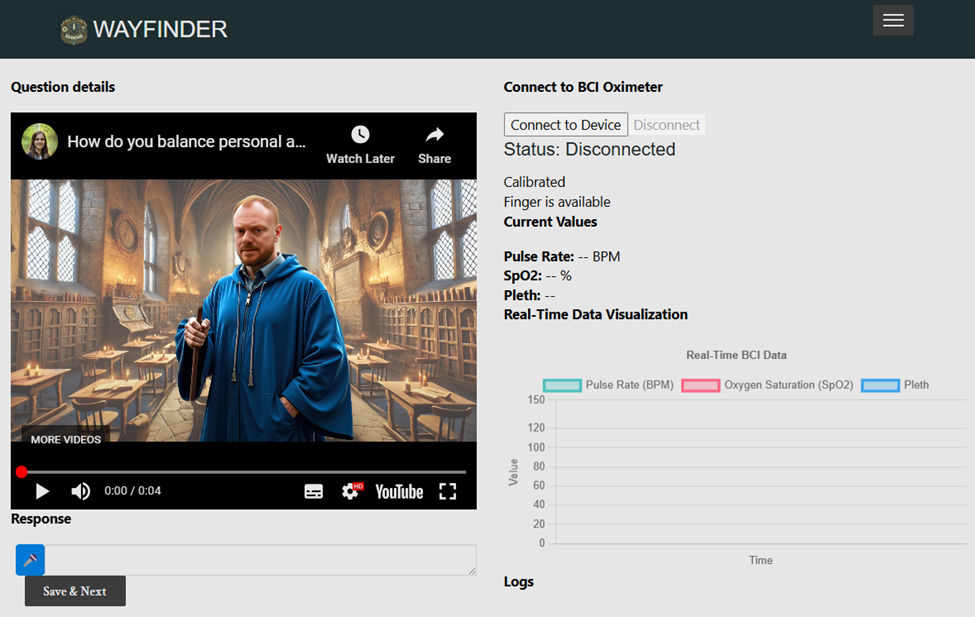

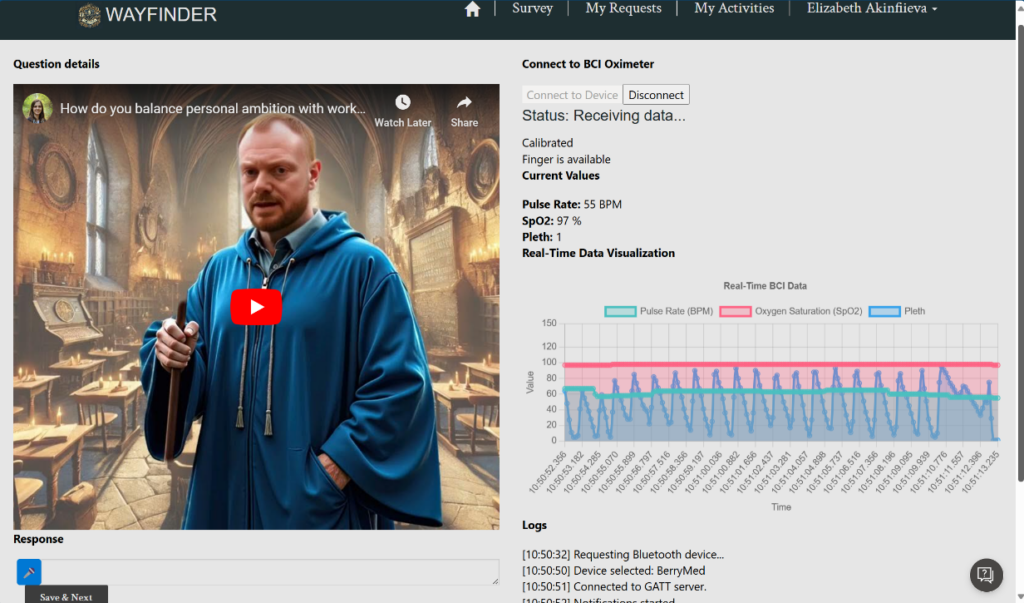

Below is the view while the interview is running, showing both the video and some other data (described in Magic Sensors: the enchanted Howlers of the tech world—they always tell you what’s wrong, loudly and repeatedly. | Arctic Cloud Developer Challenge Submissions).

And below is the student’s view. Isn’t it beautiful?

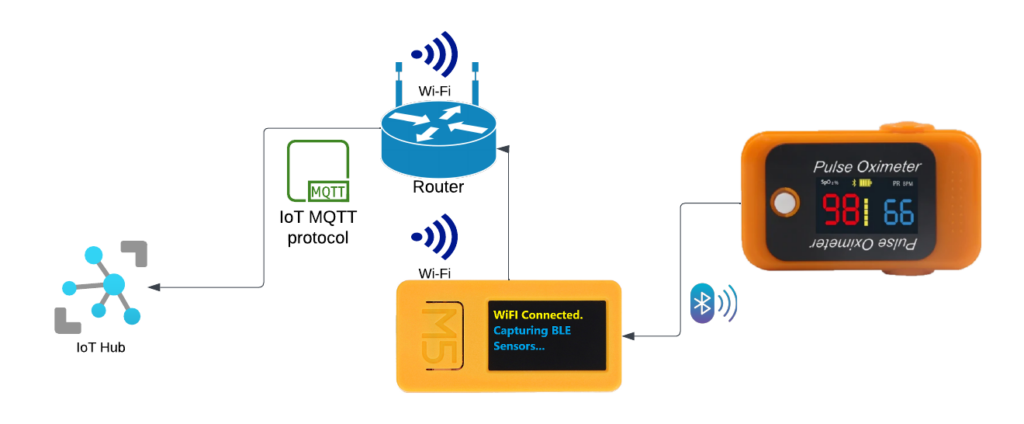

Building on IoT in the Pro Code category, and continuing our earlier update “Magic Sensors: the enchanted Howlers of the tech world—they always tell you what’s wrong, loudly and repeatedly.”, we are now connecting our Bluetooth device to the internet together with Yurii the Wise.

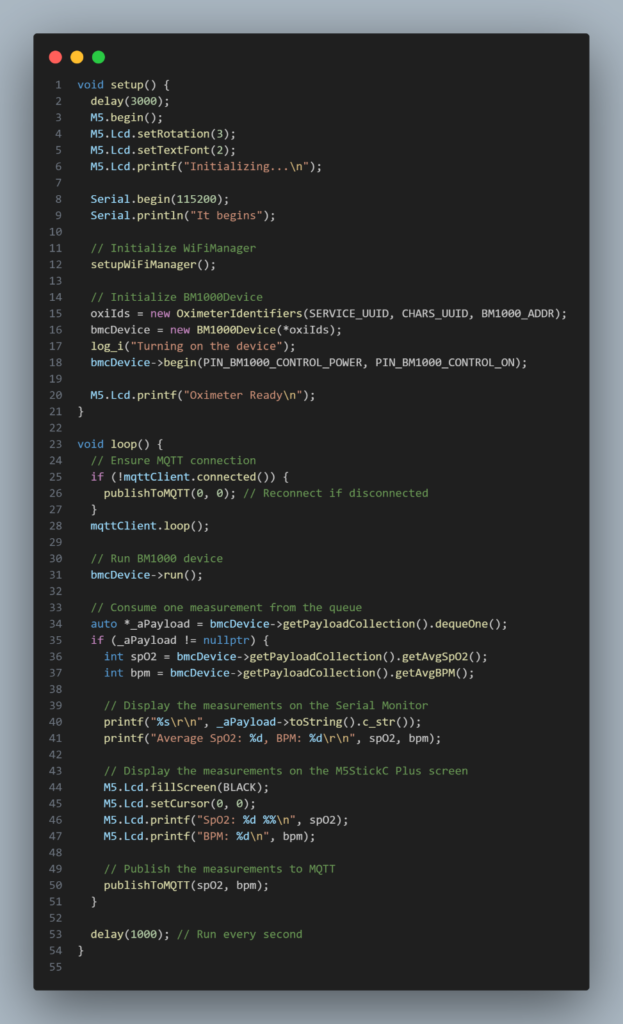

This article describes how we implemented an IoT device: a Bluetooth-to-MQTT gateway. The idea is a device with both Bluetooth and Wi-Fi connectivity, so that all data captured over BLE can be streamed to the IoT gateway.

The controller is an M5StickC PLUS2, based on an ESP32 module.

We implemented the firmware in the Arduino IDE, in C++ using the Arduino framework. The firmware can create a fallback Wi-Fi access point for entering the Wi-Fi and MQTT connection details. We also flipped the bit that turns on the Bluetooth pulse oximeter automatically, and turned on the screen to render the average SpO2 and BPM values.
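The on-screen averages can be as simple as a fixed-size rolling window per metric. Our firmware is C++, so the JavaScript below only sketches the idea; the window size and the valid ranges used to drop bogus readings (for example while no finger is detected) are assumptions, and `RollingAverage` is a hypothetical name.

```javascript
// Fixed-size rolling average, sketching the "average SpO2 and BPM" shown on screen.
// Window size and valid ranges are illustrative assumptions.
class RollingAverage {
  constructor(windowSize, min, max) {
    this.windowSize = windowSize;
    this.min = min;
    this.max = max;
    this.values = [];
  }

  // Adds a reading; non-numeric or out-of-range values are ignored.
  add(value) {
    if (!Number.isFinite(value) || value < this.min || value > this.max) return;
    this.values.push(value);
    // Drop the oldest reading once the window is full.
    if (this.values.length > this.windowSize) this.values.shift();
  }

  // Returns the rounded mean of the window, or null before any valid reading.
  average() {
    if (this.values.length === 0) return null;
    const sum = this.values.reduce((acc, v) => acc + v, 0);
    return Math.round(sum / this.values.length);
  }
}

// One averager per metric (illustrative ranges).
const spo2Average = new RollingAverage(10, 70, 100);
const bpmAverage = new RollingAverage(10, 30, 220);
```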

How does it work?

We used the following external library:

We are building the firmware following the single-responsibility principle, encapsulating the interaction with each peripheral device so that the main.ino file stays as clean as possible. Unfortunately, making it work in just a couple of days was impossible, and the result was unstable. But stay tuned: we will release the repo with working code soon.

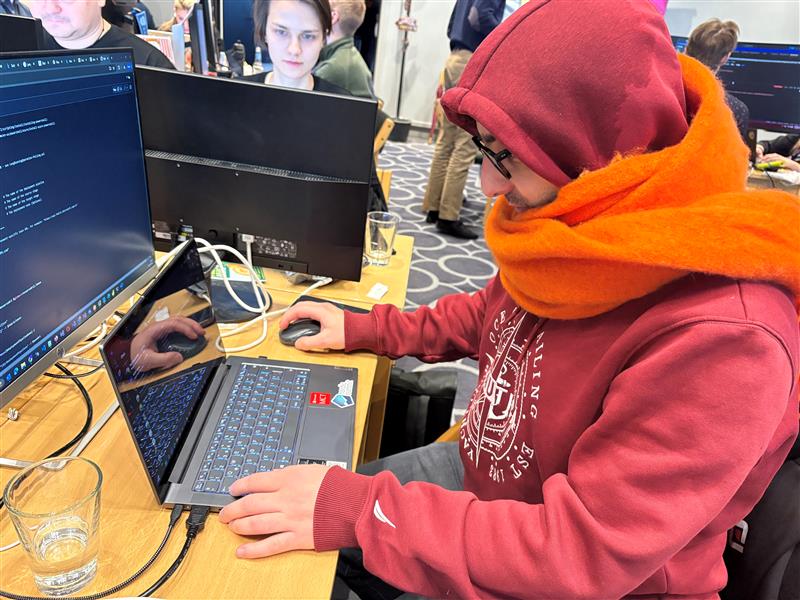

Faruk the Fabricator, who travelled all the way from sunny Istanbul, got really frozen outdoors. So he came back unusually alerted and went straight to work to warm up.

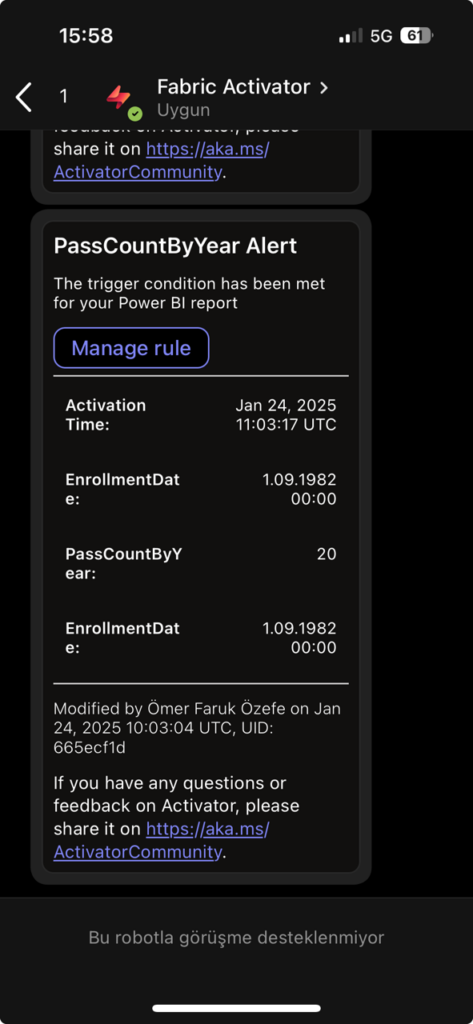

Data Activator is up. When failure is close, our Fabricator will know.

An alert has been set on the KPI column. When its value falls below a defined threshold, we send an email and alert the user.
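Data Activator is configured without code, but the rule itself boils down to a threshold check. The sketch below, with a hypothetical `detectDropsBelow` helper and an illustrative threshold, fires once when the KPI crosses below the threshold rather than on every reading that stays below it; whether our alert behaves exactly like this depends on how the Activator rule is configured.

```javascript
// Sketch of a "KPI falls below threshold" rule. `detectDropsBelow` and the
// threshold are illustrative; the real rule lives in Data Activator.
function detectDropsBelow(readings, threshold) {
  const alerts = [];
  let wasBelow = false;
  for (const { time, kpi } of readings) {
    const isBelow = kpi < threshold;
    // Alert only on the transition from "at or above" to "below".
    if (isBelow && !wasBelow) {
      alerts.push({ time, kpi, message: `KPI dropped to ${kpi} (threshold ${threshold})` });
    }
    wasBelow = isBelow;
  }
  return alerts;
}
```

Alerting on the crossing keeps the inbox quiet while the KPI stays low, at the cost of not re-notifying until it recovers and drops again.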

More will come with table-based Data Activator alerts. This is just the beginning.

Faruk the Fabricator is just warming up…

PS: We claim the PlugNPlay badge for sending notifications to Teams.

Keeping up our low-code focus, even after the outdoor activity that pushed our metabolism to its highest level! It feels GRRRRREAT!!!! Thanks for arranging this.

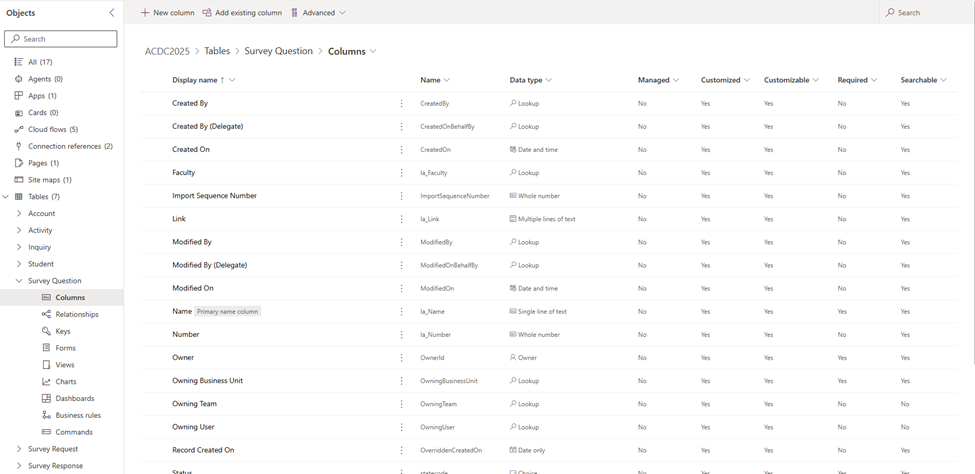

We are building a back end for the academy administrators using Dataverse and a model-driven app.

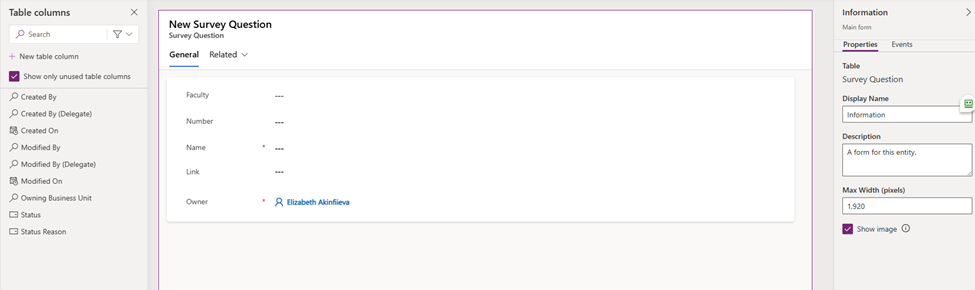

Dataverse lets us easily set up the data model for student Survey Questions:

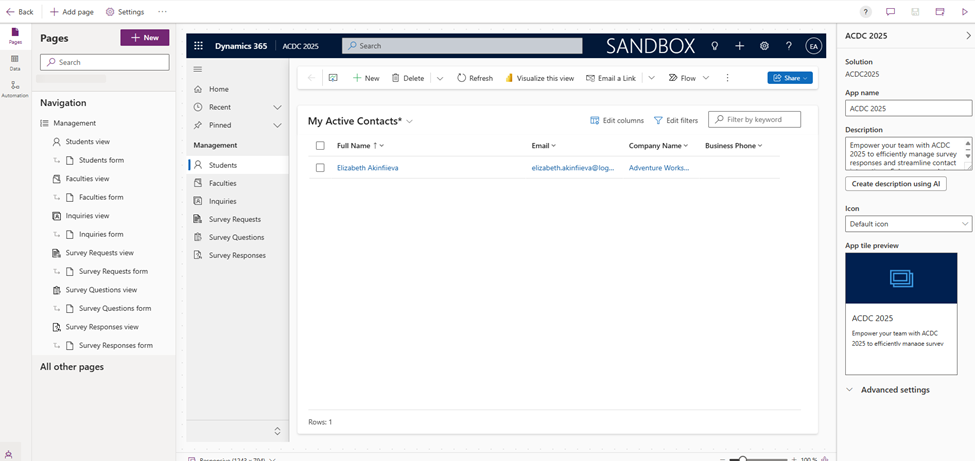

We use the power of model-driven apps to quickly build a back office and configure which forms and views our academy administrators can access:

In a previous article, we mentioned that our Logiquill platform aims to help students unleash their potential using insights from their background, current aspirations and vision of the future. This type of data lets us build preliminary suggestions, but how can we make them more precise?

For our creative minds, the first and obvious idea was to use wearable devices like smart rings and smartwatches to capture physical metrics during the review sessions.

Which metrics are available to fetch from the wearable devices?

To demonstrate this type of integration during the event, we used a portable pulse oximeter to capture real-time health data (come and try it out, by the way 🙂).

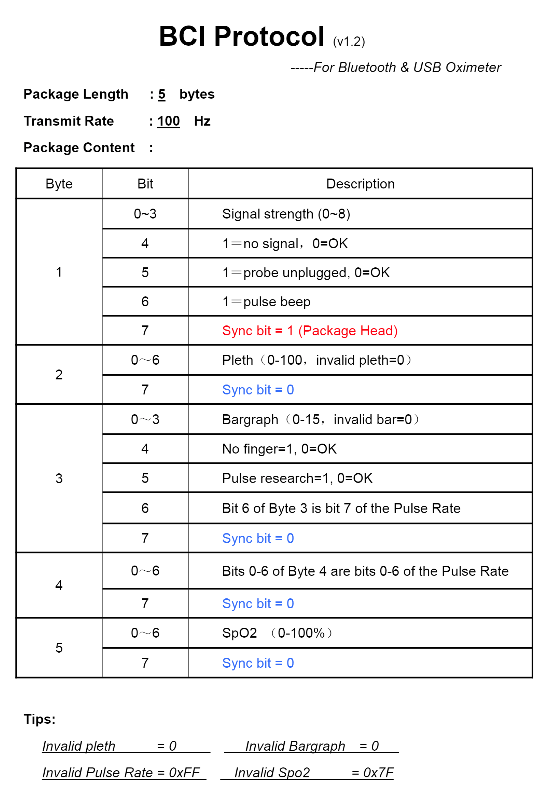

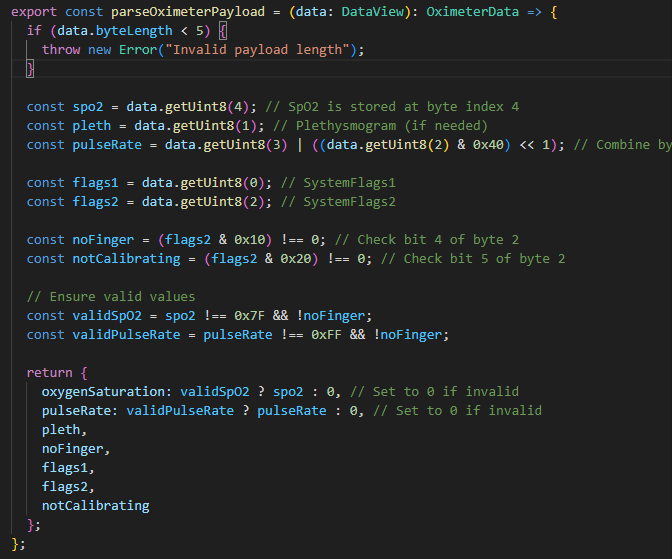

This device has a built-in Bluetooth interface that exposes all available sensors, such as isFingerExists, isCalibrated, BPM, Oxygenation, and Pleth. So we can use the Web Bluetooth API to connect it to our portal app and capture health data during the students’ review sessions. The device uses the BCI protocol:

Let us add a few details regarding Bluetooth devices:

According to the Bluetooth specification, each device must implement at least one service (Audio, UART, Media Player Control, etc.), and each service can have its own characteristics (Volume, Speed, Weight, etc.).

In our case, the pulse oximeter exposes service UUID 49535343-fe7d-4ae5-8fa9-9fafd205e455 and characteristic UUID 49535343-1e4d-4bd9-ba61-23c647249616. Here is an example of JS code that parses the Bluetooth packet:
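A minimal sketch of the browser side, assuming a Web Bluetooth capable browser (such as Chrome or Edge) and the UUIDs above. The frame layout used in `parseBciFrames` (5-byte frames, sync bit in the first byte, pulse rate split across bytes 3 and 4, SpO2 in byte 5) is our assumption based on commonly published BCI protocol notes; check it against your device's protocol sheet before relying on it. `connectOximeter` and `parseBciFrames` are illustrative names.

```javascript
// Sketch: subscribe to the pulse oximeter over Web Bluetooth and parse BCI frames.
// UUIDs come from the device; the 5-byte frame layout below is an ASSUMPTION.
const SERVICE_UUID = "49535343-fe7d-4ae5-8fa9-9fafd205e455";
const CHARACTERISTIC_UUID = "49535343-1e4d-4bd9-ba61-23c647249616";

// Scans a notification payload for 5-byte frames: the first byte has its top
// (sync) bit set, the next four bytes have it cleared.
function parseBciFrames(bytes) {
  const frames = [];
  for (let i = 0; i + 5 <= bytes.length; i++) {
    if ((bytes[i] & 0x80) === 0) continue; // not a frame start
    if (bytes.subarray(i + 1, i + 5).some((b) => b & 0x80)) continue; // malformed frame
    const [b0, b1, b2, b3, b4] = bytes.subarray(i, i + 5);
    frames.push({
      signalStrength: b0 & 0x0f, // assumed: low nibble of byte 1
      pleth: b1 & 0x7f, // assumed: plethysmogram sample
      bpm: ((b2 & 0x40) << 1) | (b3 & 0x7f), // assumed: bit 6 of byte 3 is BPM bit 7
      spo2: b4 & 0x7f, // assumed: oxygen saturation in %
    });
    i += 4; // skip the rest of this frame (the loop adds 1 more)
  }
  return frames;
}

// Browser-only. If the device does not advertise the service, use
// { acceptAllDevices: true, optionalServices: [SERVICE_UUID] } instead of filters.
async function connectOximeter(onReading) {
  const device = await navigator.bluetooth.requestDevice({
    filters: [{ services: [SERVICE_UUID] }],
  });
  const server = await device.gatt.connect();
  const service = await server.getPrimaryService(SERVICE_UUID);
  const characteristic = await service.getCharacteristic(CHARACTERISTIC_UUID);
  characteristic.addEventListener("characteristicvaluechanged", (event) => {
    const view = event.target.value; // DataView with the raw notification bytes
    const bytes = new Uint8Array(view.buffer, view.byteOffset, view.byteLength);
    parseBciFrames(bytes).forEach(onReading);
  });
  await characteristic.startNotifications();
  return device;
}
```

`connectOximeter` has to be called from a user gesture such as a button click, because `requestDevice` only works in response to user activation. Keeping `parseBciFrames` free of browser APIs makes it easy to test against captured packets.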

The final result looks like this, and we are loving it! We hope you do too 🙂

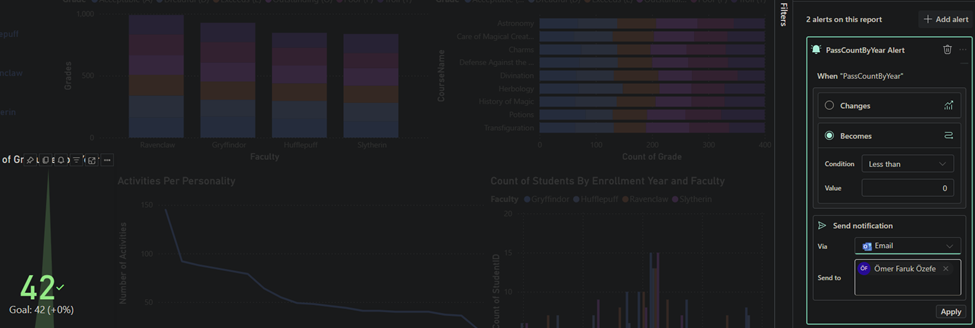

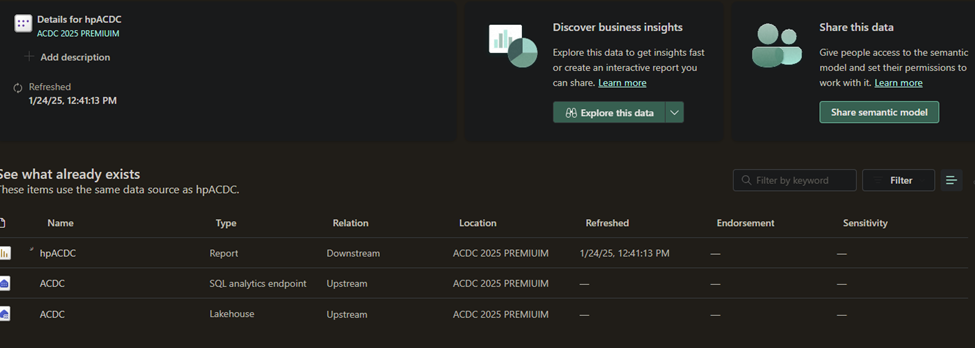

Our Faruk the Fabricator is living it now. Since yesterday, he has been challenging himself to display the results in the best possible way… but he never questioned his Loooove for Fabric. He also knows that Fabric is the Marauder’s Map of data: you always know where everything is, even when it’s trying to hide.

Here are the results in our Power BI report. We can see different metrics for the student data.

We can also add a KPI and compare ourselves with the so-called Sorting Hat.

This is our first year since Wayfinder Academy was created and we introduced our Logiquill portal, and we already show the same performance. Wait a few more years and we will leave him in the dust, as if he wasn’t dusty enough already.

Here are our components inside Fabric:

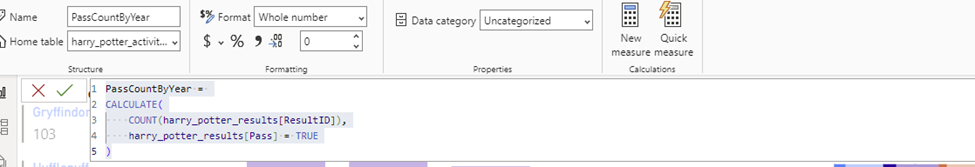

Our DAX code in Power BI:

Here is why we also claim the Chameleon badge, in addition to Dash it Out:

The solution is responsive and adapts to all devices and screen sizes.