🎯 Goal: To equip Hogwarts to seize opportunities in the future.

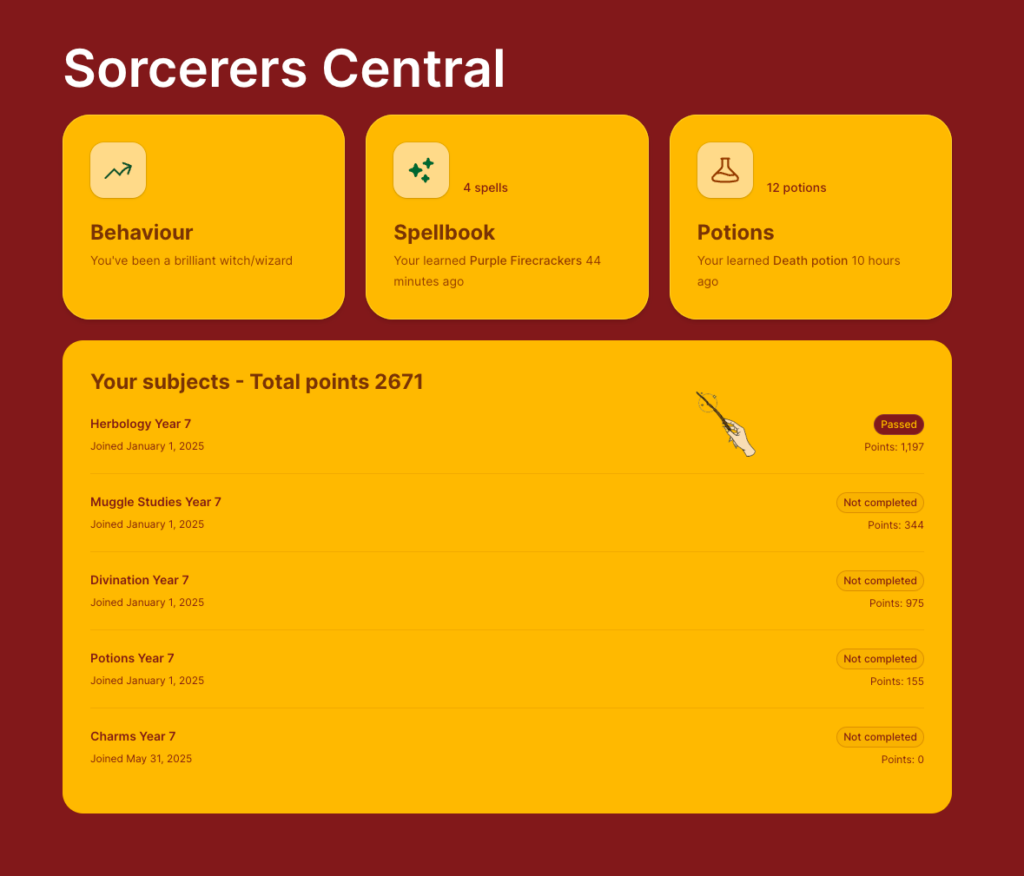

🛠️ Method: Use the full range of Microsoft technology, from low-code apps and automations to pro-code portals and AI models.

💡 Solution:

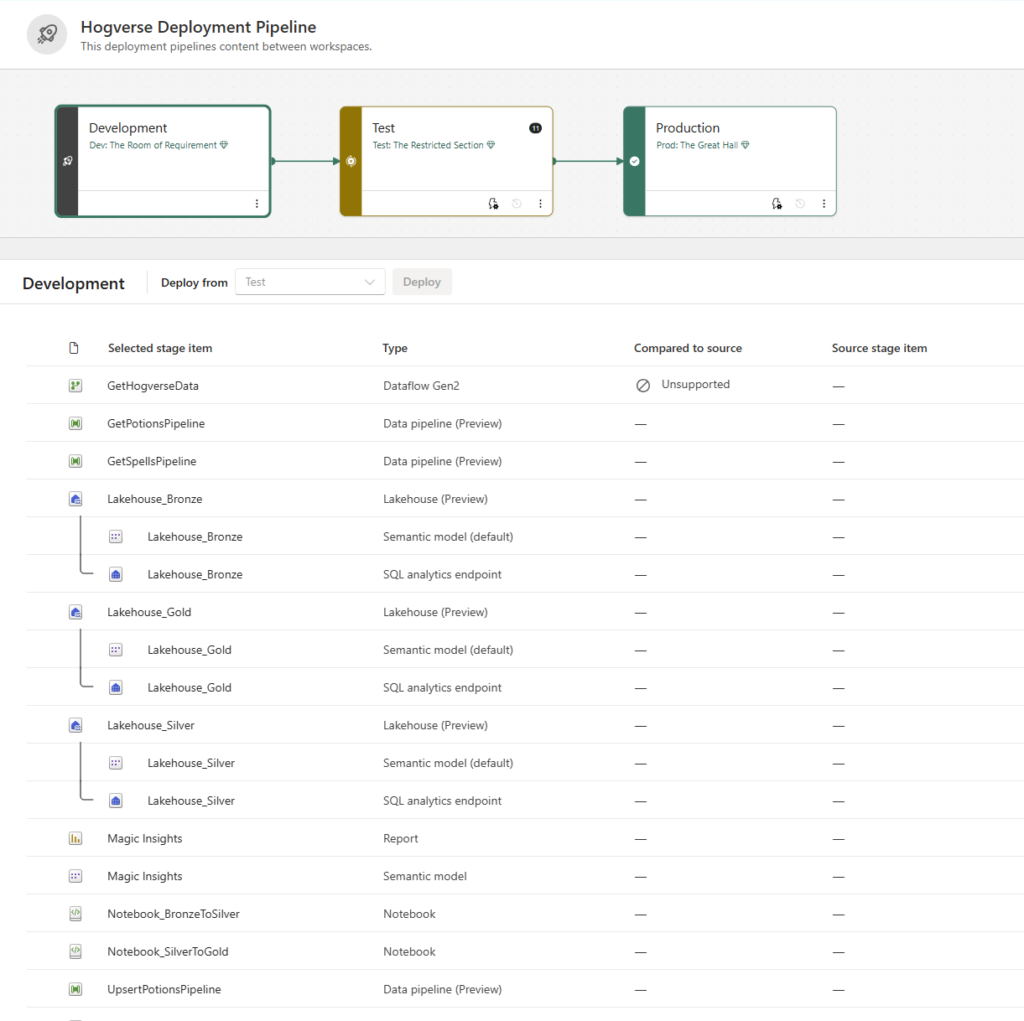

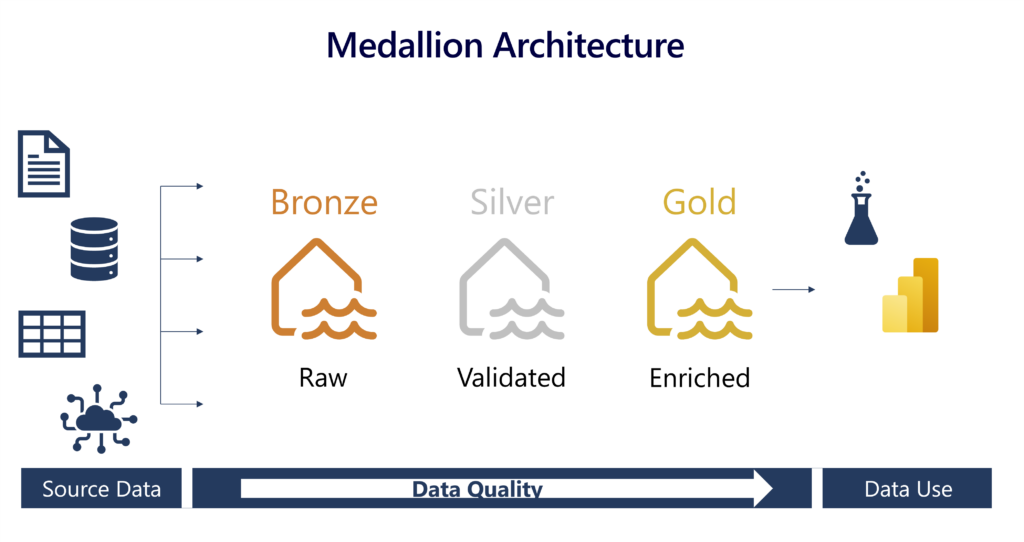

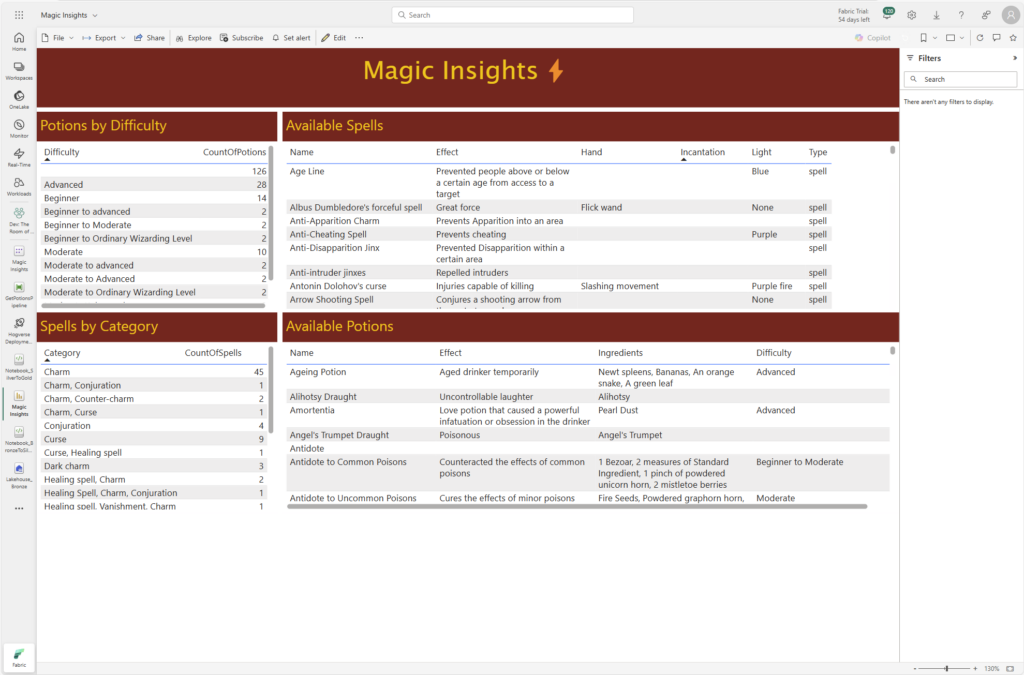

Fabric Fera Verto

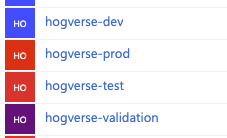

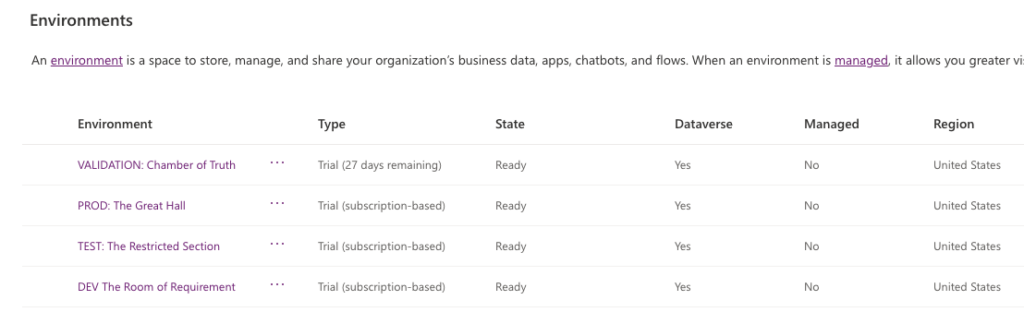

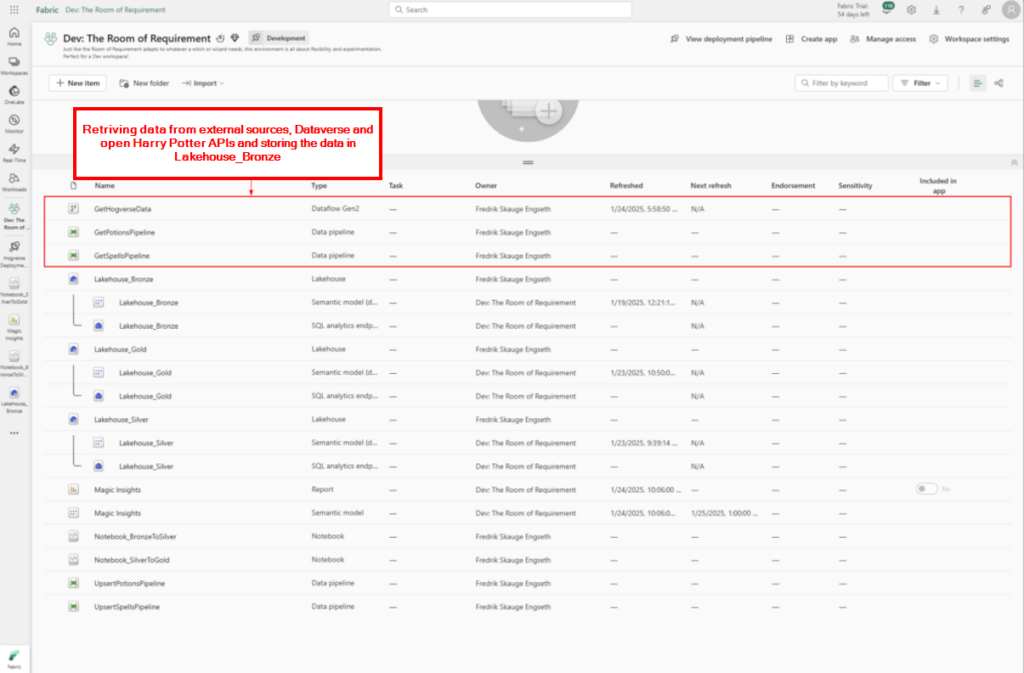

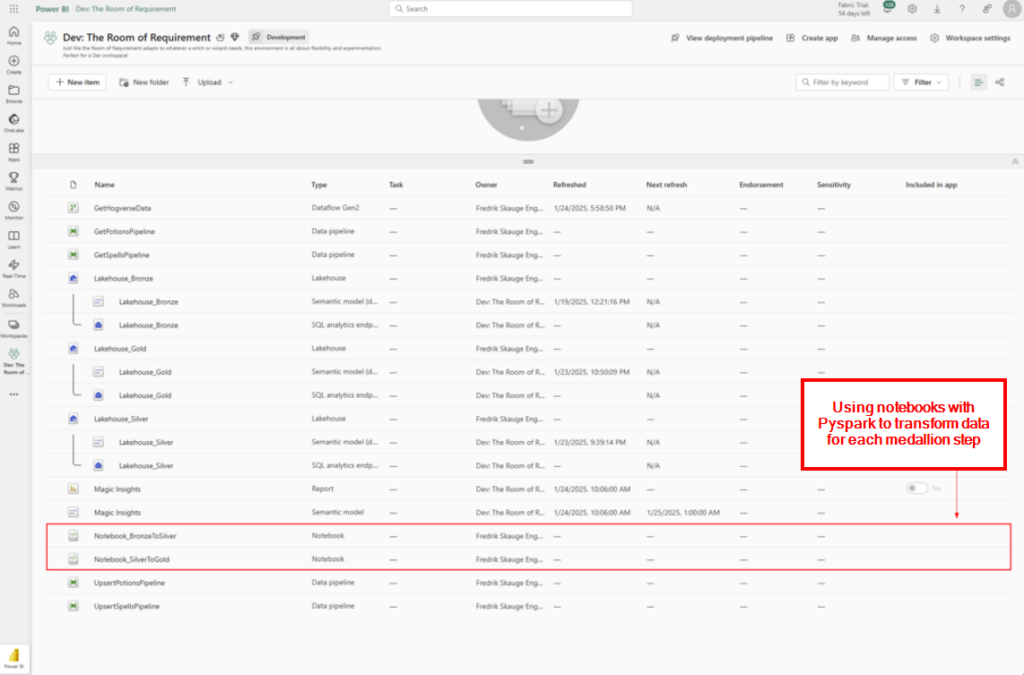

Workspace setup: Dev (Room of Requirement), Test (Restricted Section), and Prod (Great Hall). We used deployment pipelines to promote content between the workspaces.

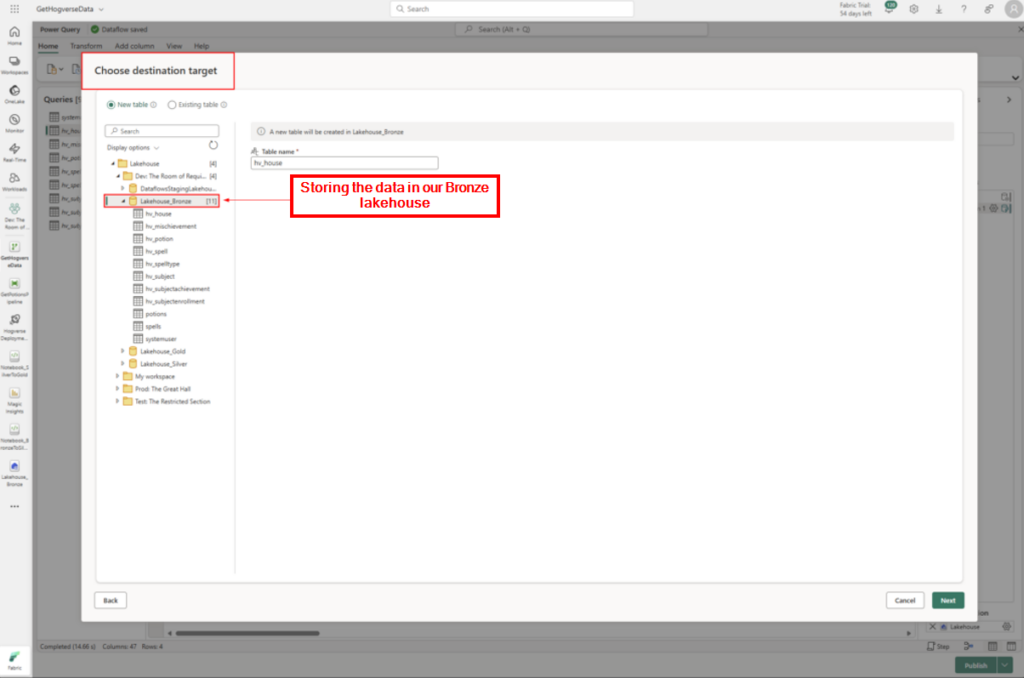

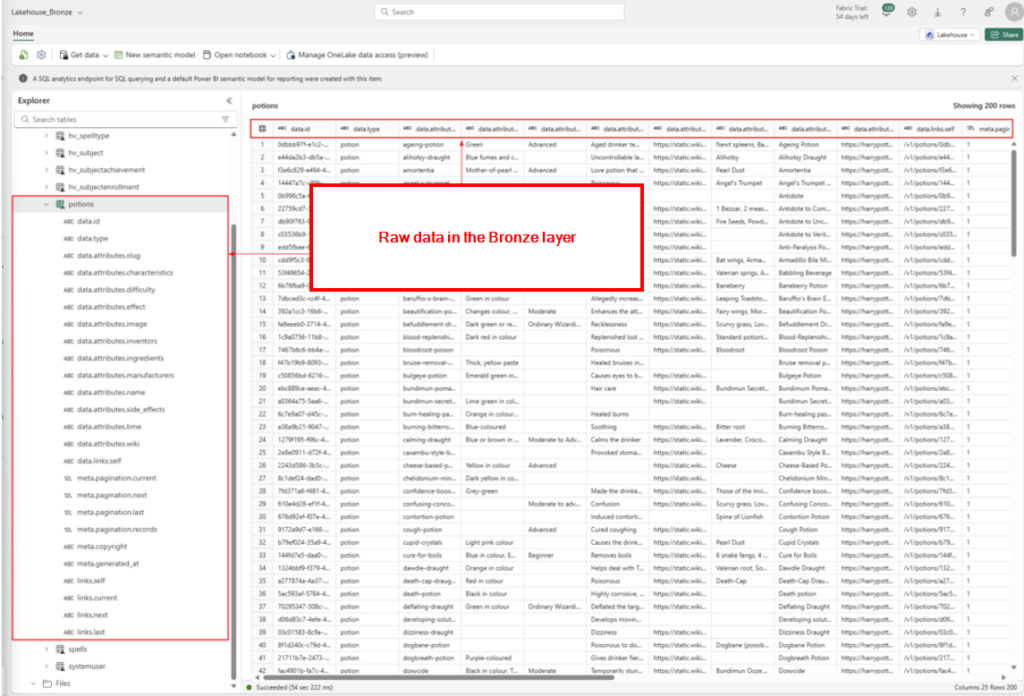

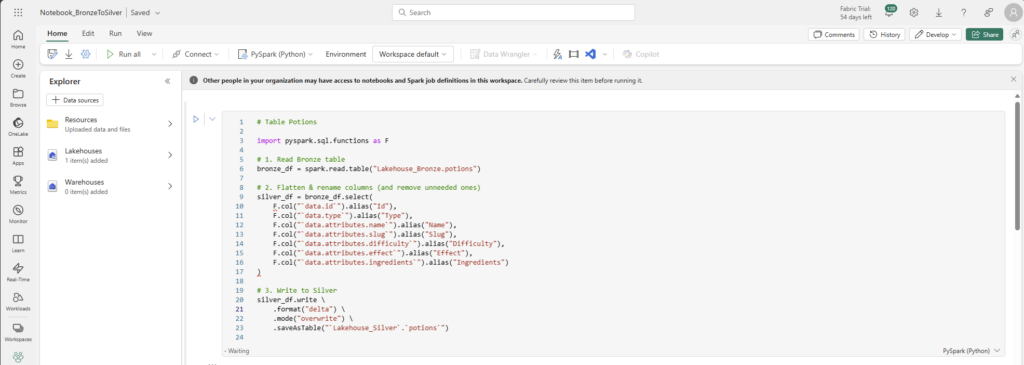

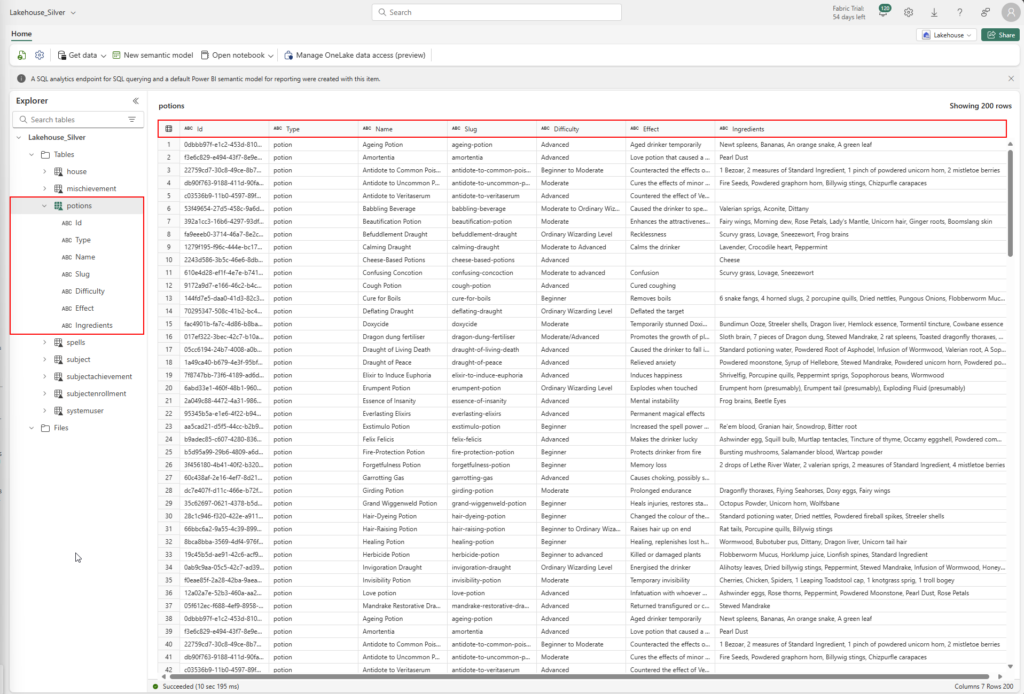

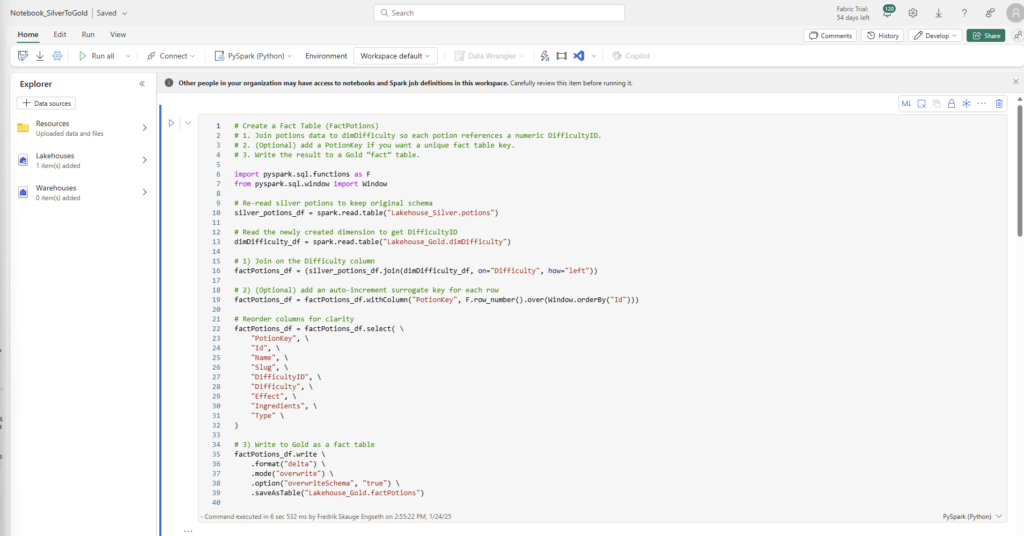

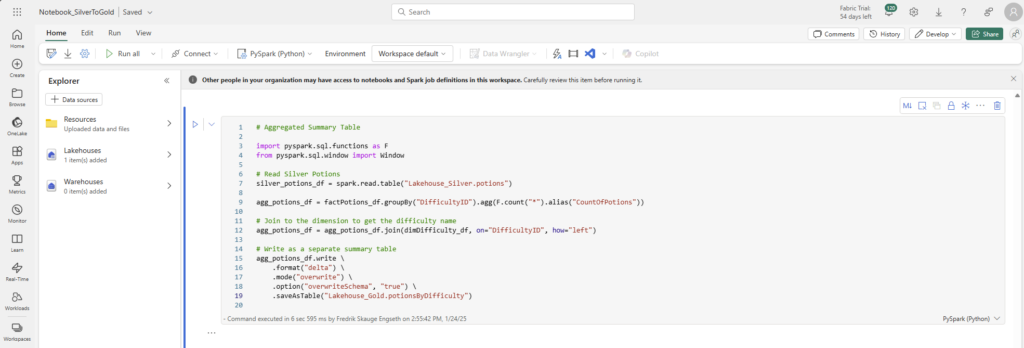

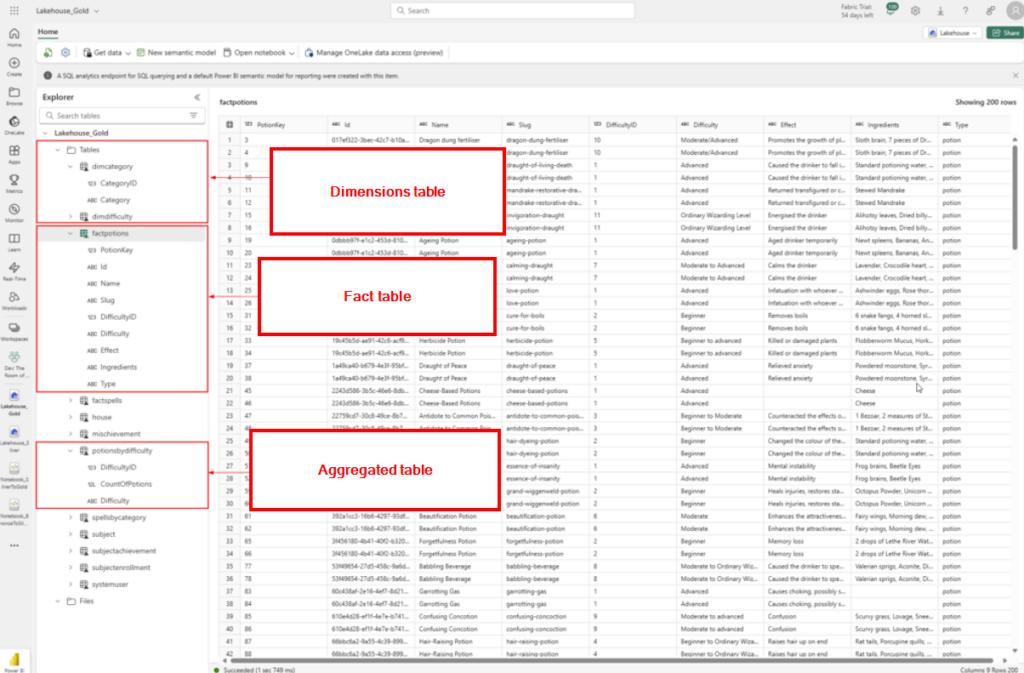

Medallion Architecture Implementation with Bronze, Silver and Gold data.
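To make the three layers concrete, here is a minimal sketch of the idea, not the team's actual Fabric notebooks or dataflows. The record shapes (`BronzeSpell`, `SilverSpell`) and the cleaning rules are assumptions chosen for illustration: Bronze holds raw rows, Silver holds cleaned and de-duplicated rows, and Gold holds an aggregate that is ready for reporting.

```typescript
// Hypothetical record shapes; the real Lakehouse tables are not shown in this post.
interface BronzeSpell {
  name: string | null;
  category: string | null;
}

interface SilverSpell {
  name: string;
  category: string;
}

// Bronze -> Silver: drop rows without a name, trim whitespace,
// fill missing categories, and de-duplicate by case-insensitive name.
function toSilver(rows: BronzeSpell[]): SilverSpell[] {
  const seen = new Set<string>();
  const result: SilverSpell[] = [];
  for (const row of rows) {
    const name = (row.name ?? "").trim();
    if (name === "") continue;
    const key = name.toLowerCase();
    if (seen.has(key)) continue;
    seen.add(key);
    const category = (row.category ?? "").trim() || "Unknown";
    result.push({ name, category });
  }
  return result;
}

// Silver -> Gold: aggregate the number of spells per category.
function toGold(rows: SilverSpell[]): Map<string, number> {
  const counts = new Map<string, number>();
  for (const row of rows) {
    counts.set(row.category, (counts.get(row.category) ?? 0) + 1);
  }
  return counts;
}
```

In Fabric the same steps would more typically live in a notebook or a Dataflow; the point here is only the direction of travel from raw to curated data.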

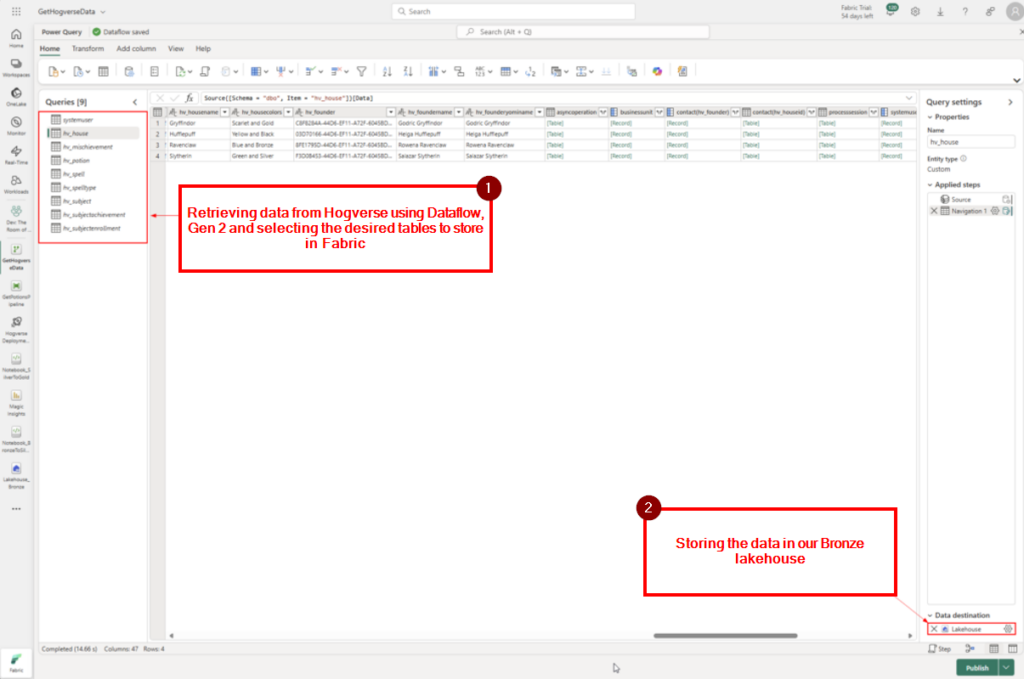

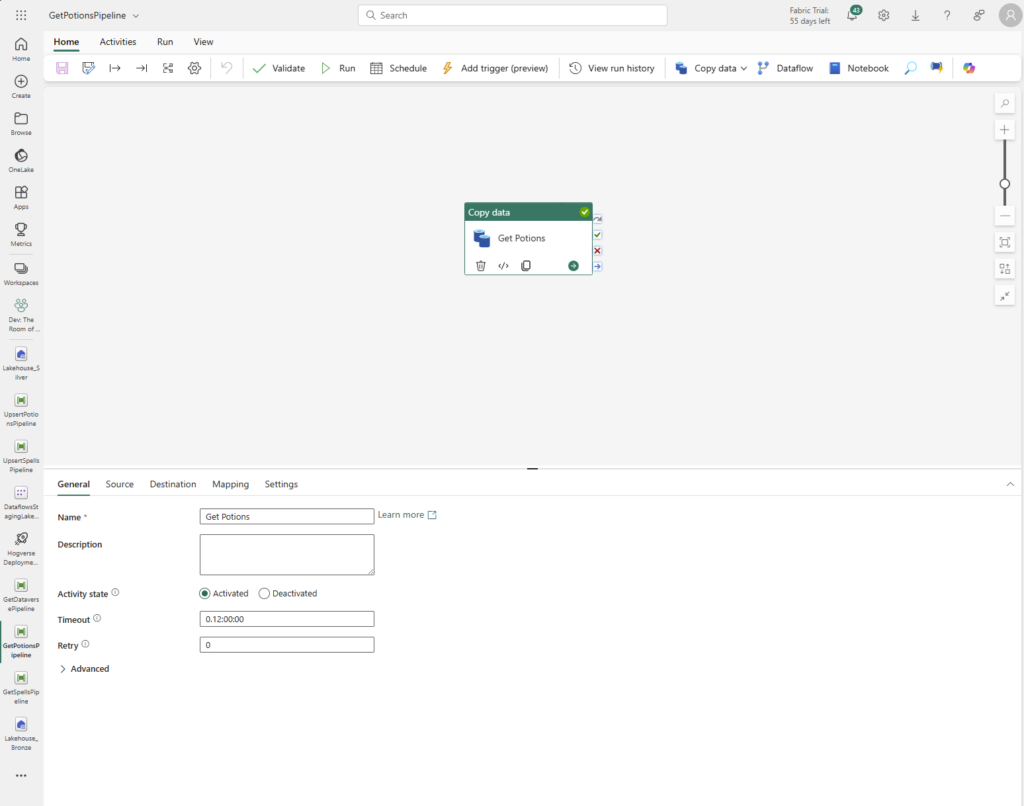

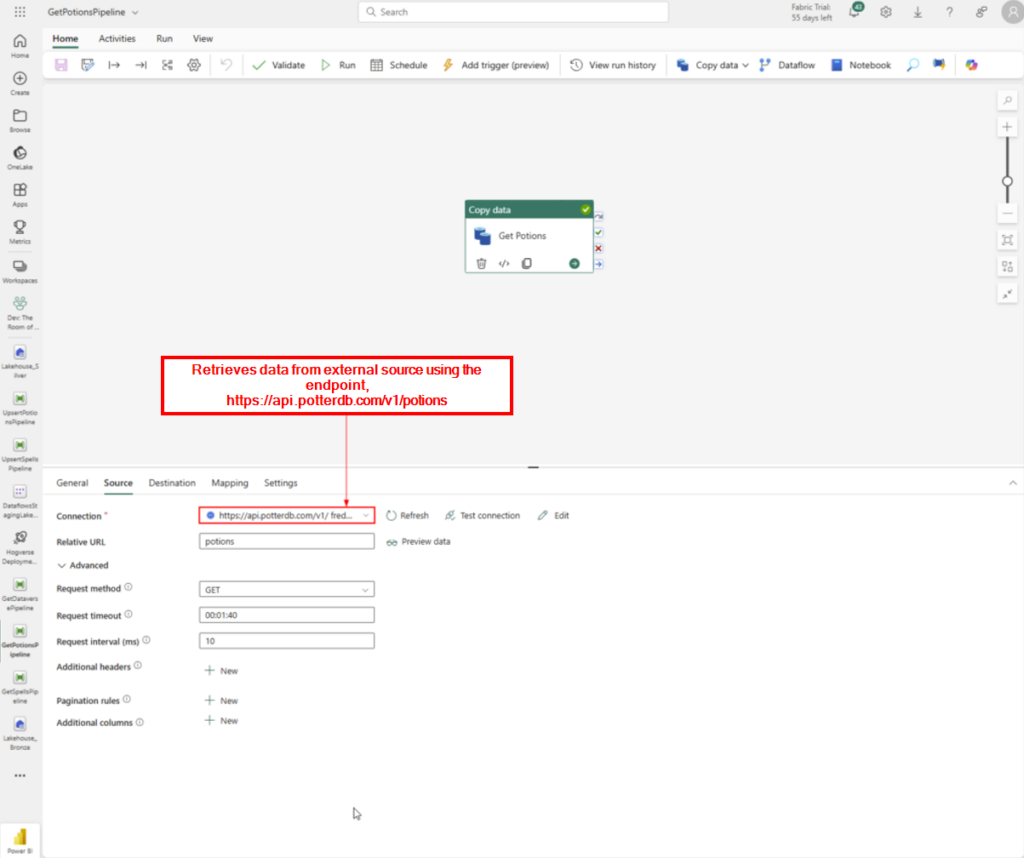

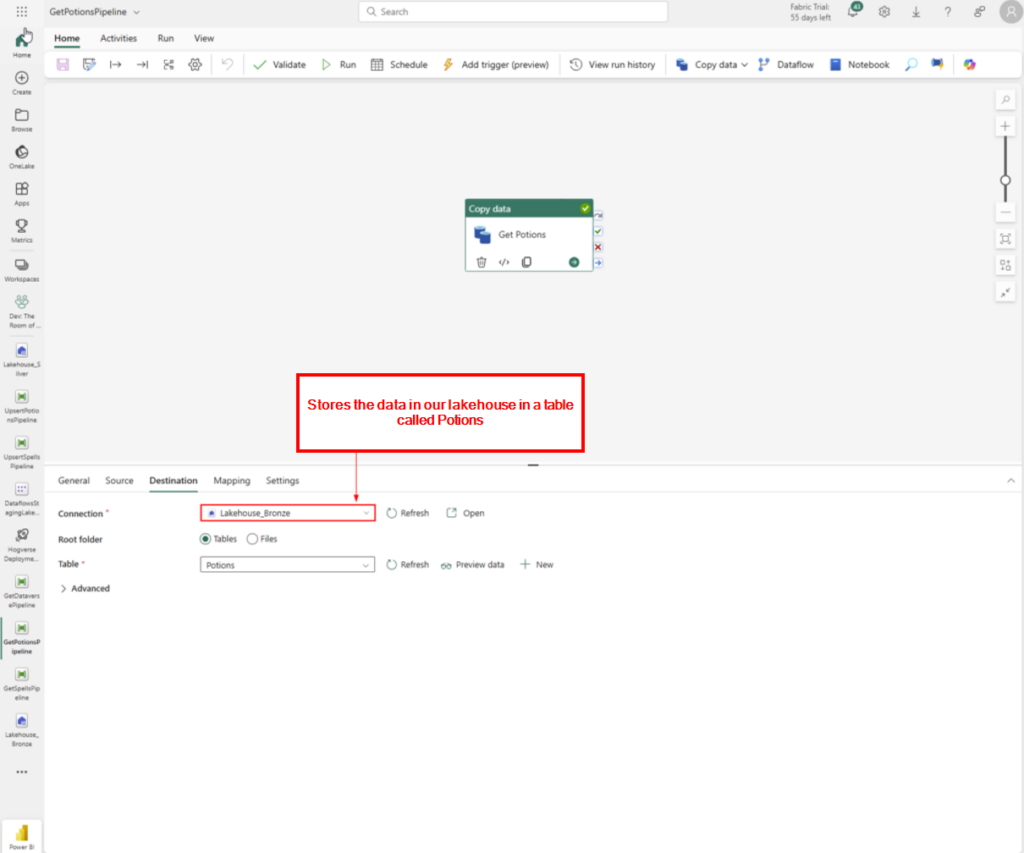

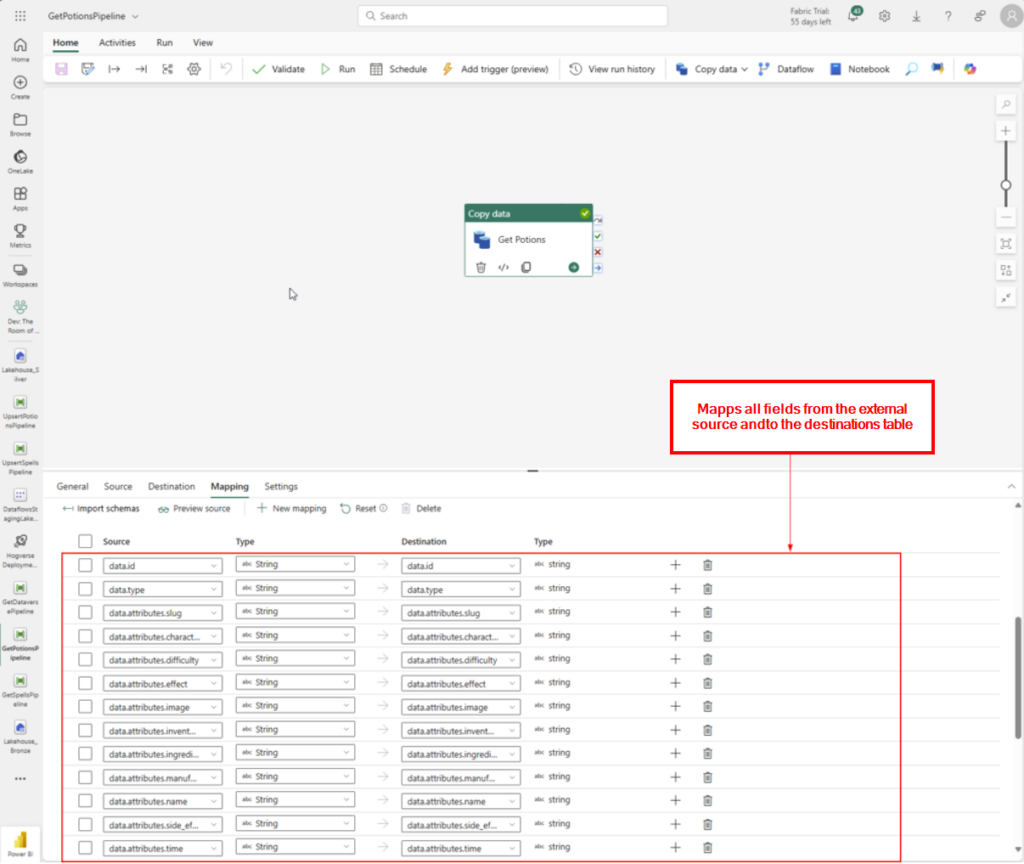

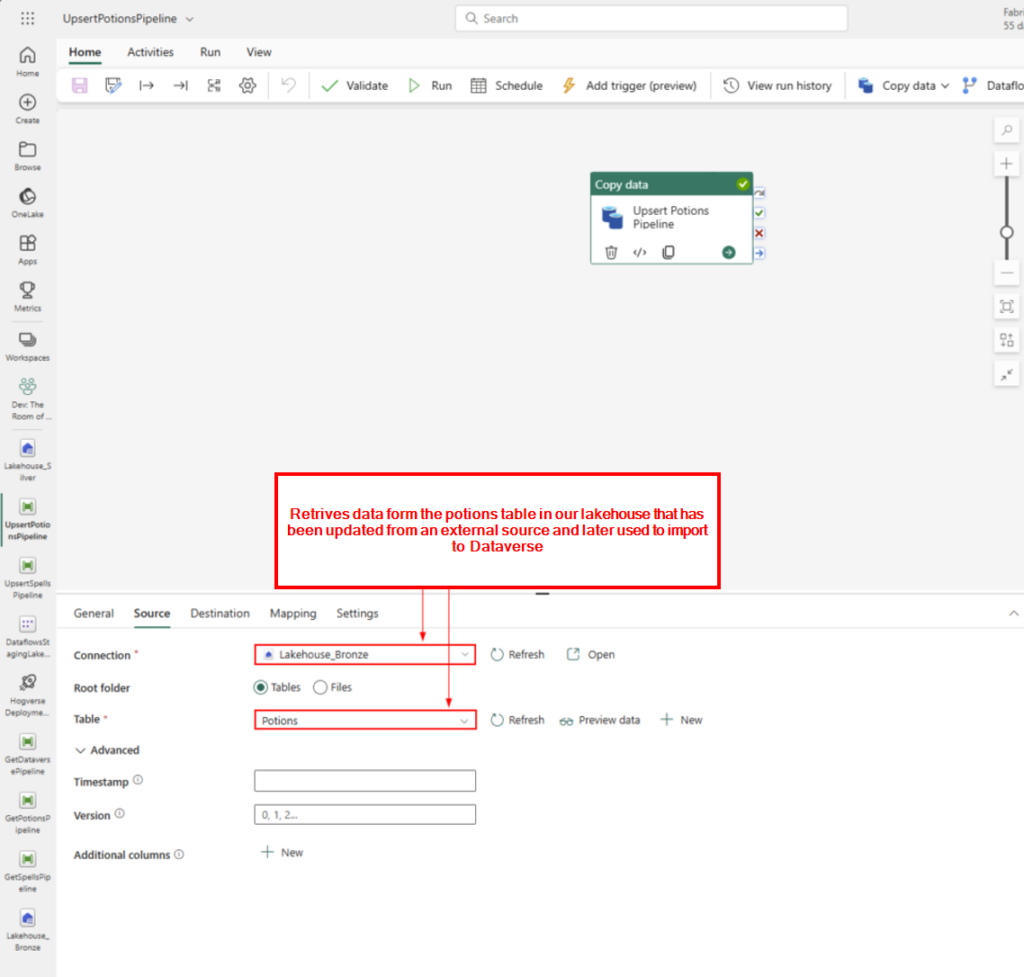

Data Ingestion: Used Dataflows Gen2 to retrieve data from another Dataverse tenant, leveraging a service principal for authentication. Utilized Data Pipelines to fetch data from Harry Potter APIs (spells, potions) and store it in the Bronze Lakehouse.
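As a rough illustration of what "store it in the Bronze Lakehouse" means in practice, the sketch below wraps each item of an API response in a raw row with ingestion metadata. The response shape (`data` items with `id`, `type`, and `attributes`) and the `BronzeRow` columns are assumptions for the example; the actual pipelines were built in Fabric Data Pipelines, not in code like this.

```typescript
// Assumed JSON:API-style response shape; check the API you ingest from.
interface ApiItem {
  id: string;
  type: string;
  attributes: Record<string, unknown>;
}

interface ApiPage {
  data: ApiItem[];
}

// Hypothetical Bronze row: the payload is kept as raw JSON, untouched.
interface BronzeRow {
  source: string;
  entityId: string;
  entityType: string;
  ingestedAt: string;
  rawJson: string;
}

// Turn one API page into Bronze rows without cleaning or reshaping the payload.
function toBronzeRows(source: string, page: ApiPage, ingestedAt: Date): BronzeRow[] {
  return page.data.map((item) => ({
    source,
    entityId: item.id,
    entityType: item.type,
    ingestedAt: ingestedAt.toISOString(),
    rawJson: JSON.stringify(item.attributes),
  }));
}
```

Keeping the payload as raw JSON means later cleaning rules can change without re-fetching from the source.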

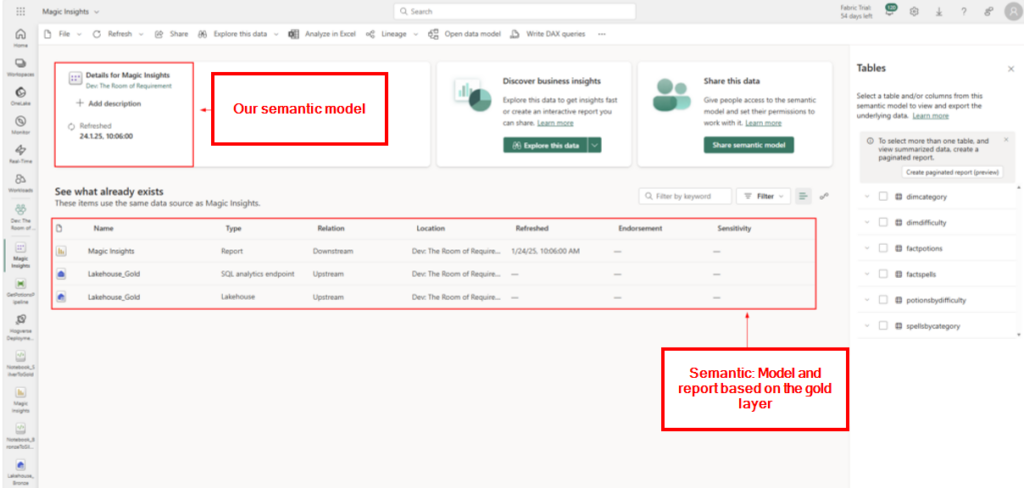

Semantic Model and Reporting – Built a semantic model on the Gold layer for reporting. We aimed for a star schema for optimal reporting, but the implementation is not yet complete. The reports show aggregated data and transformed columns.

An in-depth blog post has been written here.

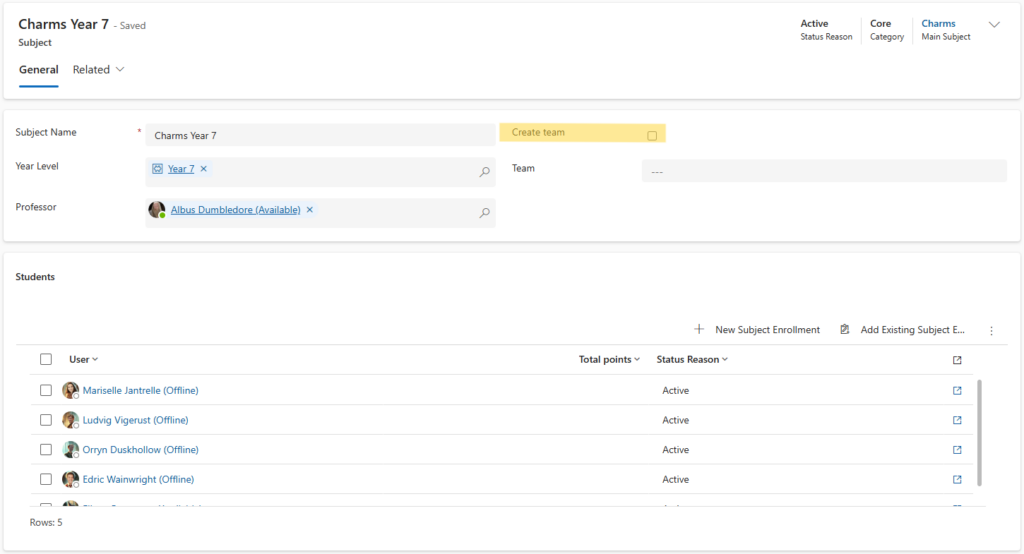

Low Code Charms

- Automated Teams channel creation using Power Automate.

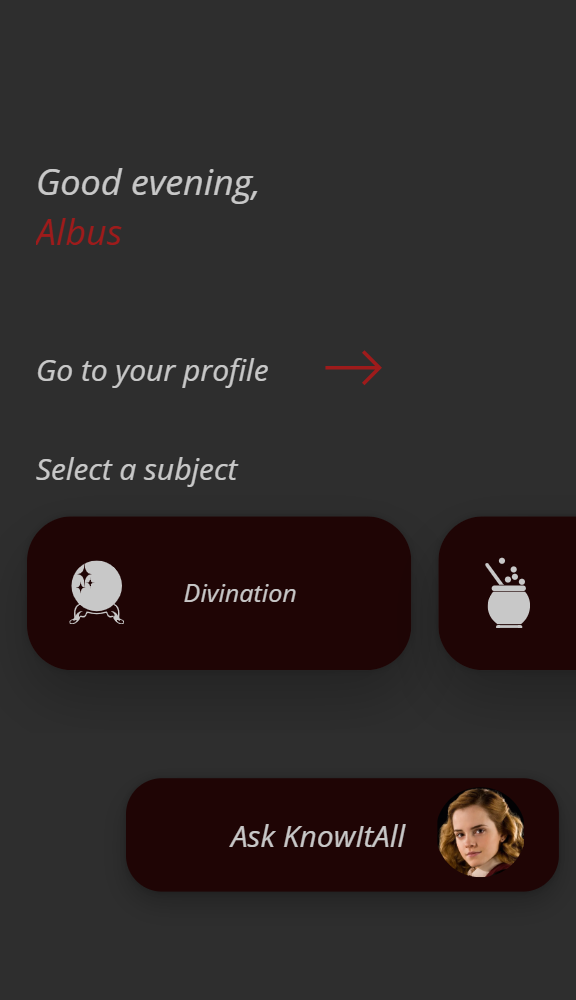

- Canvas App that enables on-the-go learning for enrolled subjects, breaking reliance on fixed class schedules.

- In the subject of Divination, students analyze tea or coffee cup patterns using AI Builder, ensuring interactive learning experiences.

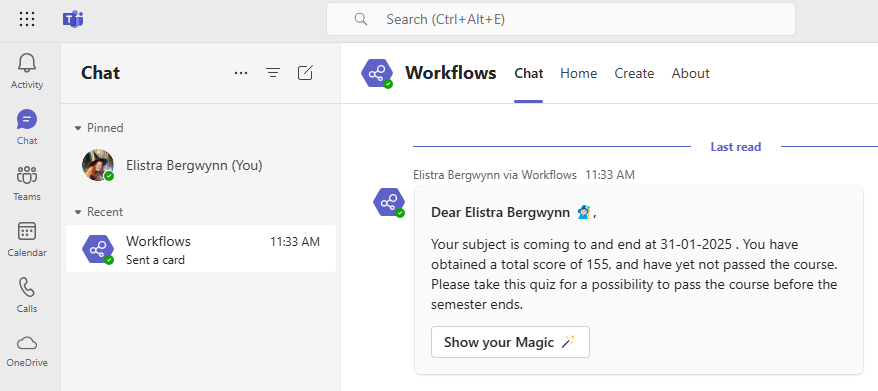

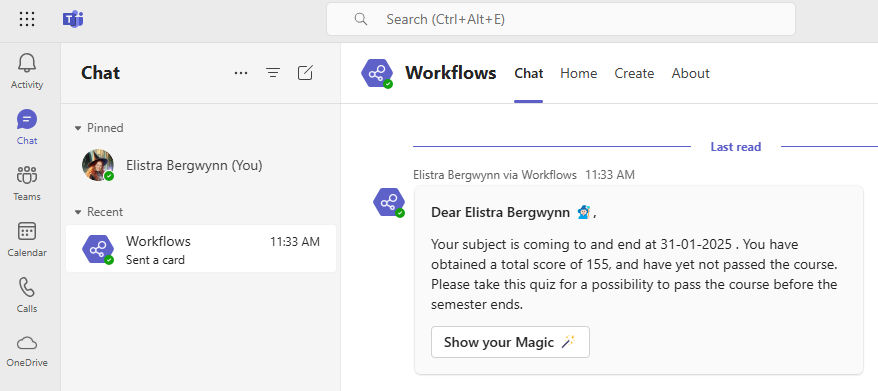

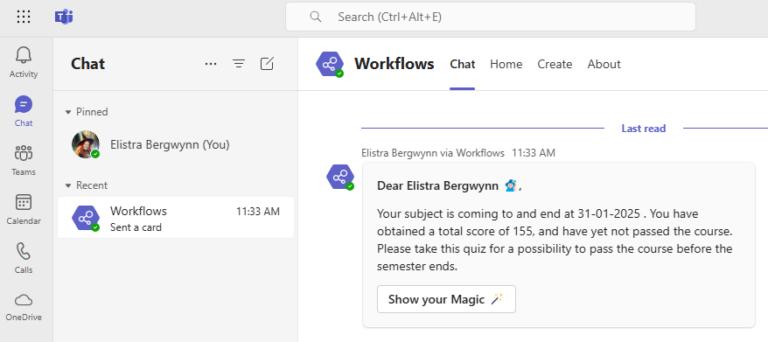

- Adaptive cards simplify communication between teachers and students, using Power Automate.

- Dataverse fields with the Formula data type, defined using Power Fx.

If you want to know more, come by and get a fortune reading 🔮

Pro-Code Potions

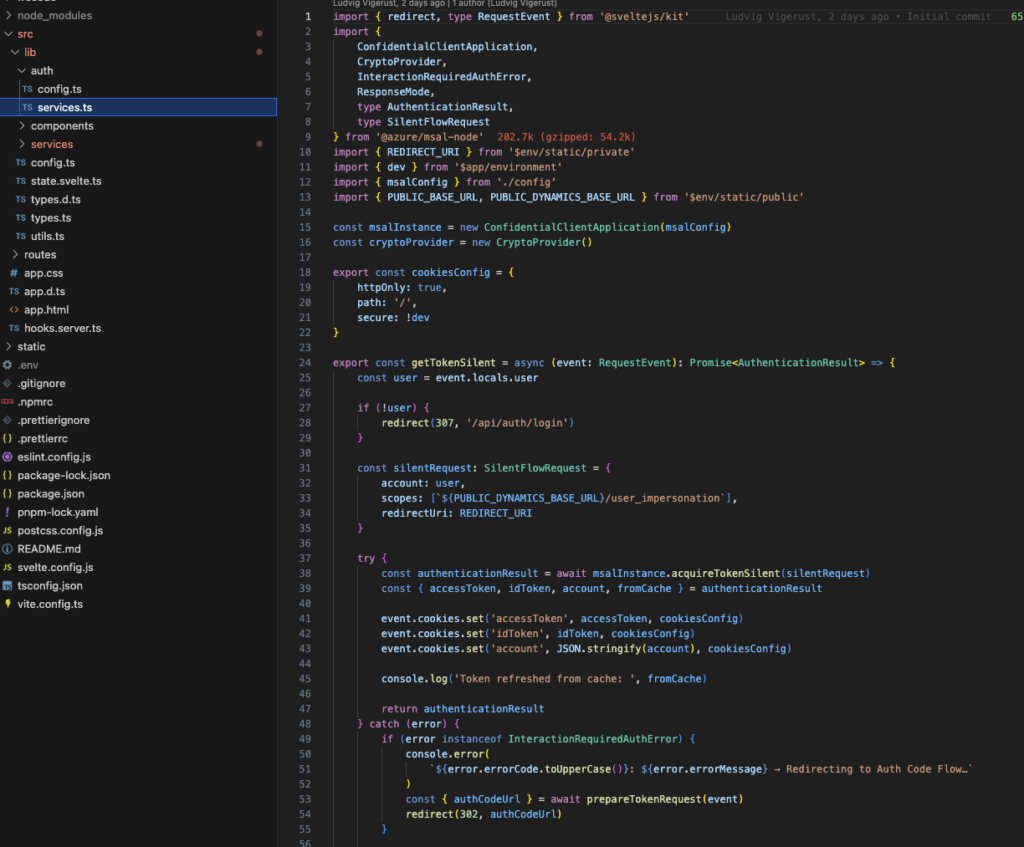

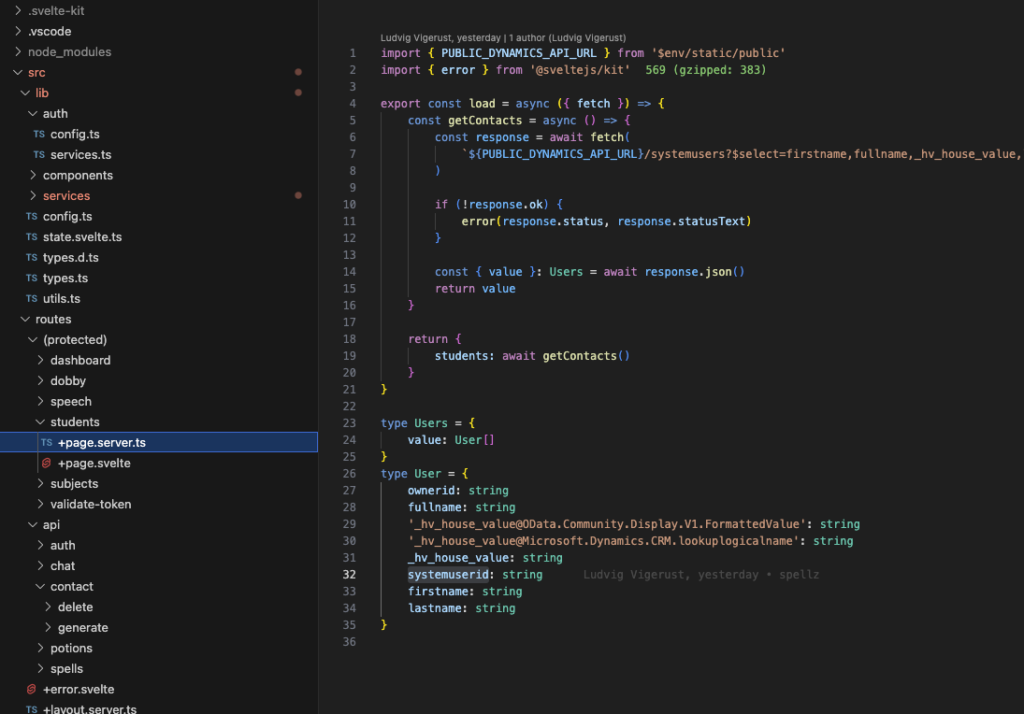

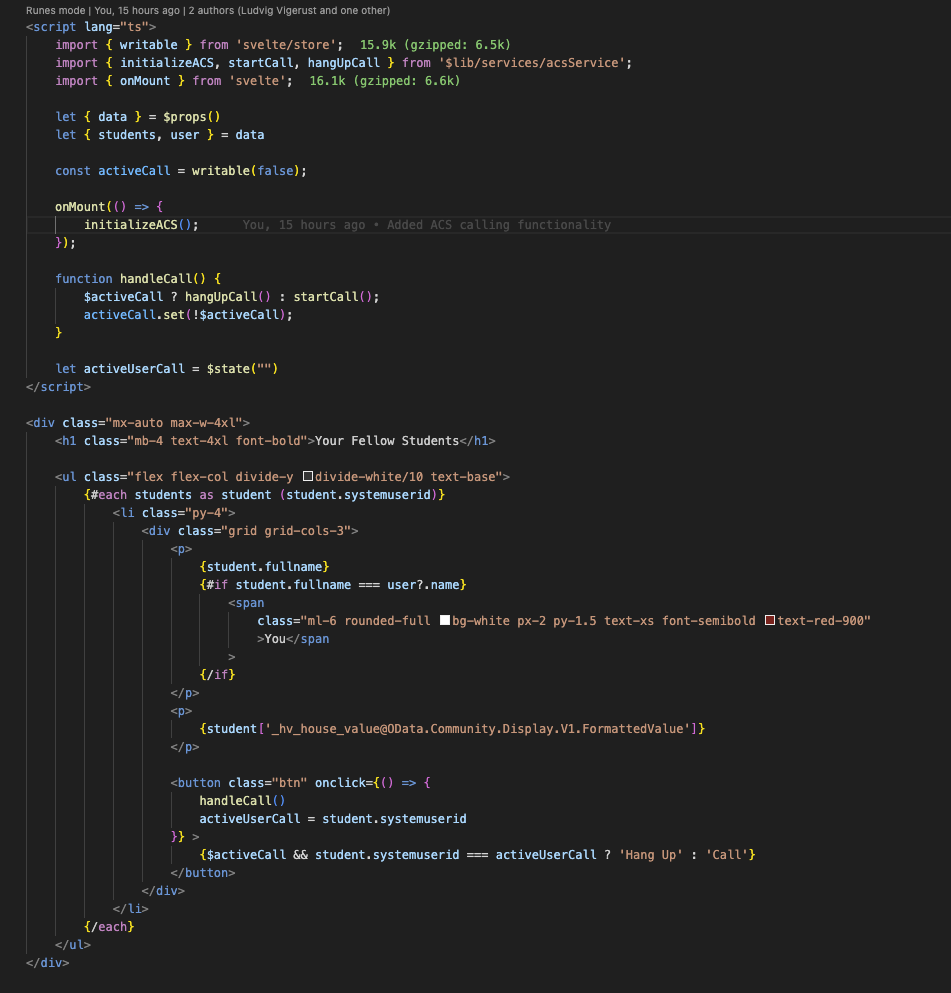

Our pro-code solution is a student portal made with Svelte 5 and SvelteKit.

Its main features are:

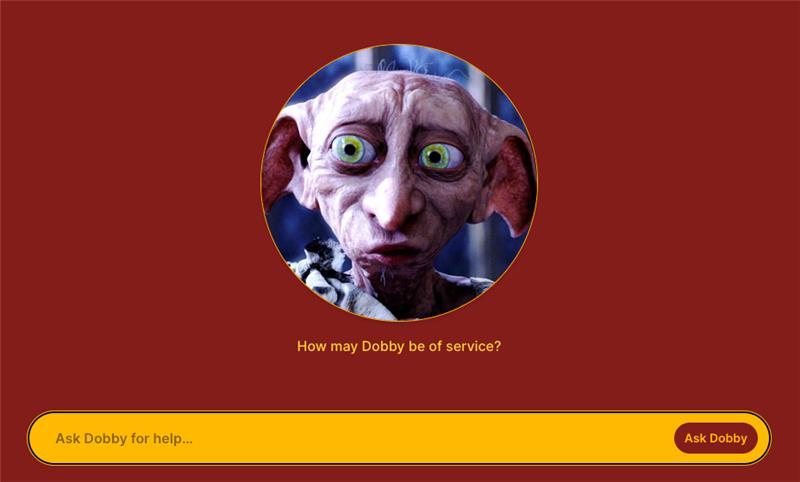

- Dobby – AI-assistant for students to learn new spells and potions

- Student Dashboard (Sorcerers Central)

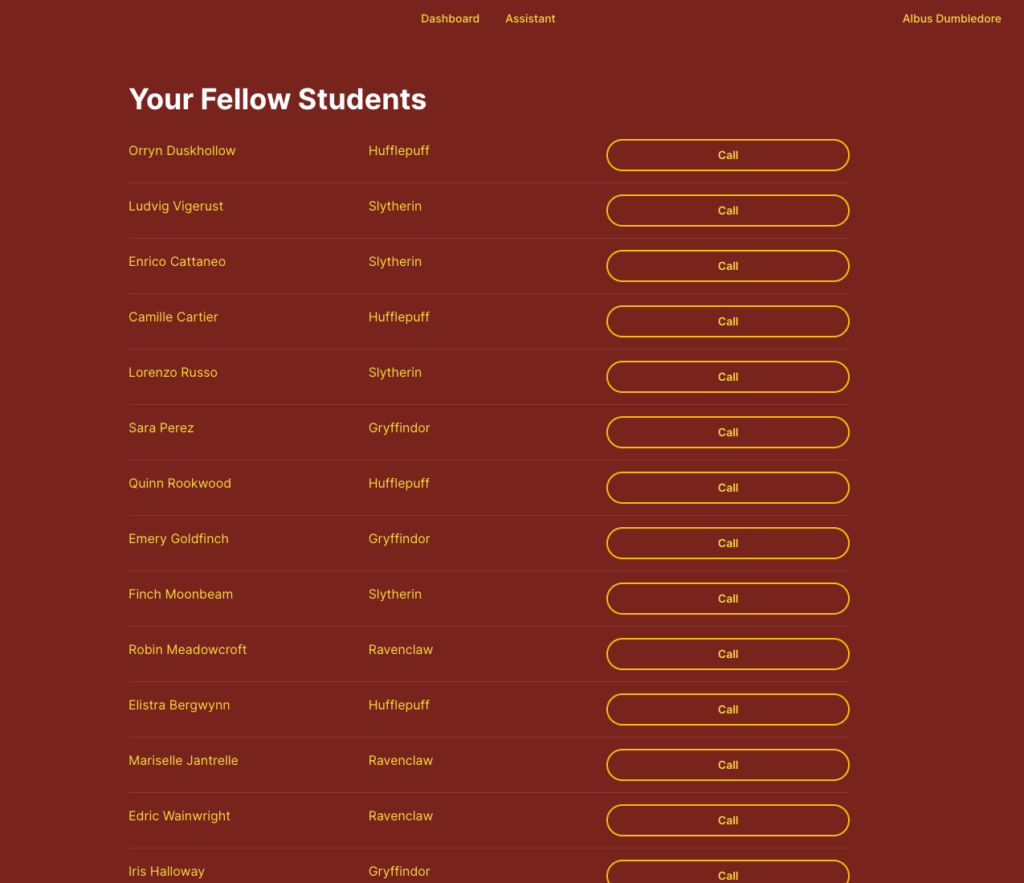

- Fellow Students – Call your classmates using Azure Communication Services

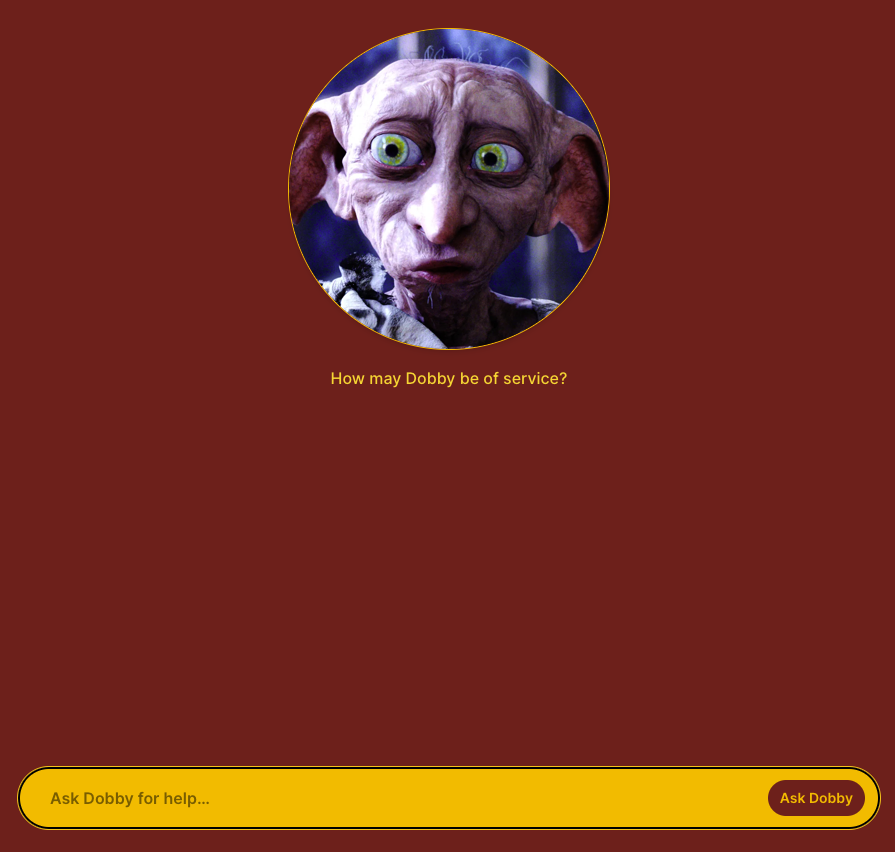

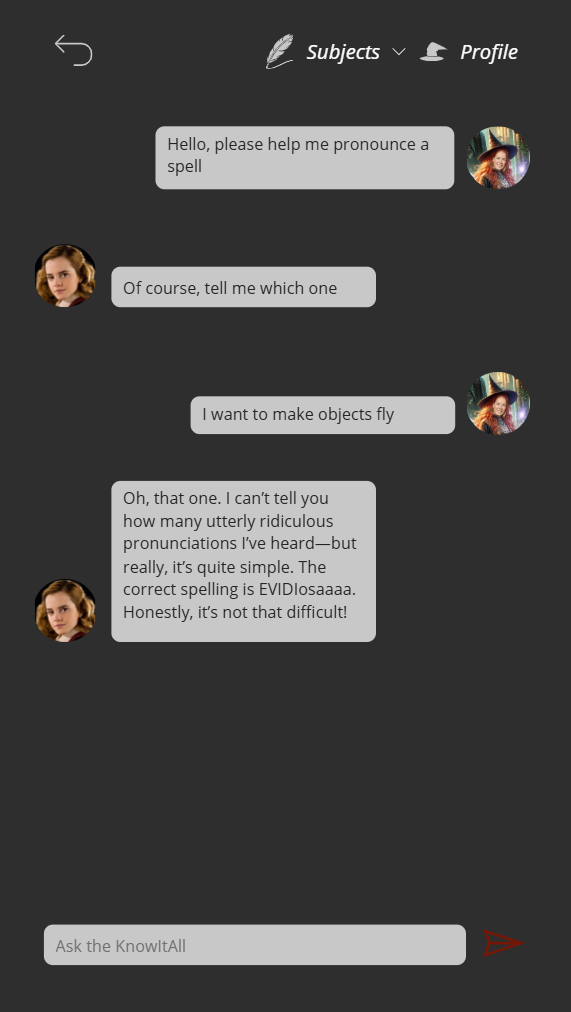

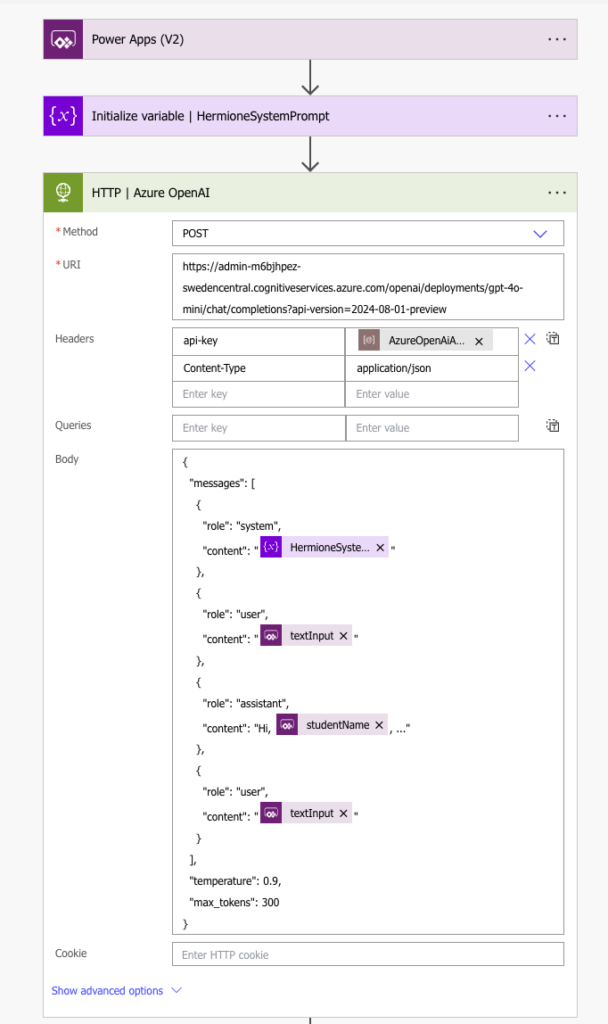

Dobby – AI-assistant

- Dobby is trained to recognize if the student is looking for a spell or potion that induces a certain effect.

- Based on its analysis of the request, it sends a request to the respective endpoint in the Harry Potter DB API and uses the desired effects as filters.

- It returns the results to the student, who can then add the spells and potions to their spell book or potion book.
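The flow in the list above can be sketched roughly as follows. This is an illustration under stated assumptions, not Dobby's actual code: `detectIntent` is a keyword-based stand-in for the model's classification step, and the base URL and the `filter[effect_cont]` parameter name are assumptions about the Harry Potter DB API that should be checked against its documentation.

```typescript
type Intent = "spell" | "potion";

// Stand-in for the AI classification step: a simple keyword check.
function detectIntent(message: string): Intent {
  const text = message.toLowerCase();
  const potionWords = ["potion", "brew", "elixir", "drink"];
  return potionWords.some((word) => text.includes(word)) ? "potion" : "spell";
}

// Build the request URL for the matching endpoint, using the effect as a filter.
function buildQueryUrl(intent: Intent, effect: string): string {
  const base = "https://api.potterdb.com/v1"; // assumed base URL
  const resource = intent === "potion" ? "potions" : "spells";
  const filterKey = encodeURIComponent("filter[effect_cont]"); // assumed filter name
  return `${base}/${resource}?${filterKey}=${encodeURIComponent(effect)}`;
}
```

For example, a message such as "I need a potion that heals" would be routed to the potions endpoint with the effect passed as the filter value.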

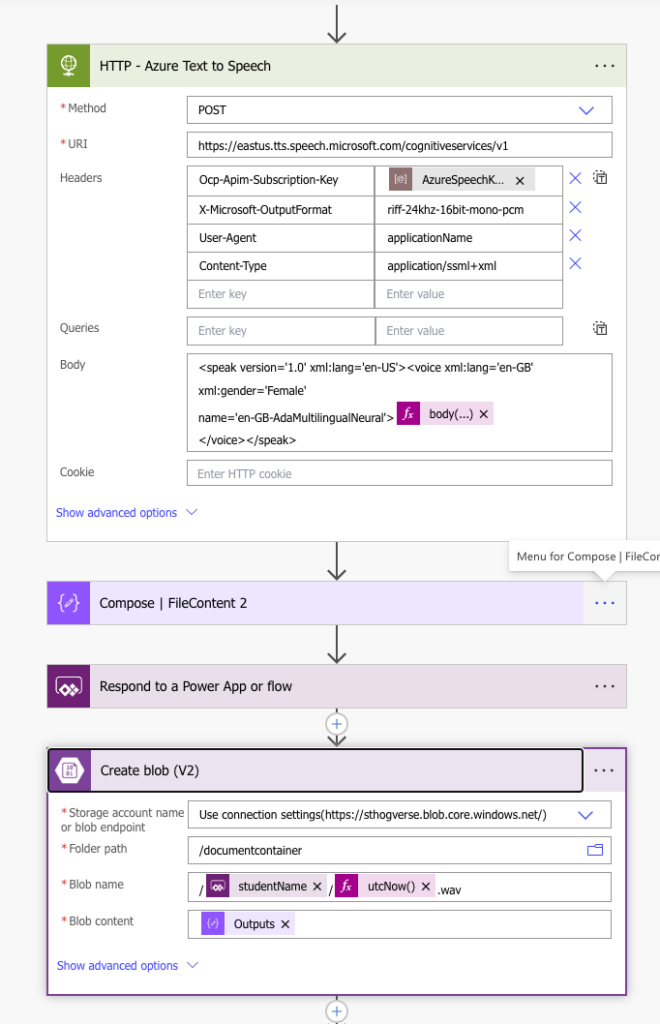

Student Dashboard (Sorcerers Central)

- The student dashboard is the main hub for the students.

- Here they can see their personal progress at Hogwarts at a glance.

- The dashboard has sections for Mischievements, Spellbook, Potions and Subjects.

Fellow Students – Call your classmates

- This page contains a list of all students in your year.

- If you ever need to call a friend, you can do that right from this page using Azure Communication Services in the background.

Read about searching in natural language here.

Read about the ACS and phone solution here.

Digital Transformation

- Replaced manual processes with automated workflows for subject management, teacher-student communication, and achievement tracking.

- Enabled online learning to maintain education during crises, like school closures or emergencies.

- Integrated adaptive cards in Teams for real-time updates on tests, achievements, and behavioral notifications.

- Used Power Automate to trigger parent notifications and contract signing for mischievements, ensuring accountability.

- Leveraged AI (GPT-4 and AI Builder) to create interactive and engaging learning experiences for students.
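As an example of the kind of payload an adaptive card notification carries, here is a minimal sketch that builds a card as plain JSON. The function name, the fields shown, and the sample values are hypothetical; the team's actual cards and the Power Automate flows that post them are not shown in this post.

```typescript
interface Fact {
  title: string;
  value: string;
}

// Build a simple Adaptive Card: a bold title, the student name, and a list of facts.
function buildAchievementCard(student: string, title: string, facts: Fact[]) {
  return {
    type: "AdaptiveCard",
    $schema: "http://adaptivecards.io/schemas/adaptive-card.json",
    version: "1.4",
    body: [
      { type: "TextBlock", text: title, weight: "Bolder", size: "Medium", wrap: true },
      { type: "TextBlock", text: `Student: ${student}`, wrap: true },
      { type: "FactSet", facts },
    ],
  };
}

// Hypothetical sample values, for illustration only.
const sampleCard = buildAchievementCard("Hermione Granger", "New achievement", [
  { title: "Subject", value: "Potions" },
  { title: "Points", value: "10" },
]);
const sampleCardJson = JSON.stringify(sampleCard, null, 2);
```

The resulting JSON string is what would be pasted into, or generated for, a Teams "post adaptive card" step in a flow.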

More detailed explanation here.

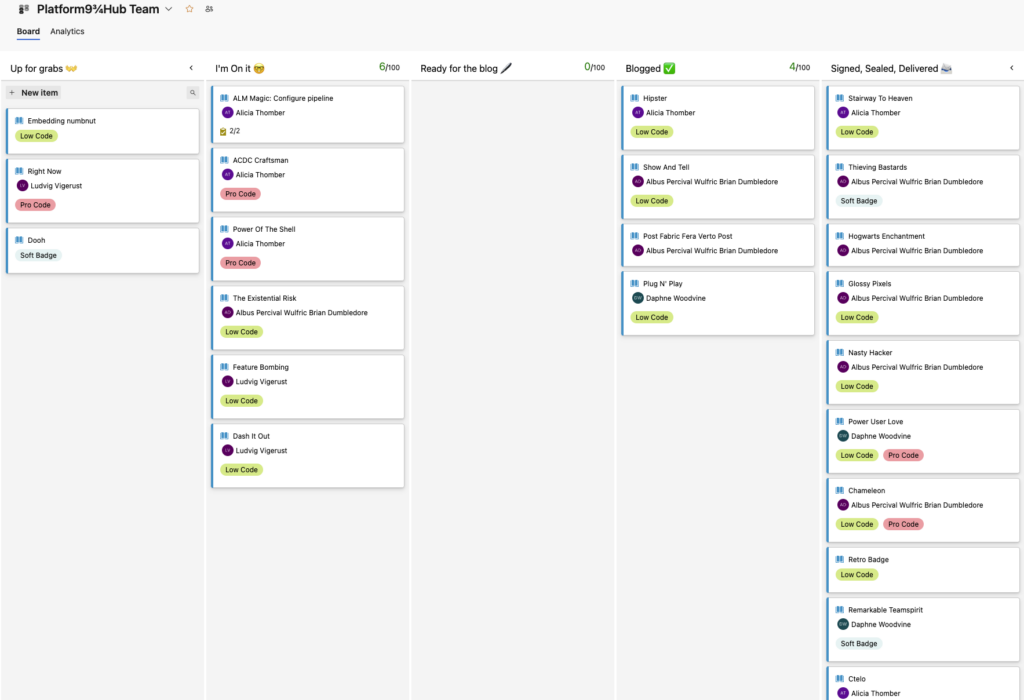

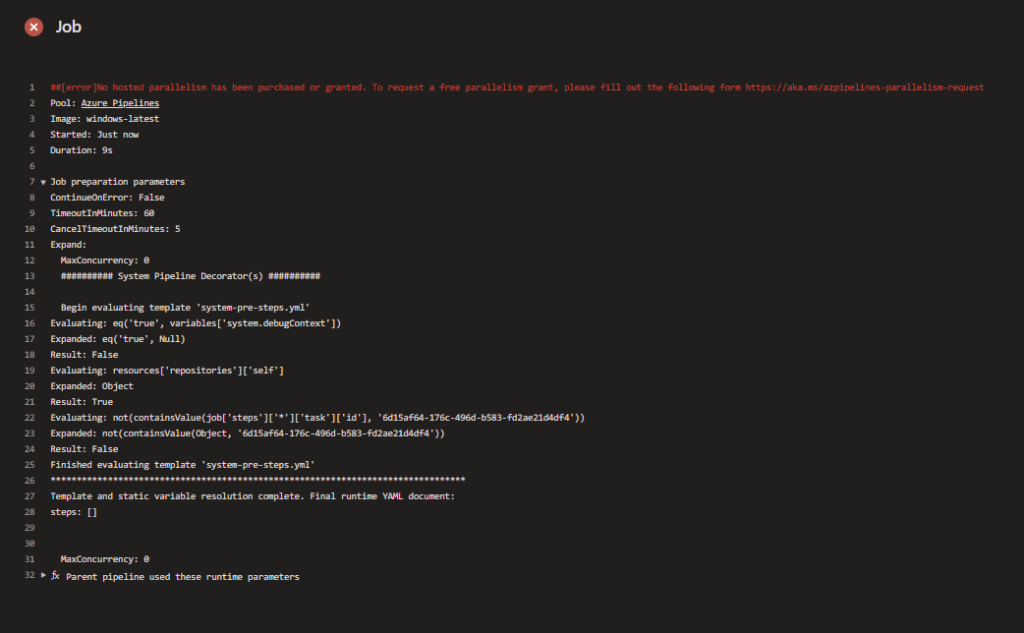

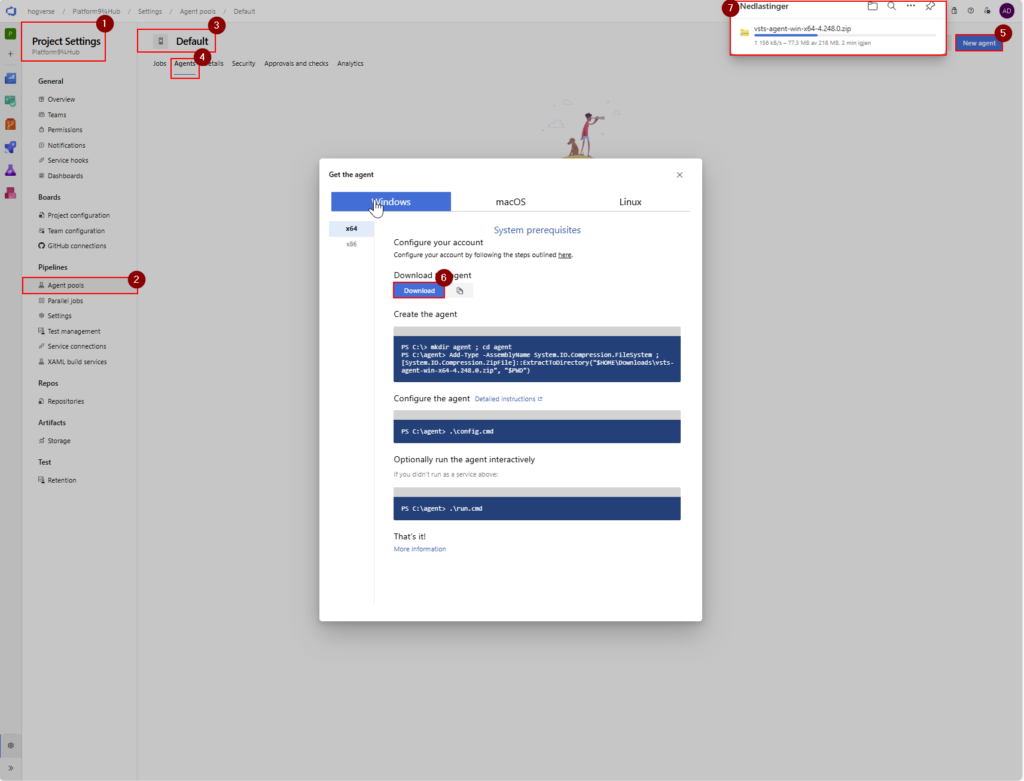

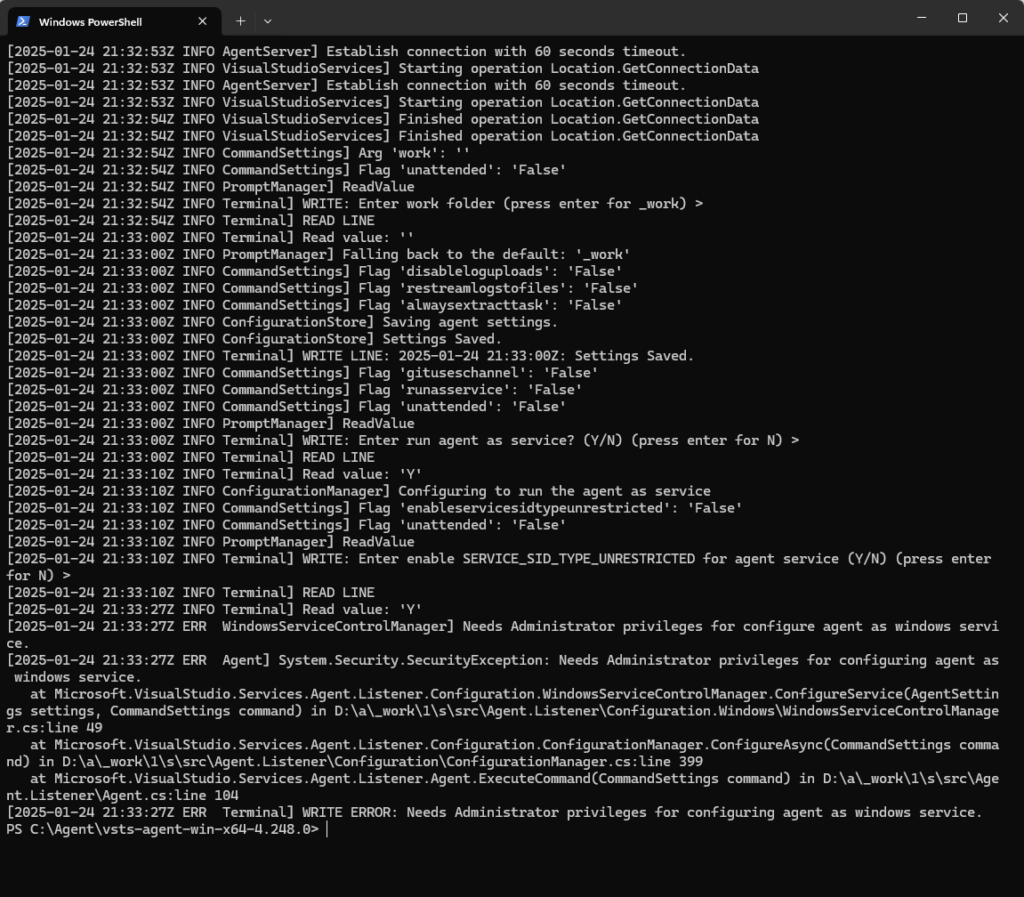

ALM Magic

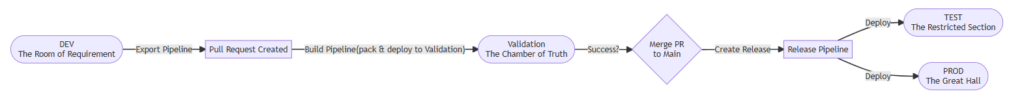

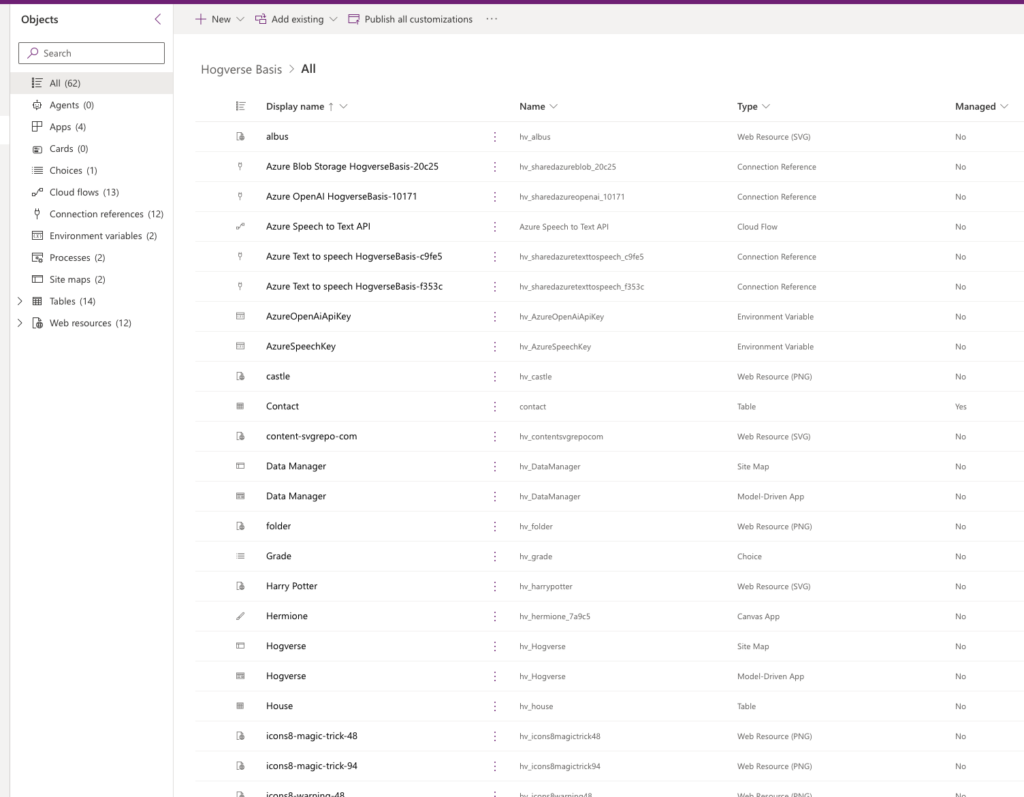

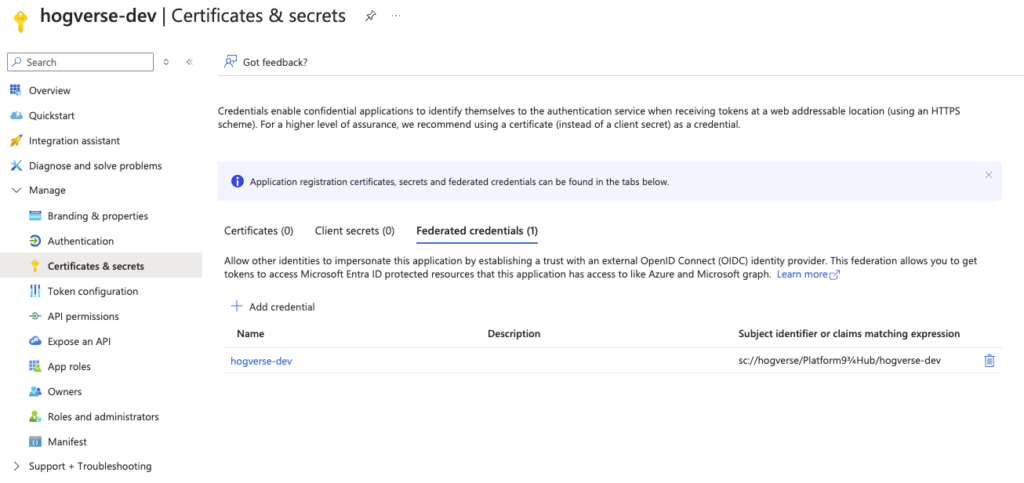

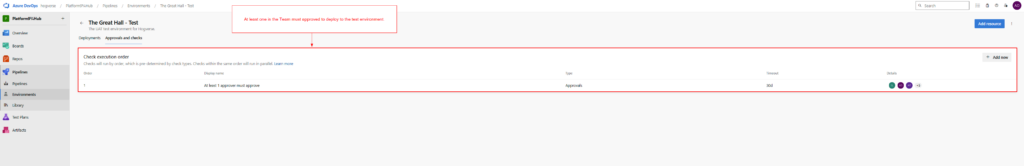

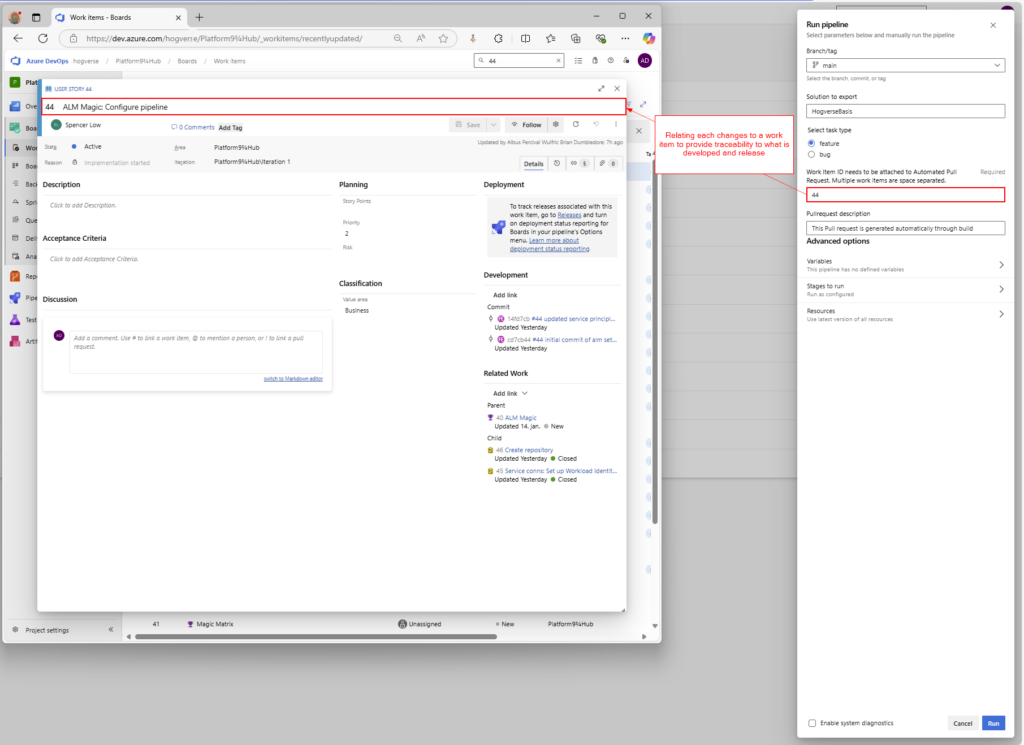

- Utilized Azure DevOps for boards, repos, and pipelines, with a service principal set up with Workload Identity Federation for the ADO connection. An in-depth overview of all code.

- Team Collaboration – Ensured seamless teamwork across CRM environments, Fabric pipelines, and GitHub collaboration for the Svelte project.

- Code and Solution Management – Maintained an organized approach with naming conventions, solution strategies, and in-depth code overviews.

- Streamlined Deployment – Automated pipelines supported structured deployments across Dev, Test, and Prod, with detailed diagrams for clarity.

- Issue Resolution – Tracked and resolved challenges transparently through DevOps boards and GitHub issues.

An in-depth blog post has been written here.

Magic Matrix

- Combined Microsoft 365 tools like Teams, SharePoint, and Power Apps for seamless collaboration and learning management.

- Integrated GPT-4-powered Dobby Chat to fetch data from Harry Potter APIs, providing instant access to spells, potions, and more.

- Designed a Canvas App for on-the-go learning, utilizing AI Builder for interactive activities like tea leaf analysis in Divination.

- Prioritized user experience with real-time dashboards, responsive portals, and accessible tools for students and teachers.

- Ensured privacy, scalability, and innovation with a strong ALM strategy and secure integrations across platforms.

More detailed explanation here.