🧑💻 Update Your ALM – The Hipster Way 🎩

In the world of Application Lifecycle Management (ALM), being a hipster isn’t just about wearing glasses and drinking artisanal coffee. ☕ It’s about using the latest tech and best practices to ensure your solutions are compliant, governed, and respectful of privacy. Here’s how we’ve upgraded our ALM setup to be as sleek and modern as a barista’s latte art. 🎨

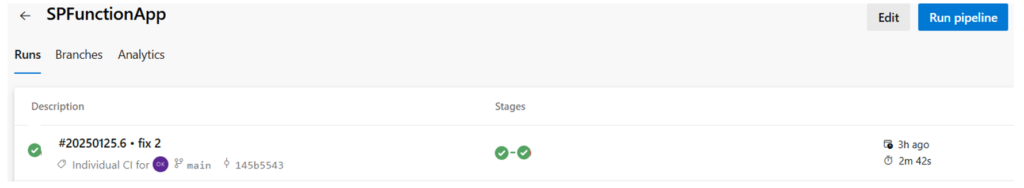

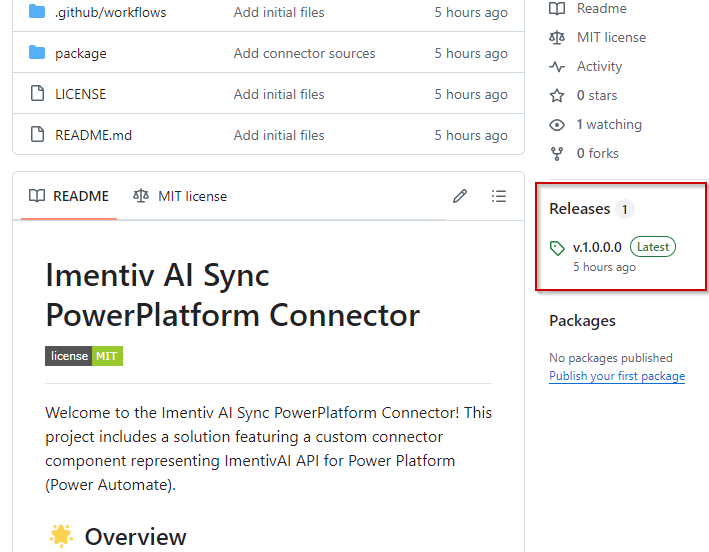

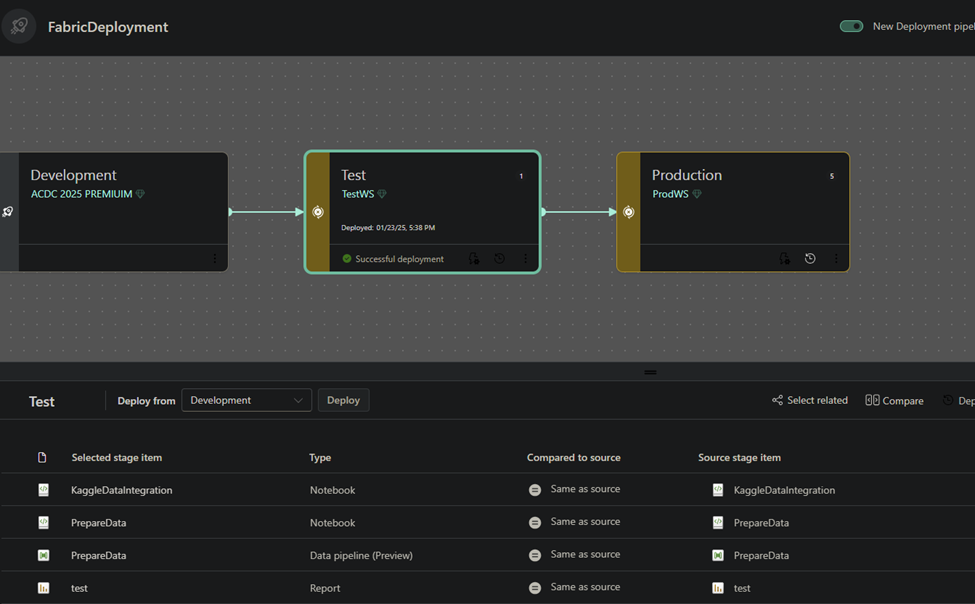

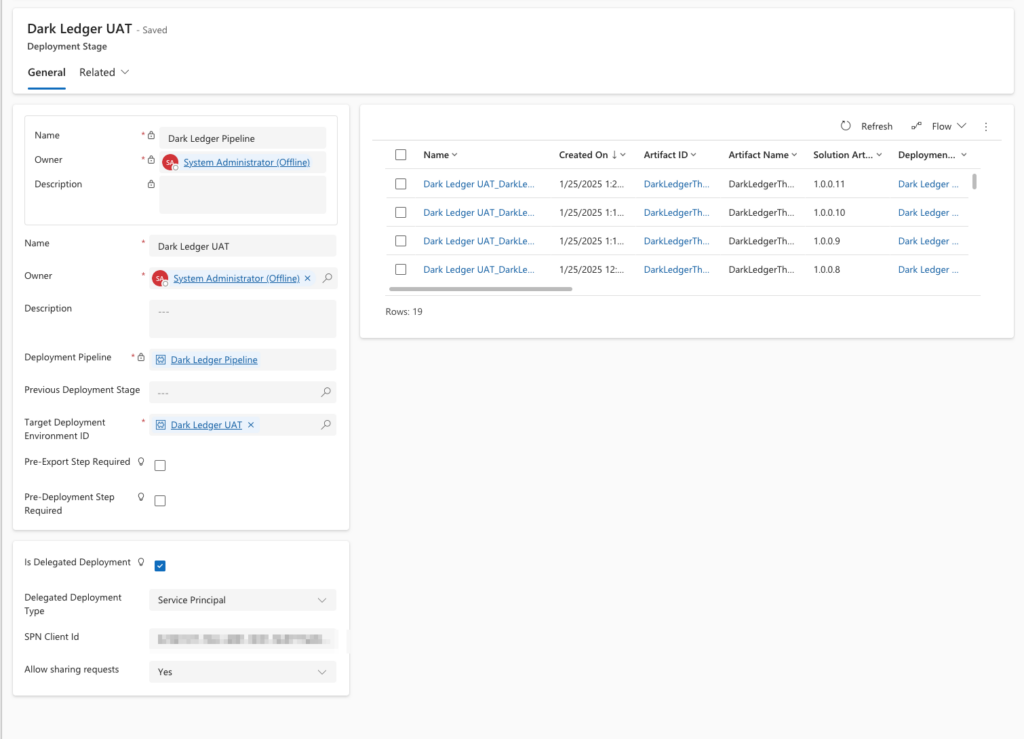

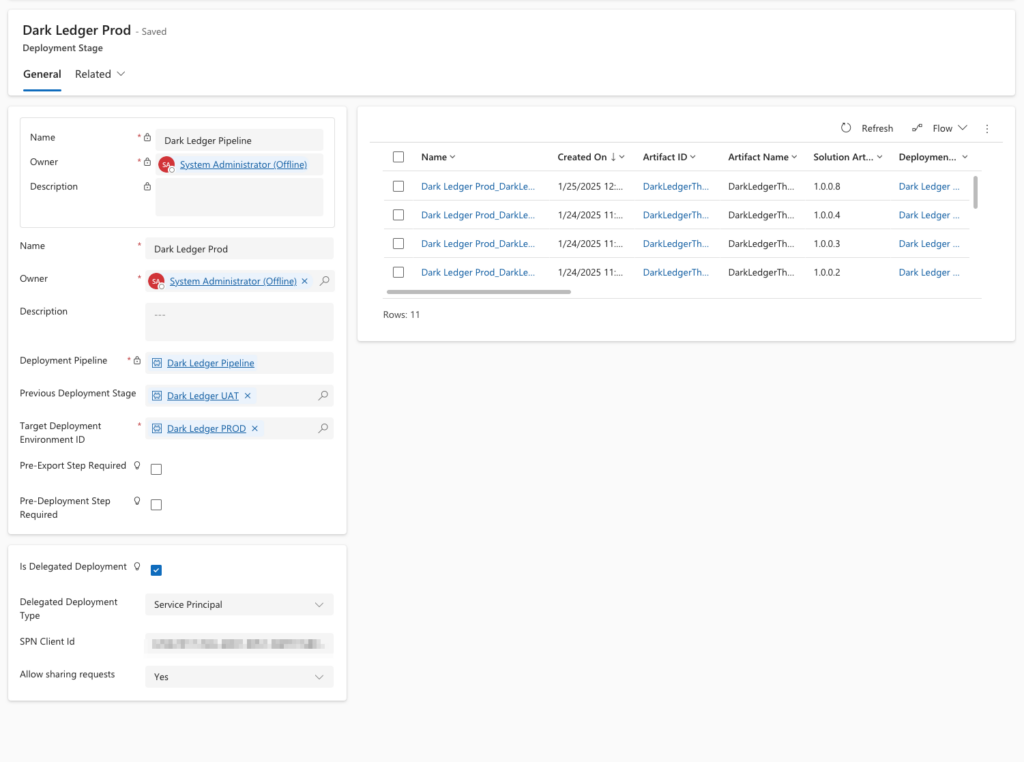

🌟 Delegated Deployment with SPNs

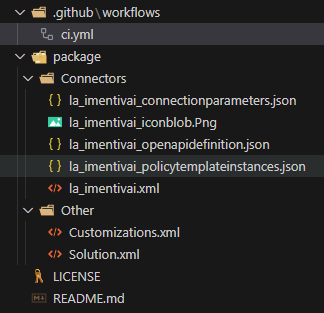

The backbone of our ALM setup is delegated deployment using a Service Principal (SPN). This ensures that our deployment process is:

- Secure: Deployments run under the SPN’s identity instead of a maker’s personal account, which minimizes the risk of unauthorized access.

- Streamlined: Delegated deployment allows automation without compromising governance.

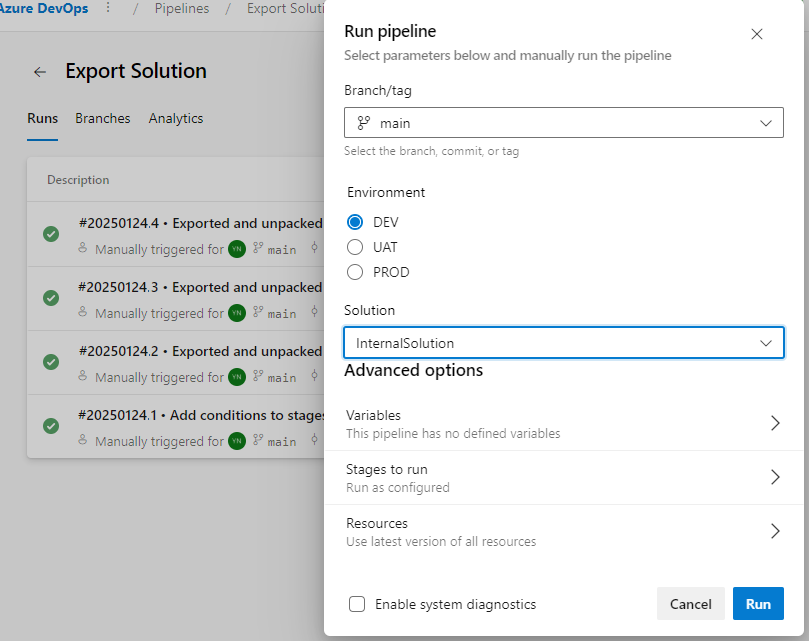

Key configuration steps:

- Set “Is Delegated Deployment” to Yes.

- Configure the required credentials for the SPN.
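For readers curious what “credentials for the SPN” boil down to, here is a minimal sketch of the OAuth 2.0 client-credentials request a service principal generally uses to get a token from Microsoft Entra ID. This is an illustration, not the pipeline’s internal implementation: the function name `build_token_request`, the `ALM_*` environment variable names, and the placeholder environment URL are all assumptions made for this example. The sketch only builds the request and does not send it.

```python
import os
from urllib.parse import urlencode


def build_token_request(
    tenant_id: str, client_id: str, client_secret: str, environment_url: str
) -> tuple[str, str]:
    """Return (token_url, form_body) for a client-credentials token request.

    Hypothetical helper for illustration; nothing is sent over the network.
    """
    token_url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"
    form_body = urlencode(
        {
            "grant_type": "client_credentials",
            "client_id": client_id,
            "client_secret": client_secret,
            # ".default" asks for the application permissions already granted to the SPN.
            "scope": f"{environment_url.rstrip('/')}/.default",
        }
    )
    return token_url, form_body


if __name__ == "__main__":
    # Assumed variable names; read secrets from the environment, never from source code.
    url, _body = build_token_request(
        tenant_id=os.environ.get("ALM_TENANT_ID", "<tenant-id>"),
        client_id=os.environ.get("ALM_CLIENT_ID", "<client-id>"),
        client_secret=os.environ.get("ALM_CLIENT_SECRET", "<client-secret>"),
        environment_url=os.environ.get(
            "ALM_ENVIRONMENT_URL", "https://<your-org>.crm.dynamics.com"
        ),
    )
    print(url)  # The body is not printed because it contains the secret.
```

Keeping the secret in an environment variable (or a vault) rather than in the flow or script itself is the point here: the SPN’s secret never has to be shared with the makers who trigger deployments.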

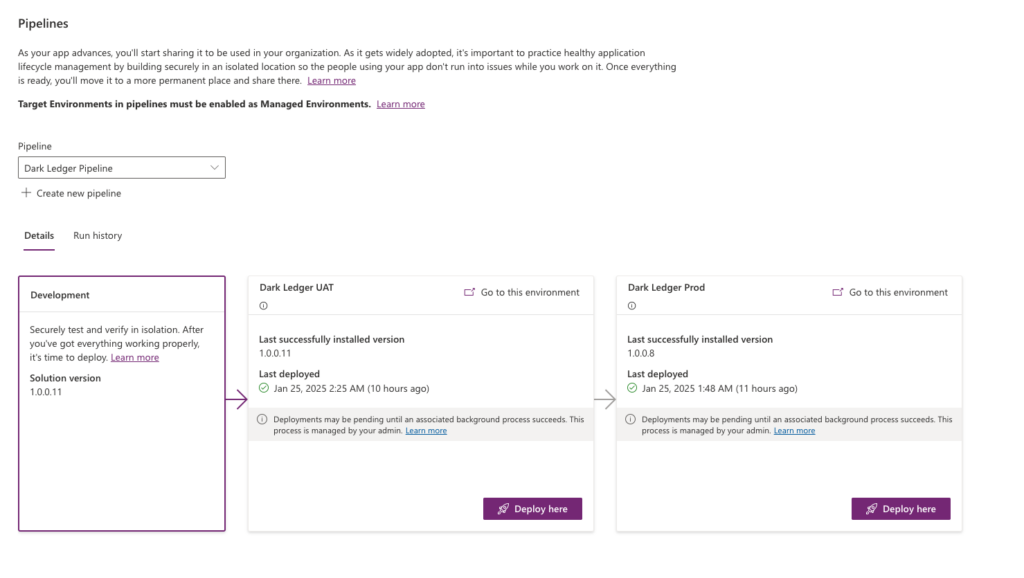

UAT:

PROD:

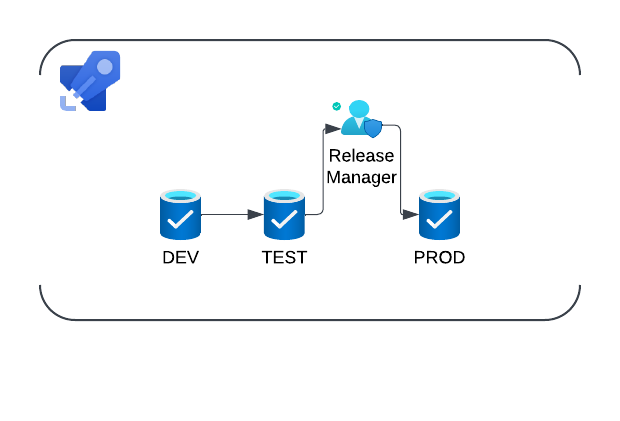

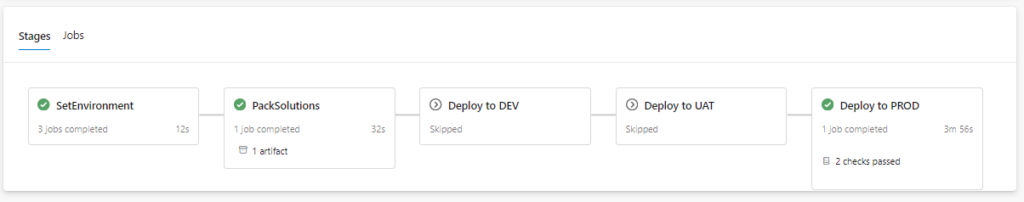

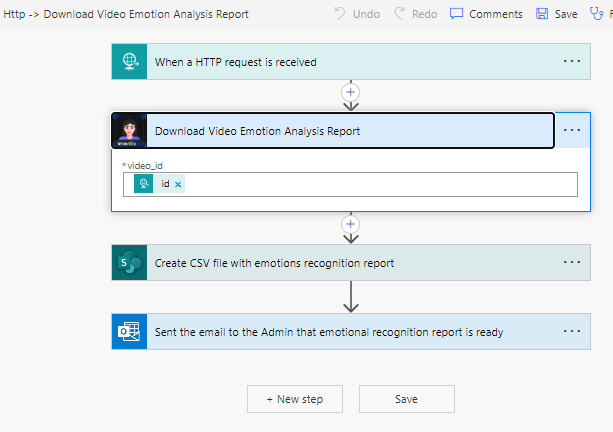

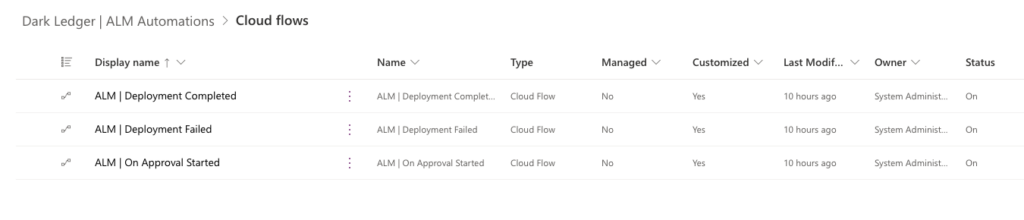

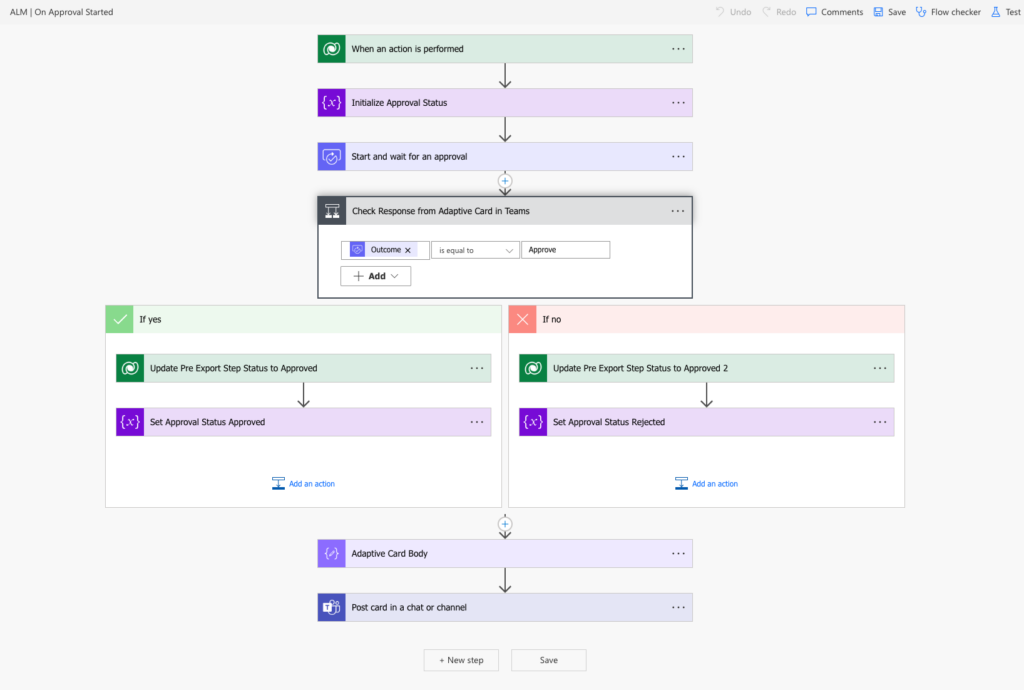

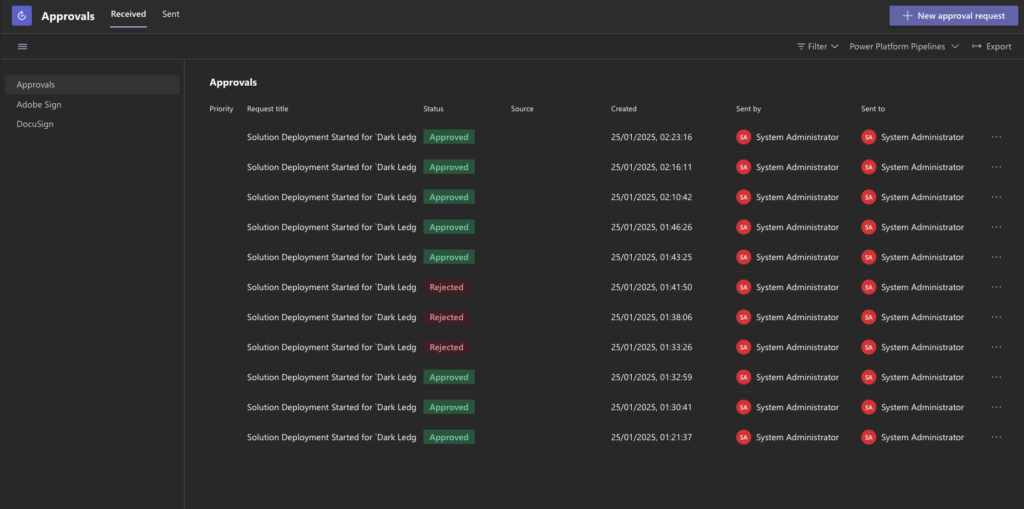

📜 Approval Flows for Deployment

Governance is king 👑, and we’ve built a solid approval process to ensure that no deployment goes rogue:

- Triggering the Approval:

  - When a deployment starts, the “OnApprovalStarted” action triggers a flow.

  - The flow sends an approval request to the administrator in Microsoft Teams.

- Seamless Collaboration:

  - Once approved, the deployment process kicks off.

  - If rejected, the process halts, protecting production from unwanted changes.
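The approve-or-reject gate described above can be sketched in a few lines. In our setup this logic lives in a Power Automate flow, so the Python below is only a model of the branching; `StageRun`, `Decision`, `apply_decision`, and the status strings are hypothetical names invented for this example.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class StageRun:
    """Hypothetical stand-in for one deployment request waiting at the gate."""

    stage_run_id: str
    target: str  # e.g. "UAT" or "PROD"
    status: str = "pending_approval"
    comments: str = ""


def apply_decision(run: StageRun, decision: Decision, comments: str = "") -> StageRun:
    """Move a pending run forward on approval, or halt it on rejection."""
    if run.status != "pending_approval":
        # A run can only be decided once; this guards against duplicate responses.
        raise ValueError(f"Run {run.stage_run_id} is not awaiting approval")
    run.status = "deploying" if decision is Decision.APPROVED else "halted"
    run.comments = comments
    return run


if __name__ == "__main__":
    approved = apply_decision(StageRun("run-1", "PROD"), Decision.APPROVED)
    rejected = apply_decision(StageRun("run-2", "PROD"), Decision.REJECTED, "Not ready")
    print(approved.status, rejected.status)
```

The guard against deciding the same run twice is a design choice worth copying into the real flow, since approval responses can arrive more than once.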

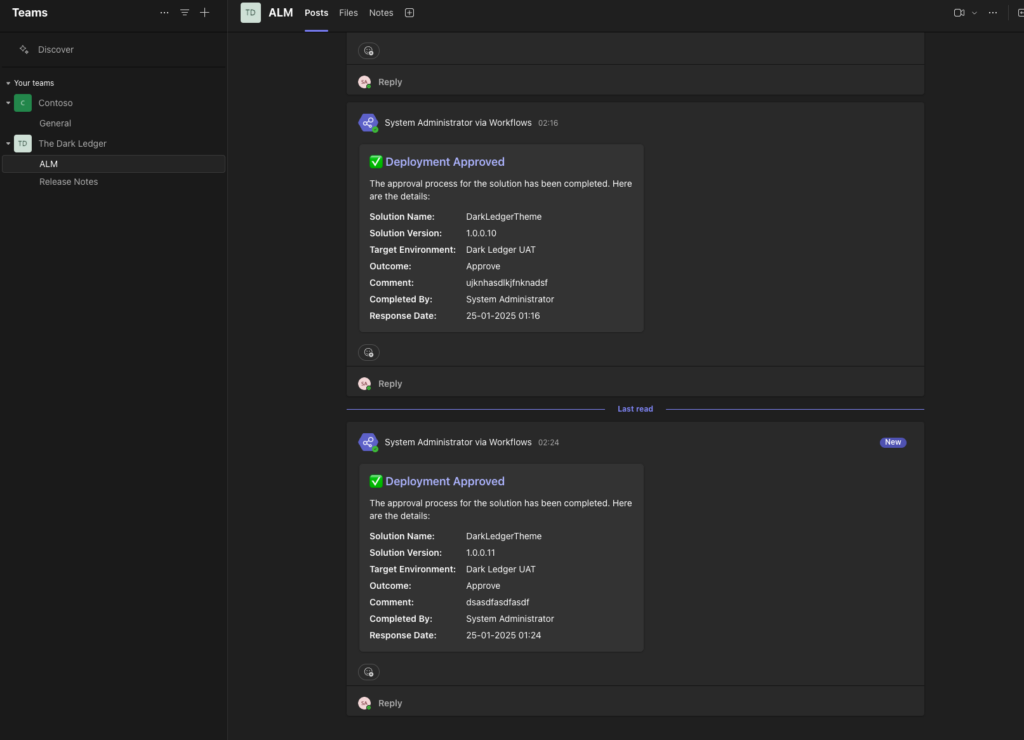

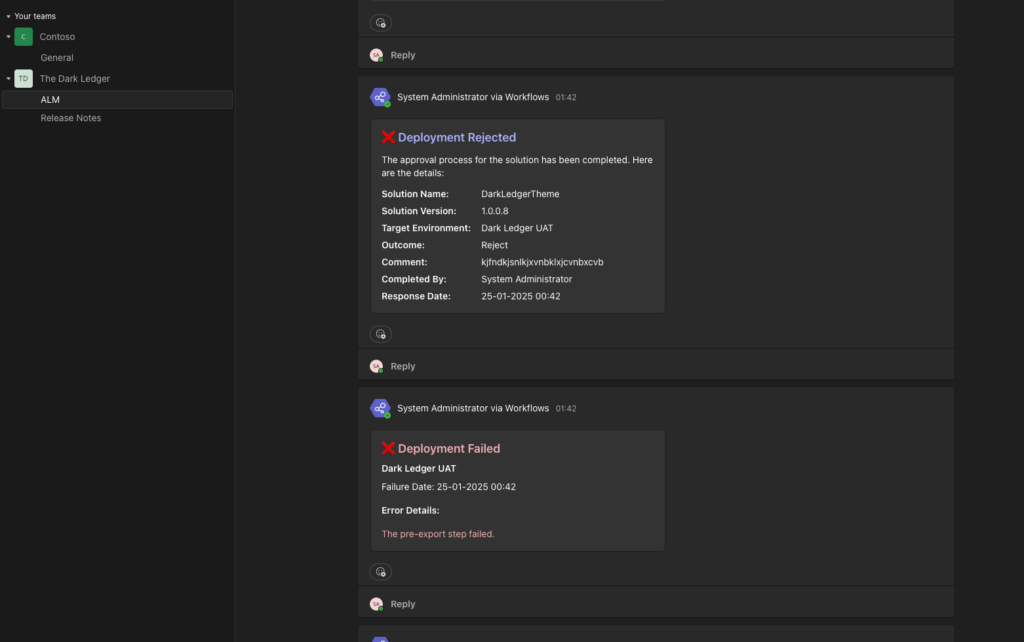

📢 Teams Integration: Keeping Everyone in the Loop

Adaptive Cards are the stars of our communication strategy:

- Monitoring Deployments:

- Each deployment sends an adaptive card to the “ALM” channel in Microsoft Teams.

- Developers and stakeholders can easily follow the deployment routine in real-time.

- Error Alerts:

- If a deployment fails, an error flow triggers and sends a detailed adaptive card to the same channel.

- This ensures transparency and swift troubleshooting.

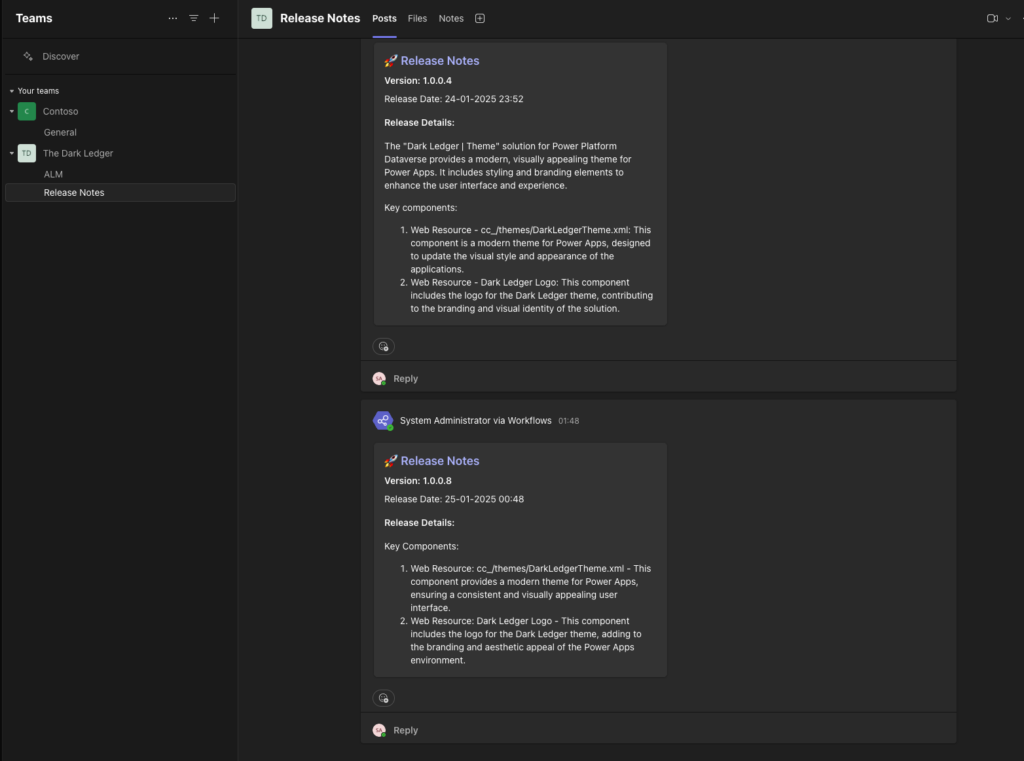

- Release Notes:

- Upon a successful production deployment, a flow automatically posts an adaptive card in the “Release Notes” channel.

- End users can see the latest updates and enhancements at a glance.
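To make the card and channel logic above concrete, here is a minimal sketch of an Adaptive Card payload and the routing rule. The card uses standard Adaptive Card elements (`TextBlock`, `FactSet`); everything else is an assumption for illustration, including the function names `build_deployment_card` and `pick_channels`, the status strings, and the sample solution name. Our real cards are built inside Power Automate, not in Python.

```python
import json

ADAPTIVE_CARD_SCHEMA = "http://adaptivecards.io/schemas/adaptive-card.json"


def build_deployment_card(solution: str, stage: str, status: str, details: str = "") -> dict:
    """Build an Adaptive Card payload summarizing one deployment."""
    body = [
        {
            "type": "TextBlock",
            "text": f"Deployment {status}",
            "weight": "Bolder",
            "size": "Medium",
            # Red for failures, green otherwise.
            "color": "Attention" if status == "failed" else "Good",
            "wrap": True,
        },
        {
            "type": "FactSet",
            "facts": [
                {"title": "Solution", "value": solution},
                {"title": "Stage", "value": stage},
                {"title": "Status", "value": status},
            ],
        },
    ]
    if details:
        # Error text or release notes go in an extra wrapped block.
        body.append({"type": "TextBlock", "text": details, "wrap": True})
    return {
        "type": "AdaptiveCard",
        "$schema": ADAPTIVE_CARD_SCHEMA,
        "version": "1.4",
        "body": body,
    }


def pick_channels(stage: str, status: str) -> list[str]:
    """Every deployment goes to "ALM"; successful PROD runs also go to "Release Notes"."""
    channels = ["ALM"]
    if stage == "PROD" and status == "succeeded":
        channels.append("Release Notes")
    return channels


if __name__ == "__main__":
    # "HipsterALM" is a made-up solution name.
    card = build_deployment_card("HipsterALM", "PROD", "succeeded", "New approval dashboard.")
    print(pick_channels("PROD", "succeeded"))
    print(json.dumps(card, indent=2))
```

Keeping the routing rule in one small function mirrors how the flows are split: one path for monitoring and errors, one extra path for release notes.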

🛡️ Why This Matters

Our upgraded ALM setup doesn’t just look cool—it delivers real business value:

- Compliance: Ensures all deployments follow governance policies.

- Privacy: Protects sensitive credentials with SPN-based authentication.

- Efficiency: Automates processes, reducing manual intervention and errors.

- Transparency: Keeps all stakeholders informed with real-time updates and error reporting.

💡 Lessons from the ALM Hipster Movement

- Automation is Key: From approvals to error handling, automation reduces the risk of human error.

- Communication is Power: Integrating Teams with adaptive cards keeps everyone in the loop, fostering collaboration.

- Governance is Non-Negotiable: With SPNs and approval flows, we’ve built a secure and compliant deployment pipeline.

🌈 The Cool Factor

By blending cutting-edge tech like adaptive cards, Power Automate flows, and Teams integration, we’ve turned a routine ALM process into a modern masterpiece. It’s not just functional—it’s stylish, efficient, and impactful.