In our solution, users gather ingredients using object detection in a Canvas App. The AI model behind this was trained on objects around the conference venue, so we wanted to strengthen the connection between the app and the real world. Since we already had access to the user's geolocation through the Geolocation Web API, both inside the Canvas App and in any PCF component, we decided to use this data to place the active users on a 3D representation of the venue, expressing our power user love by merging 3D graphics with the OOB Canvas App elements.

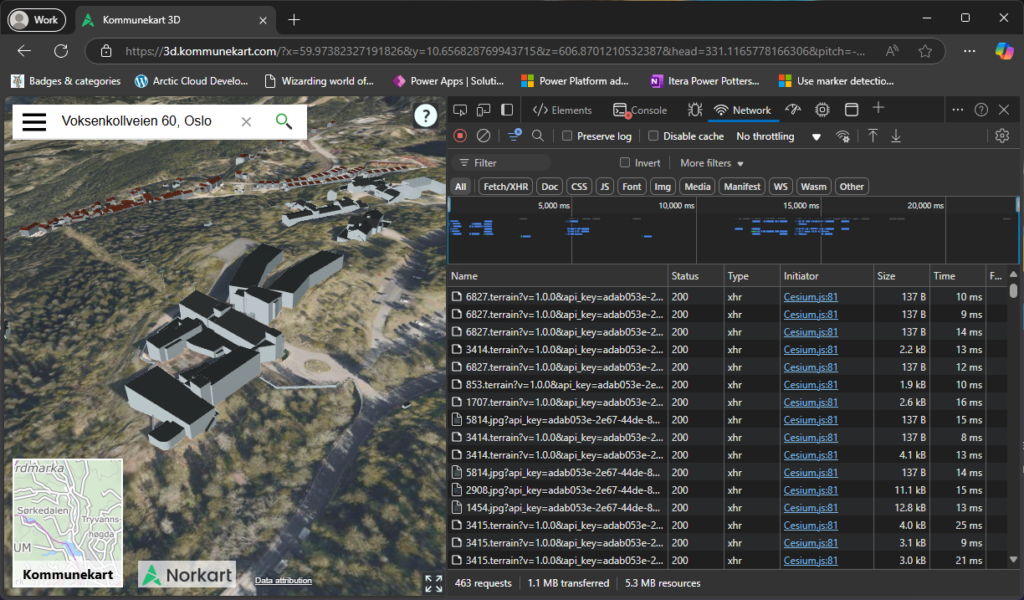

We found a simple volume model of the buildings on the map service Kommunekart 3D, but the data appears to be provided by Norkart and is not freely available.

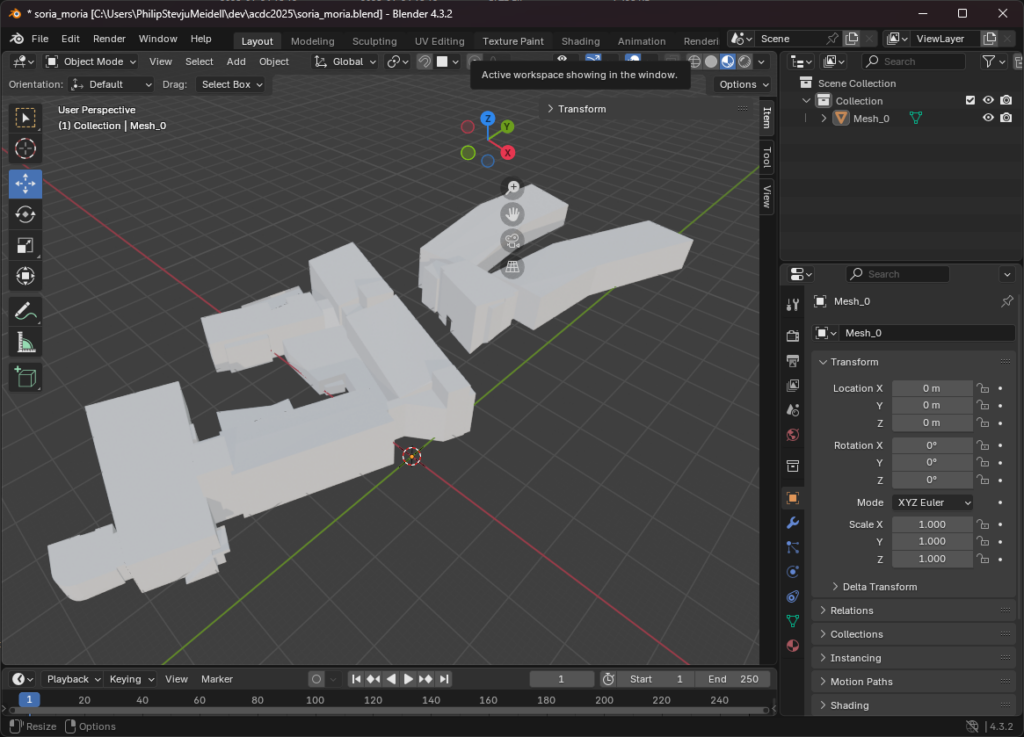

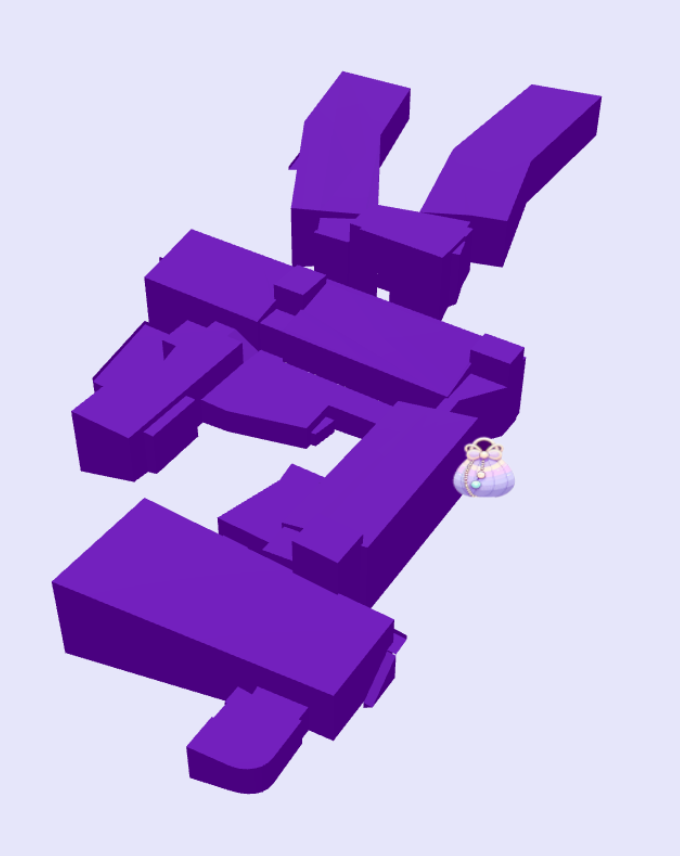

Like the thieving bastards we are, we decided to scrape the 3D model off the site by fetching all the resources that looked like binary 3D data. The data turned out to be in B3DM format, and one of the files contained the buildings we were after. We used Blender to clean up the model by removing the surrounding buildings, and exported it to the glTF 3D file format for use in a WebGL 3D context.

We decided to render the 3D model with Three.js, which let us create an HTML canvas element inside the PCF component and use its WebGL context to render the model in 3D. The canvas is re-rendered continuously using requestAnimationFrame under the hood, which makes it efficient in a browser context. The glTF model was loaded from a data URI, as a workaround for the web resource file format restrictions.
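For anyone wanting to try the data URI workaround, here is a minimal sketch of how the bytes of an exported model could be turned into a data URI. It is not necessarily how the component does it: the helper names `toBase64` and `toGltfDataUri` are hypothetical, and it assumes a binary `.glb` export, whose registered MIME type is `model/gltf-binary`. The base64 encoder is written by hand only to keep the sketch free of dependencies.

```typescript
const B64_ALPHABET =
  "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";

// Hypothetical helper: standard base64 encoding of raw bytes, with "=" padding.
function toBase64(bytes: Uint8Array): string {
  let out = "";
  for (let i = 0; i < bytes.length; i += 3) {
    const hasB1 = i + 1 < bytes.length;
    const hasB2 = i + 2 < bytes.length;
    const b0 = bytes[i];
    const b1 = hasB1 ? bytes[i + 1] : 0;
    const b2 = hasB2 ? bytes[i + 2] : 0;
    // Split three 8-bit bytes into four 6-bit groups.
    out += B64_ALPHABET[b0 >> 2];
    out += B64_ALPHABET[((b0 & 3) << 4) | (b1 >> 4)];
    out += hasB1 ? B64_ALPHABET[((b1 & 15) << 2) | (b2 >> 6)] : "=";
    out += hasB2 ? B64_ALPHABET[b2 & 63] : "=";
  }
  return out;
}

// Hypothetical helper: wrap binary glTF (.glb) bytes in a data URI.
function toGltfDataUri(glbBytes: Uint8Array): string {
  return "data:model/gltf-binary;base64," + toBase64(glbBytes);
}
```

The resulting string can be used wherever a model URL is expected, for example as the first argument to `GLTFLoader.load` in Three.js, so the model can live inside the bundled script instead of a separate web resource.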

The coordinates from the user’s mobile device come in as geographic coordinates: longitude, latitude and altitude. The next step was to map these values relative to a known coordinate in the building, which we chose to be the main entrance. We convert the main entrance’s geographic coordinates to Cartesian coordinates (X, Y and Z), do the same for the real-time coordinates from the user, and subtract the origin to get the offset in meters. The conversion from geographic to geocentric coordinates was done like so:

```typescript
// eslint-disable-next-line @typescript-eslint/consistent-type-definitions
export type CartesianCoordinates = { x: number; y: number; z: number };

// eslint-disable-next-line @typescript-eslint/consistent-type-definitions
export type GeographicCoordinates = { lat: number; lon: number; alt: number };

// Conversion factor from degrees to radians
const DEG_TO_RAD = Math.PI / 180;

// Constants for WGS84 Ellipsoid
const WGS84_A = 6378137.0; // Semi-major axis in meters
const WGS84_E2 = 0.00669437999014; // Square of eccentricity

// Function to convert geographic coordinates (lat, lon, alt) to ECEF (x, y, z)
export function geographicToECEF(coords: GeographicCoordinates): CartesianCoordinates {
  // Convert degrees to radians
  const latRad = coords.lat * DEG_TO_RAD;
  const lonRad = coords.lon * DEG_TO_RAD;

  // Calculate the radius of curvature in the prime vertical
  const N = WGS84_A / Math.sqrt(1 - WGS84_E2 * Math.sin(latRad) * Math.sin(latRad));

  // ECEF coordinates
  const x = (N + coords.alt) * Math.cos(latRad) * Math.cos(lonRad);
  const y = (N + coords.alt) * Math.cos(latRad) * Math.sin(lonRad);
  const z = (N * (1 - WGS84_E2) + coords.alt) * Math.sin(latRad);

  return { x, y, z };
}
```

This gave us fairly good precision, but not without the expected inaccuracy caused by being indoors.
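To make the offset step concrete, here is a minimal sketch of subtracting the origin from the user's position. The plain ECEF difference is what the text describes. The rotation into east/north/up axes is an extra step added here for illustration, so that the offset lines up with compass directions at the entrance rather than with the Earth-centered axes. The names `toECEF` and `offsetFromOrigin` and the example coordinates are hypothetical.

```typescript
type Geo = { lat: number; lon: number; alt: number };
type Vec3 = { x: number; y: number; z: number };
type LocalOffset = { east: number; north: number; up: number };

const DEG_TO_RAD = Math.PI / 180;
const WGS84_A = 6378137.0; // Semi-major axis in meters
const WGS84_E2 = 0.00669437999014; // Square of eccentricity

// Same geographic-to-ECEF conversion as in the listing, repeated so the sketch runs on its own.
function toECEF(c: Geo): Vec3 {
  const lat = c.lat * DEG_TO_RAD;
  const lon = c.lon * DEG_TO_RAD;
  const n = WGS84_A / Math.sqrt(1 - WGS84_E2 * Math.sin(lat) * Math.sin(lat));
  return {
    x: (n + c.alt) * Math.cos(lat) * Math.cos(lon),
    y: (n + c.alt) * Math.cos(lat) * Math.sin(lon),
    z: (n * (1 - WGS84_E2) + c.alt) * Math.sin(lat),
  };
}

// Offset of `user` from `origin` in meters, expressed in the origin's
// local east/north/up frame (assumption: not part of the original code).
function offsetFromOrigin(origin: Geo, user: Geo): LocalOffset {
  const o = toECEF(origin);
  const u = toECEF(user);

  // Step from the text: subtract the origin to get the offset in meters.
  const dx = u.x - o.x;
  const dy = u.y - o.y;
  const dz = u.z - o.z;

  // Added step: rotate the ECEF difference into the local tangent plane at the origin.
  const lat = origin.lat * DEG_TO_RAD;
  const lon = origin.lon * DEG_TO_RAD;
  const sinLat = Math.sin(lat);
  const cosLat = Math.cos(lat);
  const sinLon = Math.sin(lon);
  const cosLon = Math.cos(lon);

  return {
    east: -sinLon * dx + cosLon * dy,
    north: -sinLat * cosLon * dx - sinLat * sinLon * dy + cosLat * dz,
    up: cosLat * cosLon * dx + cosLat * sinLon * dy + sinLat * dz,
  };
}

// Hypothetical coordinates, for illustration only (not the real venue).
const entrance: Geo = { lat: 59.0, lon: 10.0, alt: 0 };
const phone: Geo = { lat: 59.0001, lon: 10.0002, alt: 3 };
const offset = offsetFromOrigin(entrance, phone);
```

For distances on the scale of a building, the local frame can be treated as flat, so `offset.east` and `offset.north` can be used directly as horizontal distances from the entrance.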

In our solution the current position is then represented by an icon moving around the 3D model based on the current GPS data from the device.
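Placing the icon then comes down to mapping the metric offset onto the axes of the 3D scene. The sketch below assumes the offset is available as east/north/up components in meters, that the model was exported from Blender with its default Y-up glTF conversion, and that the model was north-aligned in Blender with one unit equal to one meter. None of this is confirmed by the actual component, and `toScenePosition` is a hypothetical helper.

```typescript
type LocalOffsetMeters = { east: number; north: number; up: number };
type ScenePosition = { x: number; y: number; z: number };

// Map a local east/north/up offset (meters) to a Y-up, right-handed scene
// such as the one Three.js uses: east -> +X, up -> +Y, north -> -Z.
// Assumption: the model's origin sits at the main entrance.
function toScenePosition(offset: LocalOffsetMeters): ScenePosition {
  return { x: offset.east, y: offset.up, z: -offset.north };
}
```

The returned values could be assigned to the icon object's position on each location update, for example with `icon.position.set(p.x, p.y, p.z)` on a Three.js object. If the model is rotated relative to north, an additional rotation around the Y axis would be needed.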

To connect this representation to real-time data from all currently active users, we set up an Azure SignalR Service, with an accompanying Azure Storage account and Azure Function App for the backend, bringing it all to the cloud, almost like a stairway to heaven. With this setup, we could use the @microsoft/azure package inside the PCF component to receive the connection, disconnection and location update messages broadcast from all other users, showing where they are right now.
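On the client side, the three kinds of messages can be folded into a single map of active users. The sketch below shows only that bookkeeping. The message shapes, field names and the `applyMessage` helper are hypothetical, since the hub contract is not shown here.

```typescript
// Hypothetical message shapes; the real hub contract may differ.
type UserMessage =
  | { kind: "connected"; userId: string }
  | { kind: "disconnected"; userId: string }
  | { kind: "location"; userId: string; lat: number; lon: number; alt: number };

// null means "connected, but no position received yet".
type UserState = { lat: number; lon: number; alt: number } | null;

// Apply one broadcast message and return a new map,
// so callers can detect a change by comparing references.
function applyMessage(
  users: ReadonlyMap<string, UserState>,
  msg: UserMessage
): Map<string, UserState> {
  const next = new Map(users);
  switch (msg.kind) {
    case "connected":
      // Keep an existing position if the user was already known.
      if (!next.has(msg.userId)) next.set(msg.userId, null);
      break;
    case "disconnected":
      next.delete(msg.userId);
      break;
    case "location":
      next.set(msg.userId, { lat: msg.lat, lon: msg.lon, alt: msg.alt });
      break;
  }
  return next;
}
```

With a SignalR client such as the `HubConnection` from the `@microsoft/signalr` package (an assumption about the client library), each `connection.on(...)` handler would build one of these messages and pass it to `applyMessage`, after which the 3D view places one icon per entry that has a position.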