We all know that Power BI is a beautiful tool for dashboarding, but the tricky question is always where to get the data from. The source needs to be fast and, most importantly, correct.

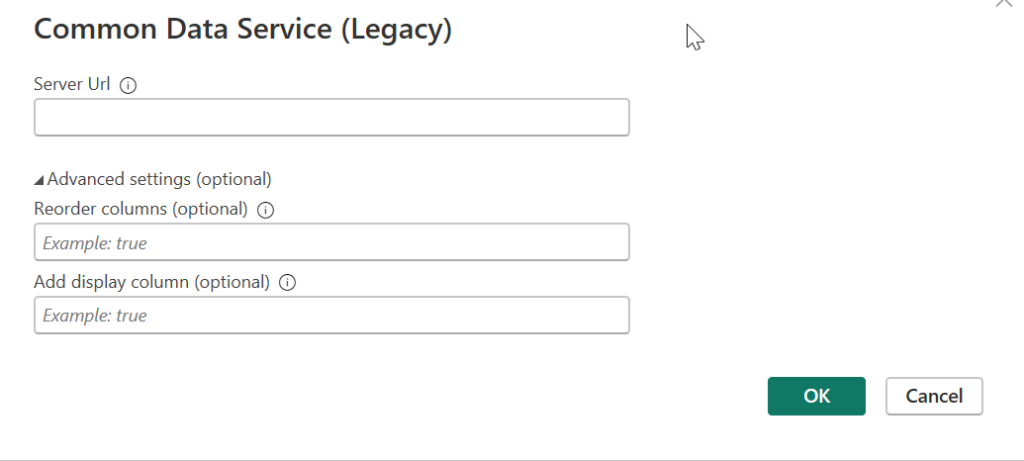

The traditional way, from what I gather, is the Common Data Service (CDS) connector, which Power BI now lists as legacy. It gives us tables that are easy to view and edit.

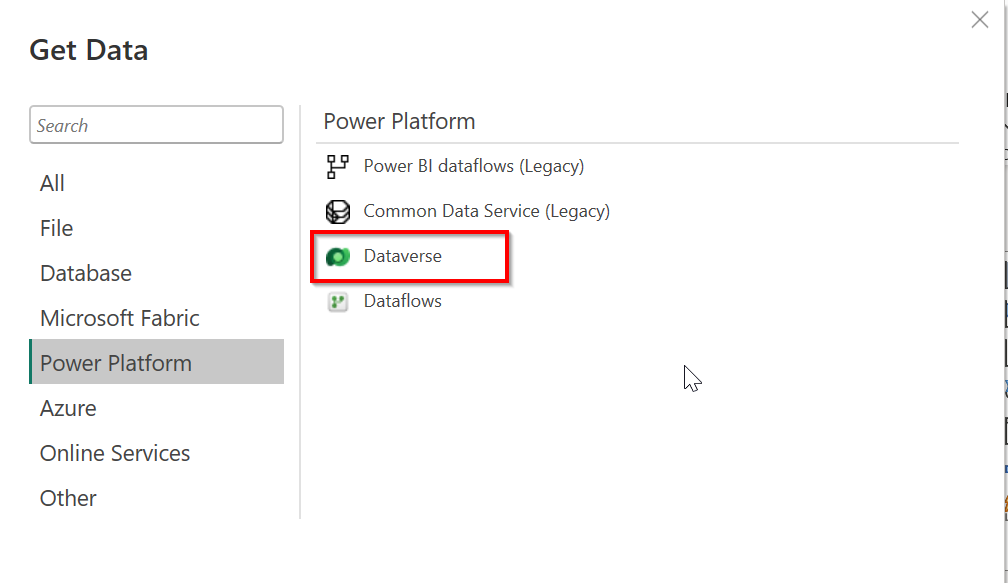

Another way, which also gives us DirectQuery as a connection mode, is the connector that goes directly to Dataverse.

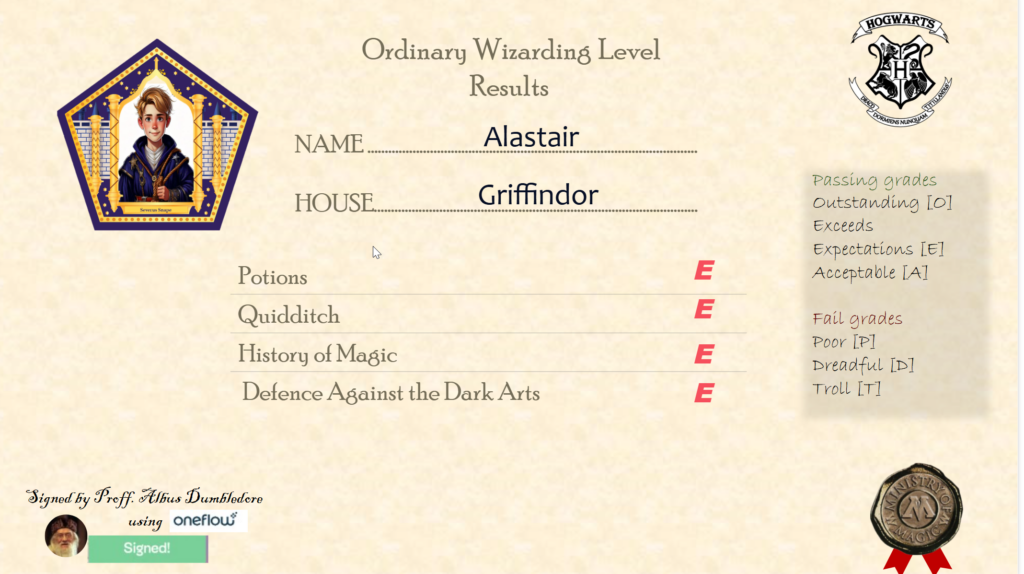
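One small stumbling block with this connector is the environment value it asks for. As far as we know, it expects the bare environment domain, without `https://` and without a trailing slash. Below is a minimal sketch of a helper that cleans up a URL copied from the browser. The function name and the `contoso` environment are hypothetical and only serve as an illustration.

```python
from urllib.parse import urlparse


def dataverse_environment_domain(environment_url: str) -> str:
    """Return the bare host name of a Dataverse environment URL.

    Assumption: the Dataverse connector wants only the domain,
    for example "contoso.crm4.dynamics.com" (a made-up environment).
    """
    cleaned = environment_url.strip()
    # urlparse only recognises the host when a scheme is present, so add one if missing.
    if "://" not in cleaned:
        cleaned = "https://" + cleaned
    host = urlparse(cleaned).hostname
    if not host:
        raise ValueError(f"Not a valid environment URL: {environment_url!r}")
    return host


if __name__ == "__main__":
    print(dataverse_environment_domain("https://contoso.crm4.dynamics.com/"))
```

The returned value is what you would paste into the connector dialog before choosing DirectQuery.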

But what about Fabric? If we need to create many reports on the same CRM data, it would be perfect to have the data in OneLake, transform it with Dataflow Gen2, and share one data model across different reports, dashboards, apps, and so on.

There are several ways to do that. The most tempting one is to use a Fabric Premium subscription to create a Lakehouse and then use Azure Synapse Link to sync the tables from Power Apps to Fabric.

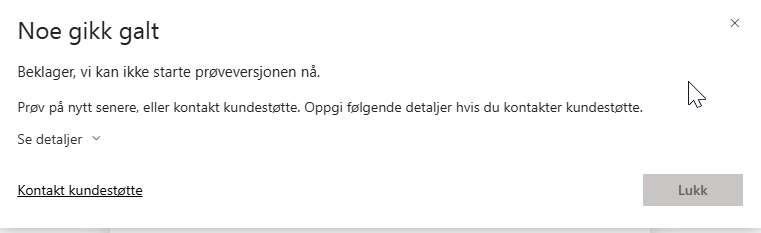

Unfortunately, with a lab environment it is currently not possible to create the OneLake on a Fabric workspace. Hopefully, this will be fixed in the future.

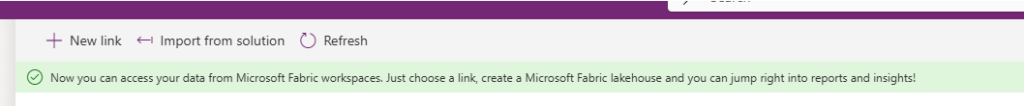

Another way is to create a resource group and an Azure storage account in the Azure Portal. If the user has the correct roles and access, we should, in theory, be able to load tables from Power Apps into a Blob container in this storage account. This approach got us much further, and we received a beautiful message in Power Apps.

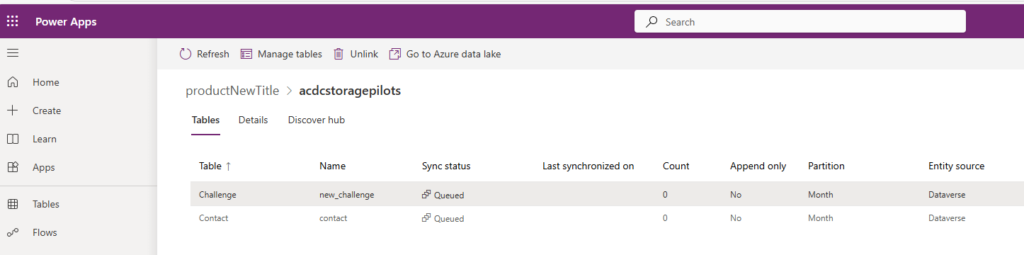

However, when we tried to create the link, the tables were queued but never appeared in Blob Storage.

Another approach that we actively tried was inspired by our great colleagues here, Itera Power Potters and It’s EVIDIosa, not Leviosaaaa. The former describe it quite nicely in their blog post: Fabric And data. | Arctic Cloud Developer Challenge Submissions.

However, this approach did not work for us, because our work tenant is registered in a different region from the Azure workspace where we are developing our CRM system.

Conclusion: If you are thinking of using Fabric, make sure your solution and Fabric are in the same region, and don’t use the lab user.

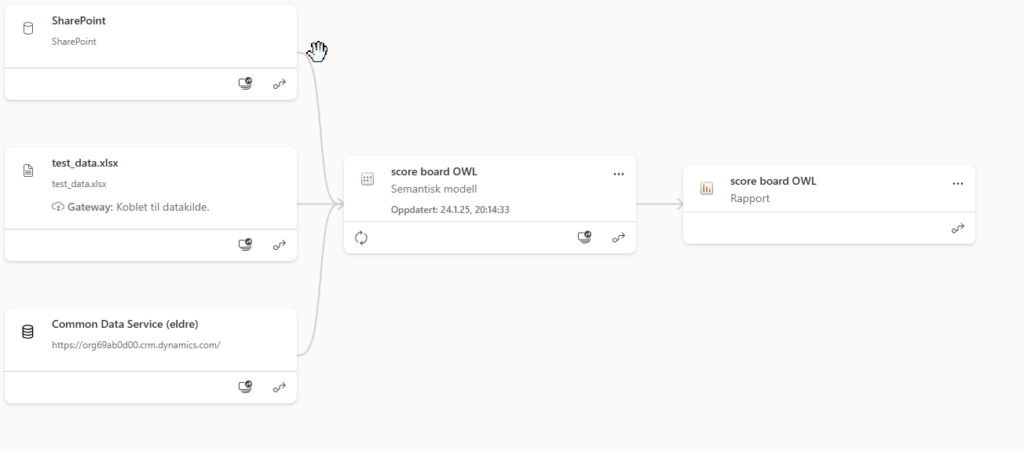
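The two lessons above can be written down as a tiny pre-flight check to run before spending time on the link setup. This is only a sketch: the function name is hypothetical, and the region names are values you look up and type in yourself, not something the code reads from Azure or Power Platform.

```python
def fabric_link_blockers(dataverse_region: str, fabric_region: str, is_lab_user: bool) -> list[str]:
    """Return the reasons why linking Dataverse to Fabric is likely to fail.

    An empty list means neither of the two problems we ran into applies.
    """

    def normalize(region: str) -> str:
        # Treat "North Europe", "north-europe" and "northeurope" as the same region.
        return "".join(ch for ch in region.lower() if ch.isalnum())

    blockers = []
    if normalize(dataverse_region) != normalize(fabric_region):
        blockers.append(
            f"Region mismatch: Dataverse is in {dataverse_region!r}, Fabric is in {fabric_region!r}."
        )
    if is_lab_user:
        blockers.append("Lab users could not create the OneLake link in our tests.")
    return blockers


if __name__ == "__main__":
    for problem in fabric_link_blockers("West Europe", "North Europe", is_lab_user=True):
        print(problem)
```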

In the end, to get a beautiful report that updates in real time, we will go for the second approach described here: connecting directly to Dataverse and using DirectQuery, so that changes show up in the report as they happen.

We also used SharePoint to get images to visualize in the report, and Excel files (xlsx) for some test data.

P.S. A nice article that really inspired us: 5 ways to get your Dataverse Data into Microsoft Fabric / OneLake – DEV Community