NOTE TO THE JURY: we have taken your comment on board and added details at the bottom of this article.

In our Wayfinder Academy, we take a comprehensive and magical approach to understanding the student’s history, aspirations, and potential to recommend the best possible new school. The process is detailed, thorough, and personalized, ensuring the student is matched with an environment where they can thrive.

As a reminder of the process: we assume a student who didn’t feel right about their current faculty has filed an application. Immediately after that, we request the tabelle (historical data) from their current faculty, ask the student to upload some photos of their most memorable moments, and then invite them to an interview. While we are still working on the interview step and will share the details later, in this article we want to describe one of our approaches to mining extra insight from the student’s interview: analysing their emotions.

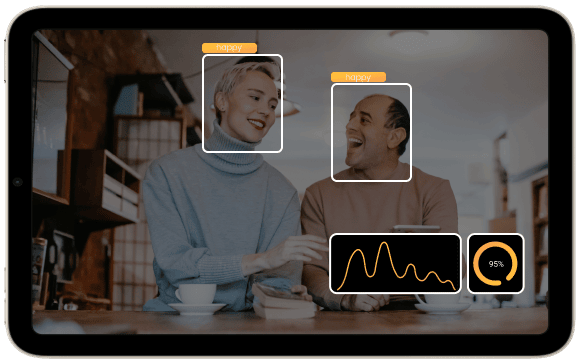

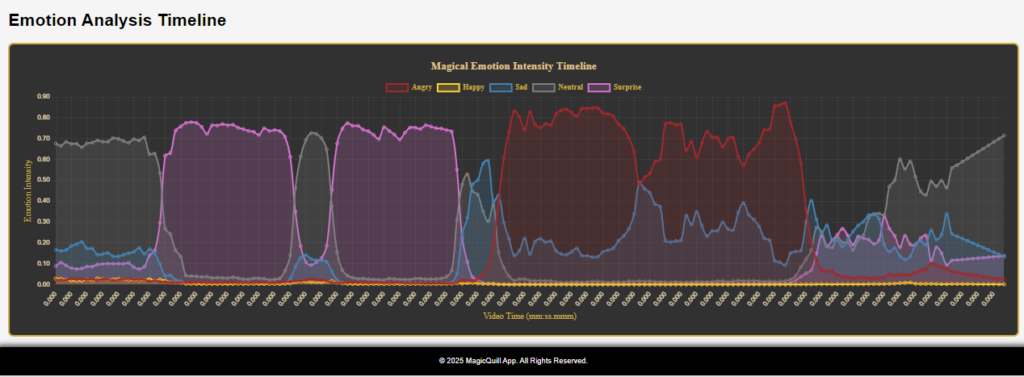

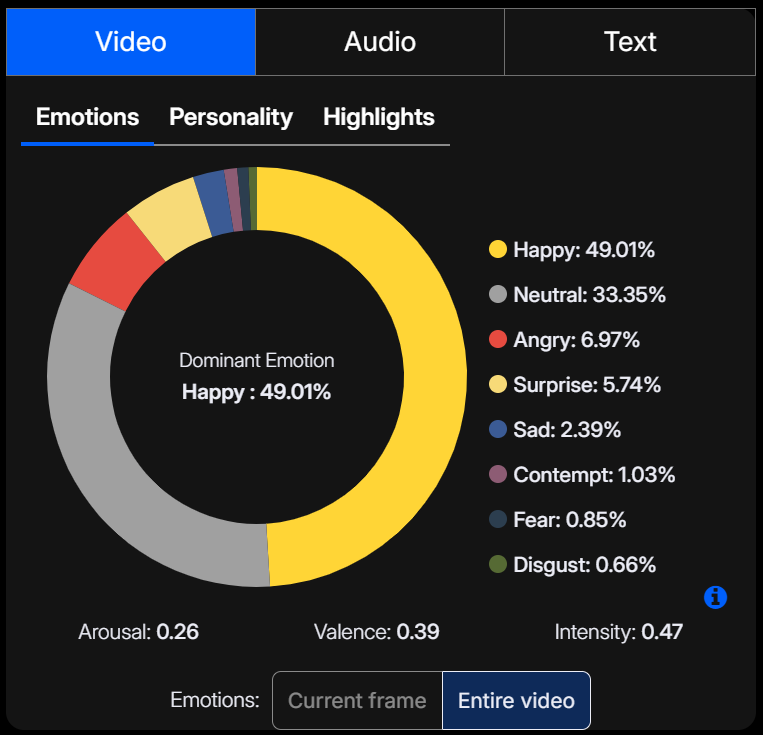

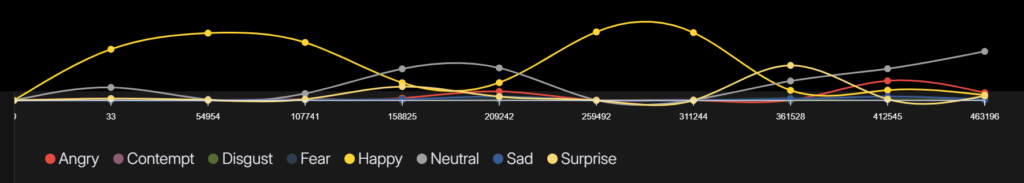

We use this emotion recognition alongside the interview to get 360-degree insight into the student’s reactions to the questions, which are designed to uncover their values, aspirations, fears, etc. We can use this insight to calculate the probability of their affinity with each faculty and identify the one with the highest score (the scoring approach will be shared in a different post).

So, we use video stream capture to record an interview session and extract the emotional dataset.

This gives us one more dimension that extends the student’s standard datasets, such as feedback, historical data from previous schools, etc.
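To make the idea of an "emotional dataset" more concrete, here is a minimal sketch of how per-frame emotion scores from a recording could be condensed into one summary per interview. The `FrameEmotions` shape, the emotion names, and the `summarizeEmotions` helper are all assumptions made for illustration; they are not the actual report format returned by the analysis service.

```typescript
// Hypothetical shape: one record per analysed video frame, scores in [0, 1].
type EmotionScores = Record<string, number>;

interface FrameEmotions {
  timestampMs: number; // position of the frame in the recording
  scores: EmotionScores; // e.g. { happy: 0.7, sad: 0.1 }
}

interface EmotionSummary {
  averages: EmotionScores; // mean score per emotion over all frames
  dominant: string | null; // emotion with the highest mean, null if there are no frames
}

// Averages every emotion over the whole session and picks the strongest one.
// An emotion missing from a frame is treated as a score of 0 for that frame.
function summarizeEmotions(frames: FrameEmotions[]): EmotionSummary {
  const totals: EmotionScores = {};
  for (const frame of frames) {
    for (const [emotion, score] of Object.entries(frame.scores)) {
      totals[emotion] = (totals[emotion] ?? 0) + score;
    }
  }

  const averages: EmotionScores = {};
  let dominant: string | null = null;
  let best = -Infinity;
  for (const [emotion, total] of Object.entries(totals)) {
    const mean = total / frames.length;
    averages[emotion] = mean;
    if (mean > best) {
      best = mean;
      dominant = emotion;
    }
  }
  return { averages, dominant };
}
```

A summary like this could sit next to the other student datasets as one extra row per interview; how it feeds into the faculty score is outside the scope of this sketch.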

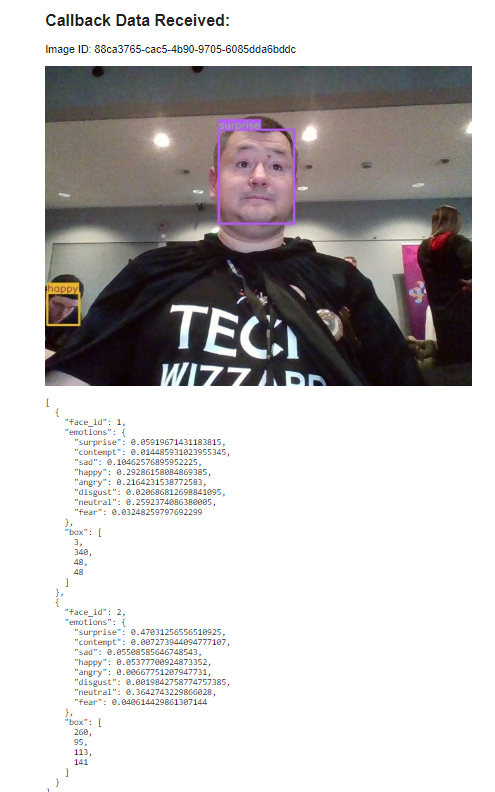

We use the imentiv.ai API to analyze the video and grab the final report. We then build the final dashboard in Power BI (we love it) and embed it into OneLake.

Imentiv AI generates emotion recognition reports using different types of content, such as video, photos, text, and audio.

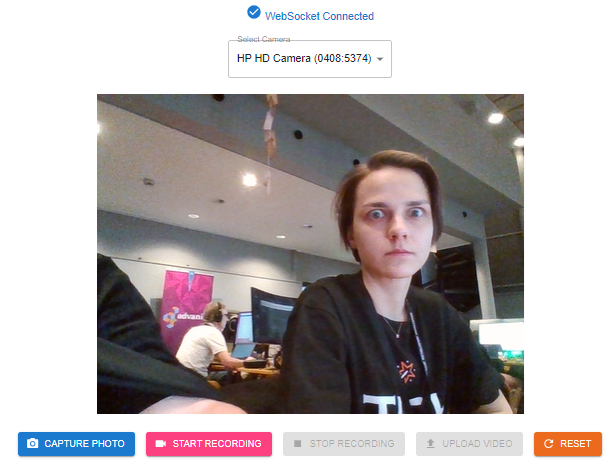

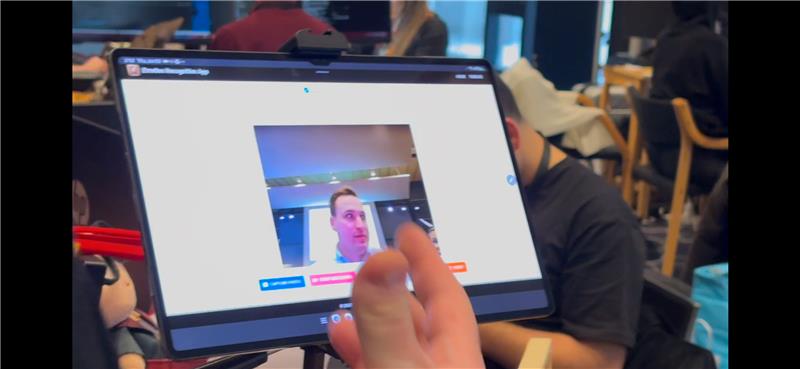

We implemented a single-page application that creates an interactive experience by recognizing the emotions in an image captured via the webcam on our tablet. Analysing a video stream takes more time, so we will demonstrate it later.

The app consists of two parts: a PoC to recognize the emotions in a photo from a webcam and an example of an emotion recognition report.

To build the PoC application, we decided to use the Node.js stack. The engine is Bun, a modern and highly efficient alternative to Node.js. Unlike Node.js, which is written mostly in C++, Bun is written in Zig.

For the front end, we use React and Chart.js. We host the PoC on our laptop. To make it available on the public internet, we use Cloudflare Tunnel. It also handles SSL termination, so the service is secured by default without any significant effort.
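For the report view, the following sketch shows one way emotion scores could be turned into a Chart.js bar chart configuration. The `toBarChartConfig` helper, the local `BarChartConfig` type, and the fixed 0 to 1 axis range are illustrative assumptions; the block deliberately builds a plain object and does not import Chart.js, so it does not represent our actual component code.

```typescript
// Minimal local description of the part of a Chart.js bar config we fill in.
interface BarChartConfig {
  type: "bar";
  data: {
    labels: string[];
    datasets: { label: string; data: number[] }[];
  };
  options: { scales: { y: { min: number; max: number } } };
}

// Builds a bar chart config with one bar per emotion, strongest emotion first.
function toBarChartConfig(
  scores: Record<string, number>,
  title: string,
): BarChartConfig {
  // Sort descending by score so the dominant emotion is the leftmost bar.
  const entries = Object.entries(scores).sort(([, a], [, b]) => b - a);
  return {
    type: "bar",
    data: {
      labels: entries.map(([emotion]) => emotion),
      datasets: [{ label: title, data: entries.map(([, score]) => score) }],
    },
    // Assumes scores are normalised to the range [0, 1].
    options: { scales: { y: { min: 0, max: 1 } } },
  };
}
```

In a React component, an object like this could be handed to `new Chart(canvas, config)` once the canvas element is mounted.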

The app server and the client app run inside a Docker container, so you can deploy easily with a single command: docker-compose up --build.

To optimize the final container size and speed up the build, we use a Dockerfile with two stages (a multi-stage build): the first stage builds the app and the second one runs the final artifacts.

PS:

Badges we claim:

Thieving bastards – we are using a third-party platform to recognize emotions in video and photos.

Hipster – we use Bun to run the application.

Hogwarts Enchanter – we use the mystical AI of the imentiv.ai API to grab the emotional reports and visualize them in a user-friendly way (see the screenshot above). Our enchanted workflow uses the data and makes it available in OneLake. The wizarding world comes closer when we see AI-based deep insight from various data sources in one place, in an easy to read and interpret format.

Right now – we are using a WebSocket server to implement real-time communication between the client and server side.

Client side salsa – we use React to implement the front end.
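As an illustration of the real-time exchange behind the Right now badge, here is a minimal sketch of a message protocol the client and server could speak over a WebSocket. The message kinds (`frame`, `emotions`, `error`), the field names, and the synchronous `analyze` callback are hypothetical; a real analyser would call the emotion recognition service asynchronously.

```typescript
// Hypothetical messages: the client sends a webcam snapshot, the server replies with scores.
type ClientMessage = { kind: "frame"; imageBase64: string };
type ServerMessage =
  | { kind: "emotions"; scores: Record<string, number> }
  | { kind: "error"; reason: string };

// Validates raw socket text; returns null for anything that is not a well-formed frame message.
function parseClientMessage(raw: string): ClientMessage | null {
  let value: unknown;
  try {
    value = JSON.parse(raw);
  } catch {
    return null; // not JSON at all
  }
  if (typeof value !== "object" || value === null) return null;
  const fields = value as Record<string, unknown>;
  const image = fields["imageBase64"];
  if (fields["kind"] === "frame" && typeof image === "string") {
    return { kind: "frame", imageBase64: image };
  }
  return null;
}

// Turns one incoming socket message into the serialized reply to send back.
// `analyze` stands in for the emotion recognition call and is an assumption.
function handleMessage(
  raw: string,
  analyze: (imageBase64: string) => Record<string, number>,
): string {
  const message = parseClientMessage(raw);
  const reply: ServerMessage =
    message === null
      ? { kind: "error", reason: "unsupported message" }
      : { kind: "emotions", scores: analyze(message.imageBase64) };
  return JSON.stringify(reply);
}
```

With Bun, a function like `handleMessage` could be called from the `message` handler of the `websocket` option passed to `Bun.serve`, sending the returned string back with `ws.send`. Keeping the protocol logic in plain functions also makes it easy to test without opening a socket.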

PS2: pls come over to our camp and test it out! We want to know how you feel! 🙂