To improve the business productivity of our solution, we built a workflow using Dataflows. Instead of entering and importing data manually, we created several dataflows to enrich the solution as a whole. The dataflows were set to refresh automatically at a scheduled interval, so that our database would always stay up to date for both new and existing records. The dataflows used a Web API as their source, which enabled us to build a dynamic solution with near real-time data.

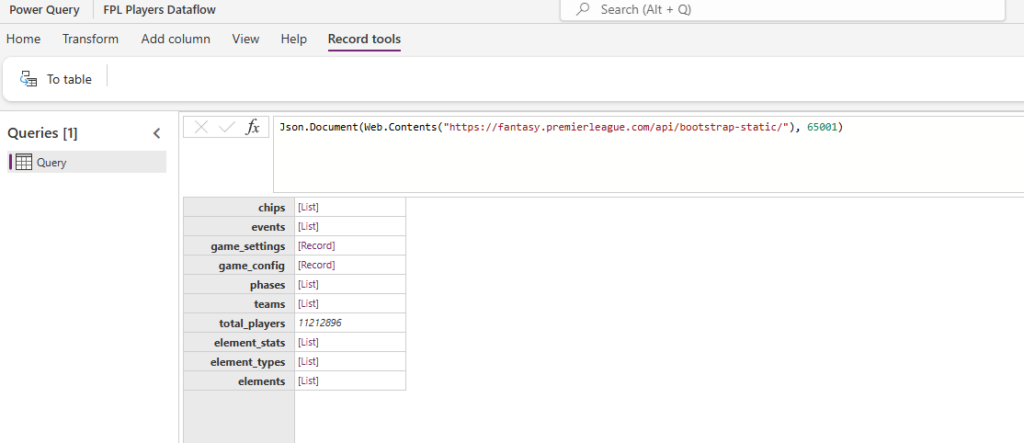

Below is a snippet of the public API that we used to populate the respective tables in our Dataverse database.

Dataflows offered an effective, low-code approach to integrating external data into our system. The API exposed multiple tables and columns, which gave us the flexibility to cherry-pick the statistics that were of most interest for our solution. We followed a simple three-step process for managing each dataflow:

- Select table

- Filter and transform data

- Remove unnecessary columns

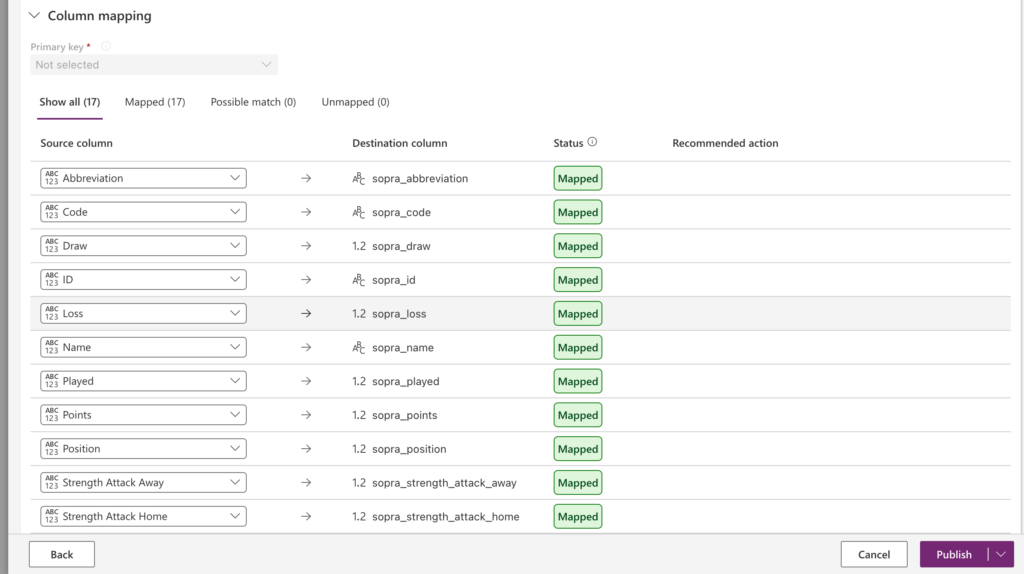
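The three steps above can be sketched in plain Python to show the shape of the transformation. This is only an illustration of the idea, not the Power Query steps the dataflow actually runs: the payload, the table name `players`, and the column names `id`, `name`, `goals` and `internal_note` are all hypothetical stand-ins for the real API.

```python
from typing import Any

Row = dict[str, Any]

# Hypothetical API payload: table names mapped to lists of rows.
SAMPLE_RESPONSE: dict[str, list[Row]] = {
    "players": [
        {"id": 1, "name": " Ada ", "goals": "12", "internal_note": "x"},
        {"id": 2, "name": "Bo", "goals": "0", "internal_note": "y"},
        {"id": 3, "name": "Cy", "goals": "7", "internal_note": "z"},
    ],
    "teams": [{"id": 10, "name": "Reds"}],
}


def select_table(response: dict[str, list[Row]], table: str) -> list[Row]:
    """Step 1: pick one table out of the API response."""
    return response[table]


def filter_and_transform(rows: list[Row]) -> list[Row]:
    """Step 2: convert text to numbers, trim names, drop rows with zero goals."""
    cleaned: list[Row] = []
    for row in rows:
        goals = int(row["goals"])
        if goals == 0:
            continue  # filter: keep only rows that carry a statistic
        cleaned.append({**row, "name": row["name"].strip(), "goals": goals})
    return cleaned


def remove_columns(rows: list[Row], unwanted: set[str]) -> list[Row]:
    """Step 3: drop columns the destination table does not need."""
    return [{k: v for k, v in row.items() if k not in unwanted} for row in rows]


def run_dataflow(response: dict[str, list[Row]], table: str, unwanted: set[str]) -> list[Row]:
    """Chain the three steps in the order described above."""
    return remove_columns(filter_and_transform(select_table(response, table)), unwanted)


result = run_dataflow(SAMPLE_RESPONSE, "players", {"internal_note"})
```

Keeping each step as its own function mirrors how the steps are applied one after another in the dataflow editor, which makes it easy to see where a given row or column was dropped.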

Once the data processing was complete, we mapped the query columns to the corresponding columns in our Dataverse table.

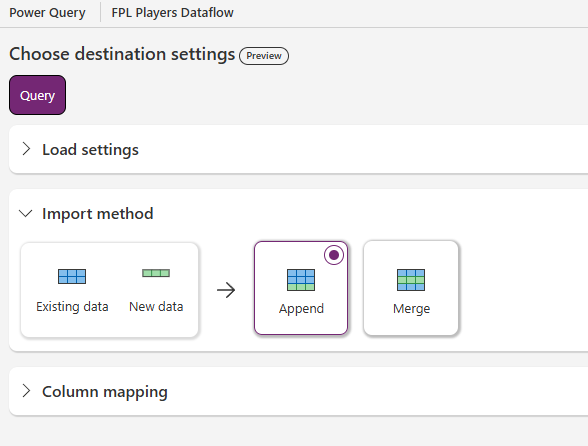
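Conceptually, the column mapping is a lookup from source column names to destination column names. The sketch below illustrates that idea in Python; the destination names (`new_externalid`, `new_name`, `new_goals`) and the source names are hypothetical and do not come from our actual table.

```python
from typing import Any

# Hypothetical mapping: source column name -> destination column name.
COLUMN_MAP: dict[str, str] = {
    "id": "new_externalid",
    "name": "new_name",
    "goals": "new_goals",
}


def map_columns(row: dict[str, Any], column_map: dict[str, str]) -> dict[str, Any]:
    """Rename mapped columns; source columns without a mapping are left out."""
    missing = [src for src in column_map if src not in row]
    if missing:
        raise KeyError(f"source columns missing from row: {missing}")
    return {dest: row[src] for src, dest in column_map.items()}


mapped = map_columns({"id": 1, "name": "Ada", "goals": 12, "extra": True}, COLUMN_MAP)
```

Failing loudly on a missing source column is a design choice for this sketch: a silent gap in the mapping is harder to notice than an error at load time.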

Lastly, for the import method we chose the merge option so that existing data would be updated automatically on each refresh. This option was also essential for avoiding duplicate data, and it spared us from manually configuring bulk deletion rules.
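To show why merging avoids duplicates, here is a minimal Python sketch of an upsert keyed on a single column. It assumes that rows are matched on a key column (called `id` here, a hypothetical name) and it is a conceptual model only, not a description of how dataflows implement the merge internally.

```python
from typing import Any

Row = dict[str, Any]


def merge_rows(existing: list[Row], incoming: list[Row], key: str) -> list[Row]:
    """Update rows whose key already exists and append rows with a new key."""
    merged: dict[Any, Row] = {row[key]: dict(row) for row in existing}
    for row in incoming:
        # Existing key: overwrite the changed columns. New key: create the row.
        merged.setdefault(row[key], {}).update(row)
    return list(merged.values())


# Hypothetical table contents before the refresh, and the refreshed API rows.
table = [{"id": 1, "goals": 12}, {"id": 2, "goals": 3}]
refresh = [{"id": 2, "goals": 4}, {"id": 3, "goals": 1}]

after_first = merge_rows(table, refresh, key="id")
after_second = merge_rows(after_first, refresh, key="id")
```

Running the same refresh twice leaves the table unchanged the second time, which is the property that makes a scheduled refresh safe to repeat. An append-only import would instead add the rows for `id` 2 and 3 again on every run.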